Some tips on the empirical analysis of algorithms

Introduction

Last November, Magnus Lie Hetland's book appeared, entitled “Python Algorithms: Mastering Basic Algorithms in the Python Language”. The author has been programming for many years and now he is reading a course in the theory of algorithms at a Norwegian university. In his book, he explains with quite simple words methods of constructing and analyzing algorithms, and also gives many examples oriented to Python programmers. The author focuses on a practical approach to the construction and optimization of solutions of various algorithmic problems. One review says that this book can be compared with the classic work of Cormen.

Tanenn and I are gradually translating this book, and I bring to your attention the translation of the part of the first chapter - "Empirical Evaluation of Algorithms".

Empirical evaluation of algorithms

')

This book describes the design of algorithms (and the closely related analysis of algorithms). But in the development there is also another important process, vital for creating large real-world projects, this is the optimization of algorithms. From the point of view of this separation, the design of the algorithm can be viewed as a way to achieve low asymptotic complexity of the algorithm (through the development of an efficient algorithm), and optimization as a reduction of the constants hidden in this asymptotics.

Although I can give you some tips on designing algorithms in Python, it’s hard to guess exactly which tricks and tricks will give you better performance for a particular task you are working on or for your equipment and version of Python. (Asymptotics are used just so that there is no need to resort to such things). In general, in some cases, tricks may not be required at all, because your program is already quite fast. The simplest thing you can do in most cases is just to try and watch. If you have an idea of a hack, just try it out! Implement your trick and run a few tests. Are there any improvements? And if this change makes your code less readable, and the performance gain is small, then consider - is it worth it?

Although the theoretical aspects of the so-called experimental algorithms (that is, the experimental evaluation of algorithms and their implementation) are beyond the scope of this book, I will give you some practical tips that can be very useful.

Tip 1. If possible, do not worry about it.

Concern about asymptotic complexity can be very helpful. Sometimes the solution to the problem due to the complexity in practice may cease to be so. However, constant constants in asymptotics are often not critical at all. Try a simple implementation of the algorithm to begin with, and make sure that it works stably. If it works, do not touch it.

Tip 2. To measure work time, use timeit.

The timeit module is designed to measure running time. Although you will need to do a lot of work to get really reliable data, for practical purposes timeit will be fine. For example:

>>> import timeit >>> timeit.timeit("x = 2 + 2") 0.034976959228515625 >>> timeit.timeit("x = sum(range(10))") 0.92387008666992188 The timeit module can be used directly from the command line, for example:

$ python -m timeit -s"import mymodule as m" "m.myfunction()" There is something that you need to be careful with when using timeit: side effects that will affect the re-execution of timeit. The timeit function will run your code several times to increase accuracy, and if past runs affect subsequent ones, you will be in a difficult position. For example, if you measure the execution speed of something like mylist.sort (), the list will be sorted for the first time only. During the remaining thousands of launches, the list will already be sorted and this will give an unrealistically small result.

More information about this module and how it works can be found in the documentation of the standard Python library .

Tip 3. To find bottlenecks, use a profiler.

Often, in order to understand which parts of the program require optimization, various guesses and assumptions are made. Such assumptions are often wrong. Instead of guessing, use a profiler. The standard Python distribution comes with several profilers, but cProfile is recommended. They are as easy to use as timeit, but it gives more detailed information about what time is spent on when executing the program. If the main function of your program is main, you can use the profiler as follows:

import cProfile cProfile.run('main()') This code will display a report on the operating time of various functions of the program. If your system does not have a cProfile module, use profile instead. More information about these modules can be found in the documentation. If you are not so interested in the implementation details, but just need to evaluate the behavior of the algorithm on a specific data set, use the trace module from the standard library. With it, you can count the number of times an expression or a call will be executed in a program.

Tip 4. Show results graphically

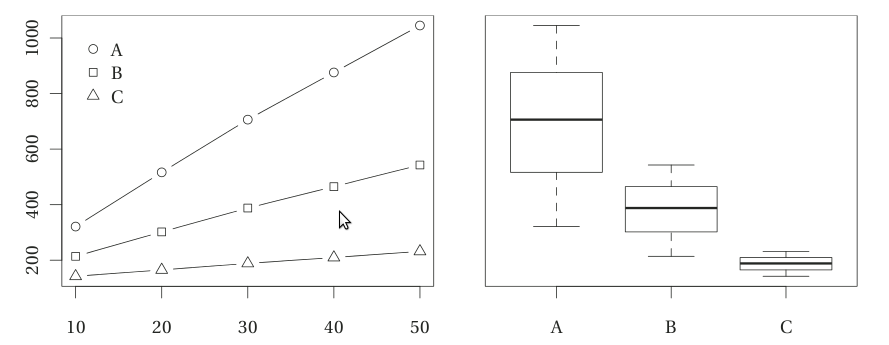

Visualization can be a wonderful way to understand what's what. For performance studies, graphs are often used (for example, to estimate the relationship between the amount of data and execution time) and “box with mustache” diagrams showing the distribution of time over several runs. Examples can be seen in the figure.

An excellent library for building graphs and diagrams from Python is matplotlib (available at http://matplotlib.sf.net ).

Tip 5. Be careful in conclusions based on comparison of lead times.

This advice is rather vague, because there are many traps that can be reached by drawing conclusions about which way is better, based on a comparison of the work time. First, any difference you see can be determined by chance. If you use special tools like timeit, the risk of such a situation is less because they repeat the measurement of the time to evaluate the expression several times (or even repeat the whole measurement several times, choosing the best result). Thus, there will always be random errors and if the difference between the two implementations does not exceed a certain error, one cannot say that these implementations are different (although it is also impossible to say that they are the same).

The problem is compounded if you compare more than two implementations. The number of pairs for comparison increases in proportion to the square of the number of compared versions, greatly increasing the likelihood that at least two of the versions will appear slightly different. (This is called the problem of multiple comparisons). There are statistical solutions to this problem, but the easiest way is to repeat the experiment with two suspicious versions. You may need to do this several times. Do they still look alike?

Secondly, there are several points that need to be paid attention to when comparing average values. At a minimum, you should compare real-time averages. Usually, in order to get exponential numbers when measuring performance, the running time of each program is normalized by dividing by the execution time of a standard, simple algorithm. Indeed, this may be useful, but in some cases it will make the results of measurements meaningless. A few useful tips on this topic can be found in the article Fleming and Wallace. You can also read Bast and Weber's “Don't compare averages,” or a newer article by Citron et al. " The harmonic or geometric mean: does it really matter? ".

And thirdly, perhaps your conclusions cannot be generalized. Similar measurements on a different input data set and on a different gland may give different results. If someone will use the results of your measurements, you must consistently document how you received them.

Tip 6. Be careful when drawing conclusions about the asymptotics from experiments.

If you want to say something definitively about the asymptotics of the algorithm, then you need to analyze it, as described earlier in this chapter. Experiments may give you hints, but they are obviously carried out on finite data sets, and asymptotics are what happens with arbitrarily large data sizes. On the other hand, unless you work in an academic field, the goal of asymptotic analysis is to draw some conclusion about the behavior of an algorithm implemented in a particular way and running on a specific data set, which means that the measurements must be appropriate.

Suppose you assume that the algorithm works with quadratic complexity, but you cannot definitively prove it. Can you use experiments to prove your guess? As already mentioned, experiments (and optimization of algorithms) deal mainly with constant coefficients, but there is a solution. The main problem is that your hypothesis is actually untestable experimentally. If you claim that the algorithm has complexity O (n²), then the data can neither confirm nor deny it. However, if you make your hypothesis more specific, it will become testable. You could, for example, based on some data, assume that the running time of a program will never exceed 0.24n² + 0.1n + 0.03 seconds in your environment. This is a testable (more precisely, refuted) hypothesis. If you made a lot of measurements, but you still cannot find counter-examples, then your hypothesis may be true. And this already confirms the hypothesis of the quadratic complexity of the algorithm.

Conclusion

The book itself can be ordered, for example, on Amazon .

Next in line for translation are chapters on presentation and work with graphs and trees. The author describes the practical aspects of working effectively with these data structures using Python tools.

Source: https://habr.com/ru/post/111858/

All Articles