Building video delivery systems based on Adobe HTTP Dynamic Streaming and open source

On one of our projects, a customer once again asked us to build a system for converting, storing and delivering video over the Internet. A typical task of this kind is to create your own small (or not so small) "Tube", but with professional content rather than UGC.


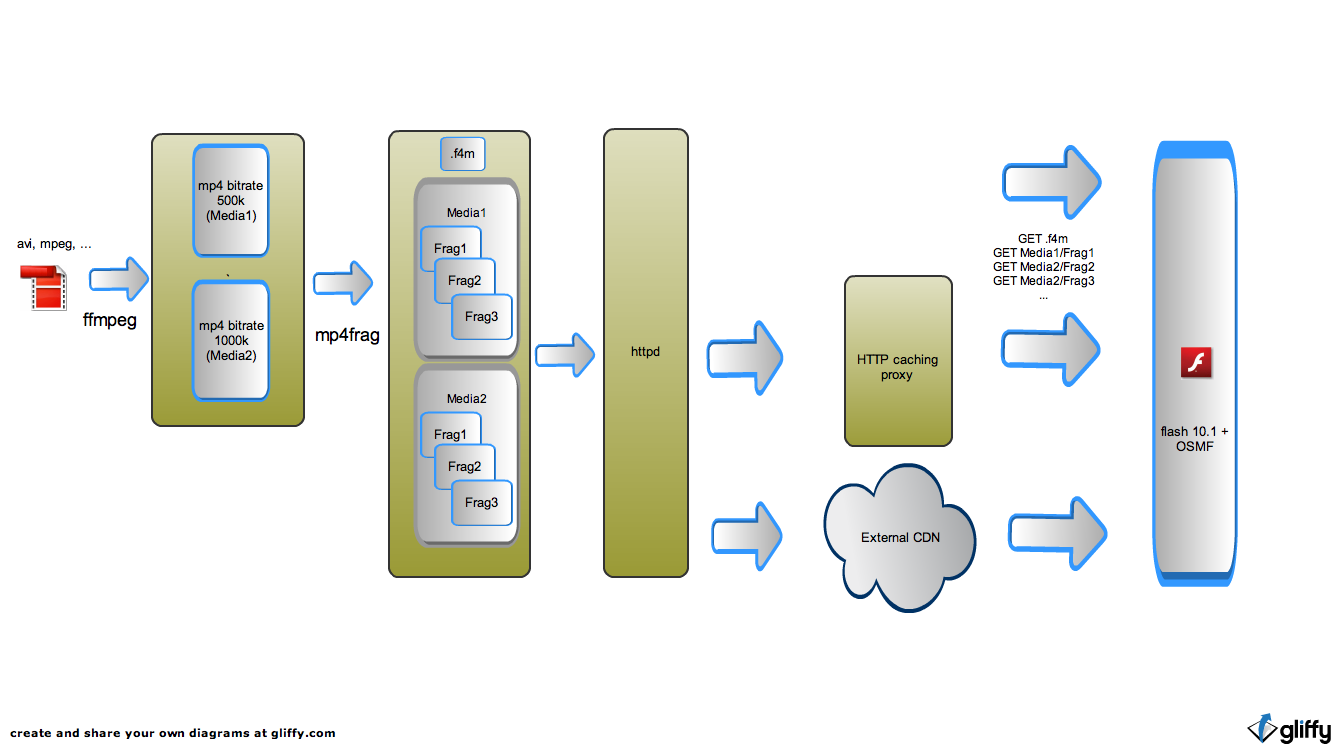

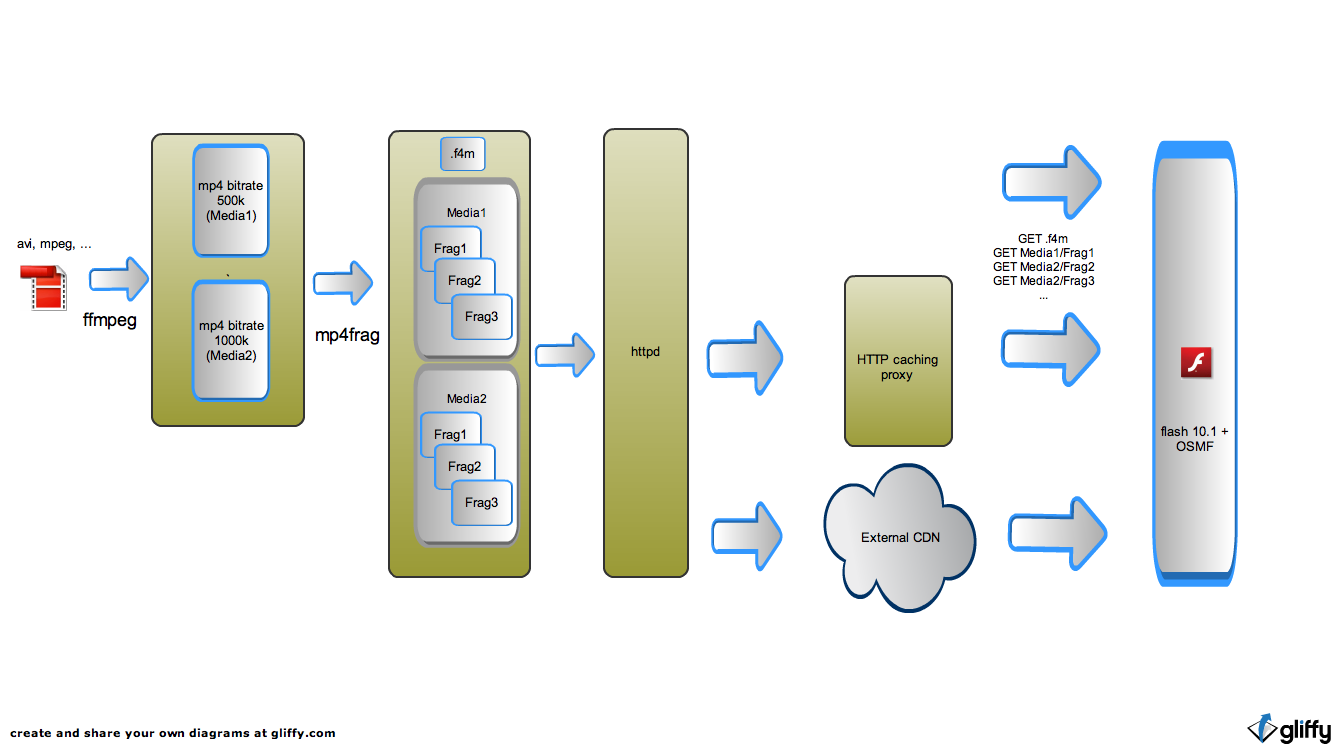


Since the first "Tube" appeared, Internet video technologies have come a long way: they now allow much more, and the requirements for a modern video site have changed accordingly.

The most interesting recent trends, in our opinion, are:

- the ability to watch the same video site from different devices,

- adaptive HTTP streaming technology.

With the recent appearance and rapid spread of mobile devices that are genuinely convenient for using the Internet, and with the development of wireless data technologies, it is clear that a site needs a second version optimized for iOS, Android and other mobile platforms. A third version, optimized for the "10-foot interface", will soon be needed once some WebTV technology, such as GoogleTV, becomes popular.


All of this fits the so-called "three screens" concept: in the near future people will consume video (and Internet content in general) from a mobile phone or tablet on the road, a PC at work, and a TV in the living room at home.

I would like to discuss adaptive HTTP streaming, the subject of this article, in more detail.

By its nature, the Internet is poorly suited to video delivery, especially in real time. On the one hand, we want a smooth, continuous video stream; on the other, we have an unstable connection whose characteristics fluctuate over time. There are two ways to deal with this: increase the delay (the buffer) or adapt the bitrate of the stream.

The main idea of adaptive multi-bitrate streaming is precisely this adaptation: we encode one video at several bitrates and send the user the bitrate we consider feasible at a given moment, based, for example, on an estimate of the current throughput of the user's network and of the decoding speed (i.e. whether the user's computer manages to decode the stream in real time).
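The selection rule described above can be sketched as a small function. This is a hypothetical heuristic for illustration, not the switching logic OSMF actually uses: the name `choose_bitrate`, the 0.8 safety margin and the 10% dropped-frame threshold are assumptions chosen for the example.

```python
from typing import Optional, Sequence


def choose_bitrate(
    available_kbps: Sequence[int],
    throughput_kbps: float,
    dropped_frame_ratio: float = 0.0,
    current_kbps: Optional[int] = None,
    safety_margin: float = 0.8,      # assumption: use only 80% of the measured throughput
    max_dropped_ratio: float = 0.1,  # assumption: >10% dropped frames means decoding can't keep up
) -> int:
    """Return the bitrate (kbit/s) to request next (illustrative heuristic)."""
    ladder = sorted(available_kbps)
    budget = throughput_kbps * safety_margin
    # Network constraint: the highest rendition that fits into the throughput budget,
    # or the lowest rendition if none fits.
    fitting = [b for b in ladder if b <= budget]
    choice = fitting[-1] if fitting else ladder[0]
    # Decoding constraint: if the client drops too many frames at the current
    # rendition, step down below it regardless of the available bandwidth.
    if current_kbps is not None and dropped_frame_ratio > max_dropped_ratio:
        lower = [b for b in ladder if b < current_kbps]
        choice = min(choice, lower[-1] if lower else ladder[0])
    return choice
```

With renditions of 400 and 700 kbit/s, a measured throughput of 1000 kbit/s selects 700, while 600 kbit/s falls back to 400. Keeping a margin below the measured throughput leaves some room for fluctuations before the buffer runs dry.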

This matters first of all for live broadcasts, where it is more important for the user to "keep up with the stream", that is, to minimize the chance of rebuffering, even at the occasional cost of image quality. But the technology is also extremely useful for video-on-demand services: it is nice when a video starts quickly, at the quality the channel allows, and does its best not to stall.

In practice, this means we can significantly improve how the service is perceived not only by users with an unstable or weak connection somewhere deep in the regions, but also by users on Wi-Fi networks, households sharing one connection among several people, and so on. For users with a dedicated high-quality channel, the technology simply determines the channel speed automatically and serves the video at a suitable bitrate, so the end user no longer needs to know what 240p, 360p, SD, HD, etc. mean: everything happens automatically.

The price of all these benefits comes down to three things:

- a more complex streaming setup,

- additional encoding costs,

- additional storage costs.
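To estimate the extra storage, note that a rendition at b kbit/s occupies b × 125 bytes per second of video. The sketch below compares storing only the top rendition with storing both renditions used later in this article (300 or 600 kbit/s of video plus 96 kbit/s of audio, i.e. about 396 and 696 kbit/s). It ignores container overhead, so the figures are approximate; `storage_bytes` is a hypothetical helper.

```python
from typing import Iterable


def storage_bytes(bitrates_kbps: Iterable[int], duration_s: int) -> int:
    """Approximate bytes needed to store all renditions of one video."""
    # 1 kbit/s = 1000 bit/s = 125 bytes/s; container overhead is ignored.
    return sum(kbps * 125 * duration_s for kbps in bitrates_kbps)


one_hour = 3600
single = storage_bytes([696], one_hour)       # only the top rendition
ladder = storage_bytes([396, 696], one_hour)  # both renditions
print(f"single: {single / 1e6:.1f} MB, ladder: {ladder / 1e6:.1f} MB, "
      f"overhead: {100 * (ladder - single) / single:.0f}%")
# prints: single: 313.2 MB, ladder: 491.4 MB, overhead: 57%
```

Encoding time grows in a similar way, since each rendition is a separate encoder run.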

The first of these no longer needs to be a concern, since ready-made open-source building blocks for such systems already exist; I will talk about them later.

The second and third are certainly important, but here the site's developers have to decide for themselves whether their users are worth the extra costs.

At the moment I know of at least three implementations of adaptive multi-bitrate streaming:

- Apple HTTP Adaptive Streaming for iPhone/iPad,

- Microsoft Smooth Streaming for Silverlight,

- Adobe Dynamic Streaming for Flash.

For practical video site development, the most important one is of course Adobe's: Apple HTTP Adaptive Streaming works only on iOS devices and in Safari on Mac OS X (although I was recently shown a set-top box that implemented this protocol), and Silverlight, let's say, has not yet become as popular as Flash.

Adobe has supported Dynamic Streaming over RTMP for quite some time, and only relatively recently (with the release of Flash Player 10.1) did HTTP streaming become possible. This is a very important step: previously, dynamic streaming required a specialized streaming server, but now most of the work has moved to the client, and server support is reduced to serving ordinary static files over HTTP.

In practice, we need to encode the video at several bitrates and then do one of two things. Either we cut it into fragments of, say, 5 seconds each and put them on a fast static file server (see the pictures above), or, if we have a server or streaming module that can cut the required fragments out of mp4 files on the fly, we put the mp4 files on the storage tier and route requests through an efficient cache, which can even be operated by a CDN provider. One of the main advantages of adaptive HTTP streaming is how easily external CDNs and caches can be used: there is no need to pre-fill the cache, because on a cache miss the request is proxied to the origin server and the response is stored in the cache along the way.
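The cache-miss behaviour just described is a read-through cache. A minimal in-memory sketch follows; `ReadThroughCache` and the `fetch_from_origin` callback are hypothetical names standing in for the caching proxy (or CDN) and the origin server.

```python
from typing import Callable, Dict, List


class ReadThroughCache:
    """Serve fragments from memory; on a miss, fetch from the origin and keep the result."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        cached = self._store.get(path)
        if cached is not None:
            self.hits += 1
            return cached
        # Cache miss: proxy the request to the origin and save the response on the way back.
        self.misses += 1
        data = self._fetch(path)
        self._store[path] = data
        return data


# Usage with a fake origin that records which paths it was asked for.
origin_requests: List[str] = []


def fake_origin(path: str) -> bytes:
    origin_requests.append(path)
    return b"fragment bytes for " + path.encode()


cache = ReadThroughCache(fake_origin)
cache.get("/1/Seg1Frag1")  # miss: goes to the origin
cache.get("/1/Seg1Frag1")  # hit: served from the cache
print(cache.hits, cache.misses, origin_requests)  # 1 1 ['/1/Seg1Frag1']
```

Nothing has to be pre-loaded: the first viewer of each fragment warms the cache for everyone after them. This sketch never evicts anything; deciding what to keep is a separate policy.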

Caching is very effective because the proxy cache holds the popular content "sliced" into pieces. These pieces are relatively small, and you can devise various strategies for managing the cache: when to store a piece, when to evict it, and so on. This gives CDN developers more freedom to build optimizations. Back in the day, for the first versions of the Rutube delivery system, we developed a similar piecewise caching mechanism, which for a long time let the project deliver video efficiently with a minimum of hardware.
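As one example of such a strategy (not necessarily the one used for Rutube), here is a sketch of a byte-bounded least-recently-used cache with a simple admission rule: a fragment is stored only on its second request, so one-off requests do not push out popular pieces. `FragmentLRU` and its methods are hypothetical names.

```python
from collections import OrderedDict
from typing import Dict, Optional


class FragmentLRU:
    """Byte-bounded LRU fragment cache with a 'store on second request' admission rule."""

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity = capacity_bytes
        self.used = 0
        self._items: "OrderedDict[str, bytes]" = OrderedDict()
        self._seen: Dict[str, int] = {}  # request counts for fragments not yet admitted

    def get(self, key: str) -> Optional[bytes]:
        data = self._items.get(key)
        if data is not None:
            self._items.move_to_end(key)  # mark as most recently used
        return data

    def offer(self, key: str, data: bytes) -> bool:
        """Call after fetching a fragment from the origin; returns True if it was stored."""
        if key in self._items or len(data) > self.capacity:
            return False
        self._seen[key] = self._seen.get(key, 0) + 1
        if self._seen[key] < 2:
            return False  # first request: do not cache yet
        del self._seen[key]
        # Evict least recently used fragments until the new one fits.
        while self.used + len(data) > self.capacity:
            _, old = self._items.popitem(last=False)
            self.used -= len(old)
        self._items[key] = data
        self.used += len(data)
        return True
```

For brevity the `_seen` counter grows without bound here; a real cache would also have to age or cap it.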

From a programming point of view, to support HTTP Dynamic Streaming Adobe did one simple thing: the NetStream object gained an appendBytes method. Its input is a byte array containing an FLV byte stream. This makes it possible to play an arbitrary byte stream, while delivering that stream becomes a separate task: for example, if you deliver the bytes over HTTP in pieces, you get HTTP streaming.
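To make "a byte stream in FLV format" concrete, here is a sketch of FLV framing: a 9-byte file header followed by a 4-byte PreviousTagSize0, then tags, each made of an 11-byte tag header, the payload, and a 4-byte trailer holding the tag's total size. The helper names are hypothetical, and the payload in the test is a placeholder; building valid audio/video payloads from mp4 samples is a separate step not shown here.

```python
import struct

AUDIO, VIDEO, SCRIPT = 8, 9, 18  # FLV tag types


def flv_file_header(has_audio: bool = True, has_video: bool = True) -> bytes:
    """'FLV' signature, version 1, type flags, header length 9, then PreviousTagSize0 = 0."""
    flags = (0x04 if has_audio else 0) | (0x01 if has_video else 0)
    return b"FLV" + bytes([1, flags]) + struct.pack(">I", 9) + struct.pack(">I", 0)


def flv_tag(tag_type: int, timestamp_ms: int, payload: bytes) -> bytes:
    """Wrap a payload in an FLV tag header and append its PreviousTagSize trailer."""
    size = len(payload)
    header = bytes([tag_type]) + size.to_bytes(3, "big")  # TagType, DataSize (UI24)
    # Timestamp: lower 24 bits first, then the upper 8 bits in TimestampExtended.
    header += (timestamp_ms & 0xFFFFFF).to_bytes(3, "big") + bytes([(timestamp_ms >> 24) & 0xFF])
    header += (0).to_bytes(3, "big")  # StreamID, always 0
    # PreviousTagSize = 11-byte header + payload length.
    return header + payload + struct.pack(">I", 11 + size)
```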

Apple's and Microsoft's systems implement delivery and playback inside their own "black box", whereas Adobe lets you program the system yourself. Adobe has also published an open implementation of such a system as part of its Open Source Media Framework. OSMF is a set of base classes that simplify writing video players in ActionScript 3 and, among other things, implements HTTP Dynamic Streaming support. Moreover, Adobe is trying to make the specifications of this protocol and the details of its implementation public; you can find them here and here. Could you have imagined this earlier, when Flash had no competitors and its future seemed completely cloudless?

So, back to the problem statement: we want to build a video hosting service whose desktop version uses Flash and adaptive HTTP streaming.

How do we build such a system for converting and delivering video?

We will encode to mp4 with the x264 and faac codecs, using ffmpeg and mencoder as tools. We prefer not to use commercial software when free alternatives exist.

At the moment I know of the following implementations of this technology:

- Commercial software. The commercial servers from Adobe and Wowza implement this technology, and OSMF can serve as the basis for the player. This option is probably good, but expensive.

- A free but closed implementation from Adobe itself: a packager that converts source files into a special f4f format, plus an Apache module that serves the packaged files. Downside: it cannot work with mp4 files created by ffmpeg, and since the source code is closed, figuring out why is a problem.

- USP from CodeShop. Pros: an excellent open implementation of the server side. Cons: the client is closed and, in practice, the software is commercial despite being open source: if the site using it is commercial (shows ads or charges for viewing), you need to buy a license.

I work at a company that has been doing Internet video technologies for 10 years, so we have some experience. After studying the documentation for Adobe's HTTP Dynamic Streaming protocols, we decided that for our "Tube" project it would be easier and faster to implement the server side ourselves. The client-side implementation of the protocol in OSMF is open too, under the BSD license.

The result is the OpenHttpStreamer project, which we decided to release as open source under the LGPL.

Official project page: http://inventos.ru/OpenHttpStreamer

Sources are available on GitHub: https://github.com/inventos/OpenHttpStreamer

We have built it on Ubuntu 10.10, Fedora 12 and Debian (Squeeze). Build requirements: scons, g++ 4.3.0 or later, and boost >= 1.39.

How to use it:

```shell
$ git clone https://github.com/inventos/OpenHttpStreamer.git
$ cd OpenHttpStreamer/mp4frag
$ scons configure && scons
$ sudo scons install
```

If everything went well, the compiled static fragment generator is installed as /usr/local/bin/mp4frag. Running it without arguments lists its options:

```shell
$ mp4frag
Allowed options:
  --help                         produce help message
  --src arg                      source mp4 file name
  --video_id arg (=some_video)   video id for manifest file
  --manifest arg (=manifest.f4m) manifest file name
  --fragmentduration arg (=3000) single fragment duration, ms
  --template                     make template files instead of full fragments
  --nofragments                  make manifest only
```

We use ffmpeg to encode any video at two qualities, about 400 and 700 kbit/s in total (300 or 600 kbit/s of video plus 96 kbit/s of audio):

```shell
$ ffmpeg -y -i test.mpg -acodec libfaac -ac 2 -ab 96k -ar 44100 -vcodec libx264 -vpre medium -g 100 -keyint_min 50 -b 300k -bt 300k -threads 2 test-q1.mp4
$ ffmpeg -y -i test.mpg -acodec libfaac -ac 2 -ab 96k -ar 44100 -vcodec libx264 -vpre medium -g 100 -keyint_min 50 -b 600k -bt 600k -threads 2 test-q2.mp4
```
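With more than two qualities it is handy to generate these commands from a list of video bitrates. A sketch, with the hypothetical helper `ffmpeg_commands`, that reproduces the two commands above exactly. The flags are copied as-is and therefore target the ffmpeg builds of that era: current releases no longer include libfaac and use `-preset` instead of `-vpre` for x264.

```python
import shlex
from typing import List, Sequence


def ffmpeg_commands(src: str, video_kbps: Sequence[int], stem: str = "test") -> List[List[str]]:
    """Build one ffmpeg argv per quality, mirroring the flags used in the article."""
    cmds = []
    for i, kbps in enumerate(video_kbps, start=1):
        rate = f"{kbps}k"
        cmds.append([
            "ffmpeg", "-y", "-i", src,
            "-acodec", "libfaac", "-ac", "2", "-ab", "96k", "-ar", "44100",
            "-vcodec", "libx264", "-vpre", "medium",
            "-g", "100", "-keyint_min", "50",  # same GOP settings for every quality
            "-b", rate, "-bt", rate,
            "-threads", "2", f"{stem}-q{i}.mp4",
        ])
    return cmds


if __name__ == "__main__":
    for argv in ffmpeg_commands("test.mpg", [300, 600]):
        print(shlex.join(argv))
```

To actually encode, each argv can be passed to `subprocess.run(argv, check=True)`.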

This gives us two mp4 files, test-q1.mp4 and test-q2.mp4, from which we generate the static fragments:

```shell
$ mp4frag --src test-q1.mp4 --src test-q2.mp4 --manifest=test.f4m
```

The output is a descriptor file (the "manifest"), test.f4m, plus static fragment files in the folders 0/ and 1/.
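The fragment files follow the naming shown in the wget check further on (`1/Seg1Frag1`). Assuming that fragments are numbered consecutively from 1 and each lasts about `--fragmentduration` milliseconds (in reality cuts happen at keyframes, so durations vary), the file holding a given playback position and the number of files per quality can be estimated as follows. Both helpers are hypothetical and not part of mp4frag.

```python
def fragment_path(quality_index: int, position_ms: int, fragment_duration_ms: int = 3000) -> str:
    """Estimate the fragment file containing position_ms (assumes fixed-length fragments)."""
    if position_ms < 0:
        raise ValueError("position must be non-negative")
    frag_number = position_ms // fragment_duration_ms + 1  # assumed numbering starts at 1
    return f"{quality_index}/Seg1Frag{frag_number}"


def fragment_count(duration_ms: int, fragment_duration_ms: int = 3000) -> int:
    """Number of fragments per quality folder; the last one may be shorter."""
    return -(-duration_ms // fragment_duration_ms)  # ceiling division
```

For example, a 10-minute video with the default 3000 ms fragments gives about 200 files in each quality folder, and the position 7.5 s of the first quality falls into `0/Seg1Frag3`.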

We put test.f4m and the 0/ and 1/ folders under the DocRoot of any web server that can serve static files. All that remains is to write a simple Flash player using OSMF as the engine, or to take a ready-made one.
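Whatever player you use, its first step is to read the manifest and find the available renditions. A sketch of that step with Python's standard XML parser: the namespace is the F4M 1.0 one, but the sample manifest is a hand-written, abbreviated illustration (a real one also carries bootstrap information), not actual mp4frag output, and `list_renditions` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

F4M_NS = {"f4m": "http://ns.adobe.com/f4m/1.0"}


def list_renditions(manifest_xml: str) -> List[Tuple[str, int]]:
    """Return (url, bitrate in kbit/s) for every <media> element, lowest bitrate first."""
    root = ET.fromstring(manifest_xml)
    renditions = [
        (media.get("url", ""), int(media.get("bitrate", "0")))
        for media in root.findall("f4m:media", F4M_NS)
    ]
    return sorted(renditions, key=lambda r: r[1])


# Hypothetical, abbreviated manifest used only to exercise the parser.
SAMPLE = """<manifest xmlns="http://ns.adobe.com/f4m/1.0">
  <id>some_video</id>
  <streamType>recorded</streamType>
  <media url="1" bitrate="700" bootstrapInfoId="bootstrap0"/>
  <media url="0" bitrate="400" bootstrapInfoId="bootstrap0"/>
</manifest>"""

print(list_renditions(SAMPLE))  # [('0', 400), ('1', 700)]
```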

For a quick test, you can use our build of the OSMF player.

To do this:

1. Under the DocRoot of your server (next to test.f4m), place the following crossdomain.xml:

```xml
<?xml version="1.0"?>
<!DOCTYPE cross-domain-policy SYSTEM "http://www.adobe.com/xml/dtds/cross-domain-policy.dtd">
<cross-domain-policy>
  <allow-access-from domain="inventos.ru" />
</cross-domain-policy>
```

2. In the browser, open http://inventos.ru/OpenHttpStreamer?url=http://your_http_host/test.f4m

As usual, if something does not work, check your server's logs: does the player actually request crossdomain.xml, test.f4m and the segment files? A convenient way to check that everything is correct is to fetch the required addresses yourself:

```shell
wget -O - -S "http://your_http_host/test.f4m"
wget -O - -S "http://your_http_host/1/Seg1Frag1"
```
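The same checks can be scripted, for example to verify all fragments after publishing. A standard-library sketch; `check_urls` is a hypothetical helper that reports the HTTP status and body size for each path, or `None` as the status if the server could not be reached.

```python
import urllib.error
import urllib.request
from typing import List, Optional, Sequence, Tuple


def check_urls(
    base_url: str, paths: Sequence[str], timeout: float = 10.0
) -> List[Tuple[str, Optional[int], int]]:
    """Fetch each path under base_url and report (path, HTTP status, body size in bytes)."""
    results: List[Tuple[str, Optional[int], int]] = []
    for path in paths:
        url = base_url.rstrip("/") + "/" + path.lstrip("/")
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                results.append((path, resp.status, len(resp.read())))
        except urllib.error.HTTPError as err:
            # The server answered with 4xx/5xx, e.g. a missing fragment file.
            results.append((path, err.code, 0))
        except urllib.error.URLError:
            # No answer at all: wrong host name, server down, connection refused.
            results.append((path, None, 0))
    return results
```

For example, once everything is published, `check_urls("http://your_http_host", ["crossdomain.xml", "test.f4m", "1/Seg1Frag1"])` should report status 200 for all three paths.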

In conclusion, a few words about what is currently in the repository. So far only the static repacker, mp4frag, has been written. A module for nginx is in development: we have already worked out the architecture and algorithms, are actively coding it, and plan to release it within the next week or two. I hope to write more about it then.

We need the dynamic generation module so that we can store content in its original form (mp4 as produced by ffmpeg), since that is a fairly universal format suitable for other purposes (such as streaming to other platforms). An interesting alternative is fragmented mp4, but it is less versatile.

We came up with a simple approach: index the mp4 for quick access to the required fragments and store the index in a separate file next to the content itself. The index is only about 1% of the size of the source file. Even with quick access to the required mdat fragment in the original mp4, we still have to remux the data by adding FLV headers (a peculiarity of the OSMF implementation), so we lose performance compared with static files. However, by proxying requests to this module through a fast web cache and caching the responses, we get both high performance and high versatility.
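The index format is not described here, so the following is only a sketch of the idea under stated assumptions: for each fragment we store its start time, its byte offset in the source mp4 and its length as fixed-size binary records, and find the fragment for a given time with a binary search. The record layout and function names are hypothetical, not OpenHttpStreamer's actual index format.

```python
import bisect
import struct
from typing import List, Tuple

# Hypothetical record: start time (ms), byte offset, byte length; 20 bytes, big-endian.
RECORD = struct.Struct(">QQI")
Entry = Tuple[int, int, int]


def pack_index(entries: List[Entry]) -> bytes:
    """Serialize (start_ms, offset, length) entries, sorted by start time."""
    return b"".join(RECORD.pack(*e) for e in sorted(entries))


def unpack_index(blob: bytes) -> List[Entry]:
    return [RECORD.unpack_from(blob, pos) for pos in range(0, len(blob), RECORD.size)]


def find_fragment(index: List[Entry], position_ms: int) -> Entry:
    """Return the entry with the last start time <= position_ms."""
    starts = [e[0] for e in index]
    i = bisect.bisect_right(starts, position_ms) - 1
    if i < 0:
        raise ValueError("position is before the first fragment")
    return index[i]
```

This only locates a fragment's byte range; remuxing it into FLV tags additionally needs per-sample offsets, sizes and timestamps, which a real index would also have to store.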

Source: https://habr.com/ru/post/110135/
