Infrastructure Blekko: 800 servers, your crawler and Perl modules

The new search engine Blekko started working a month and a half ago and quite naturally attracted the close attention of experts. Not only thanks to the innovative interface and slash tags, but in principle, still today the launch of a new search engine in general profile is a rarity. Few dare to googling with google. Among other things, it requires considerable financial investments.

Let's see what the Blekko infrastructure is about, which Richard Skrent, CEO and CTO Greg Lindahl, told in detail about .

The Blekko data center has about 800 servers, each with 64 GB of RAM and eight SATA disks per terabyte. RAID backup system is not used at all, because RAID controllers greatly reduce performance (from 800 MB / s for eight drives to 300-350 MB / s).

To avoid data loss, developers use a completely decentralized architecture and a number of unusual tricks.

')

First, they developed “search modules” that simultaneously combine the functions of crawling, analysis, and search results. Due to this, their decentralization is preserved in their cluster of 800 servers. All servers are equal among themselves, there are no dedicated specialized clusters, for example, for crawling.

Servers in a decentralized network exchange data, so that at each moment in time a copy of information blocks is contained on three machines. As soon as the disk or server fails, the other servers immediately notice this and begin the process of "curing", that is, additional replication of data from the lost system. This approach, according to Skrenta, is more efficient than RAID.

If the disk fails, the engineer goes to the data center and changes it. With the number of disks about 6400 administrators on duty, probably, do not have much sleep.

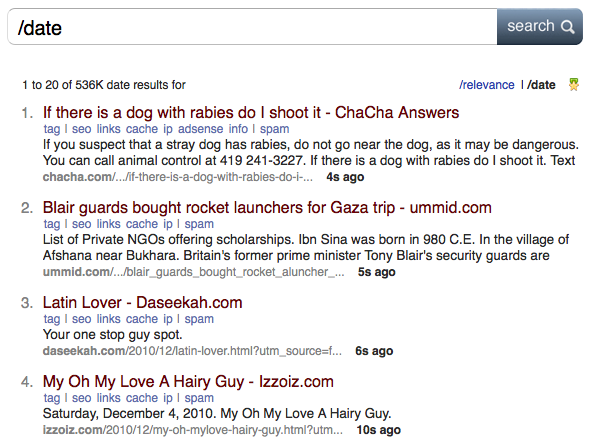

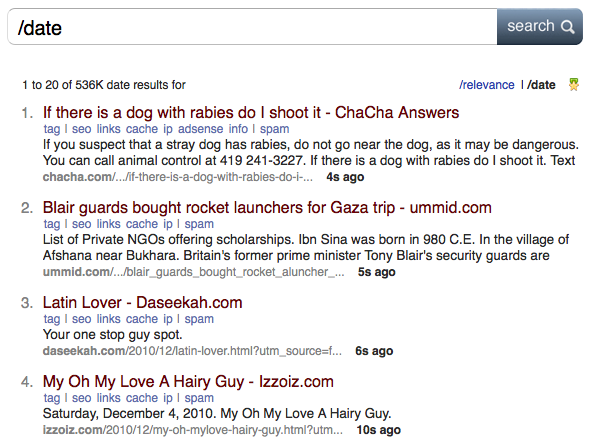

Servers index 200 million web pages per day, and in total there are already 3 billion documents in the index. The update frequency ranges from a few minutes for the main pages of popular news sites to 14 days. This parameter is clearly demonstrated in the search results: the slash tag / date shows which pages were indexed last and how many seconds ago.

You can refresh the page and watch the crawler. It is seen that the addition of new content in the issue occurs at intervals of a few seconds. Even google Caffeine does not provide such speed.

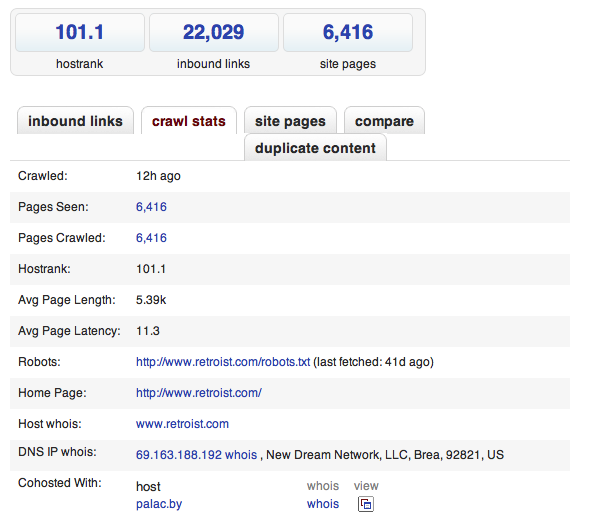

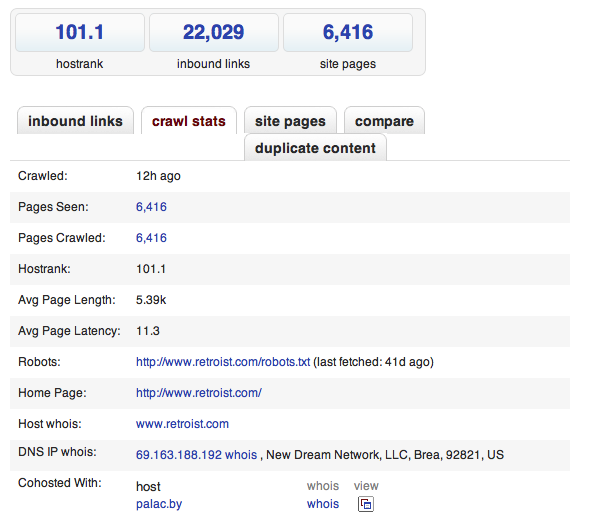

From a technical point of view, they managed to make such an implementation of MapReduce, which works in small iterations and provides an instant display of each iteration. This can be seen if refreshing SEO-page , which is attached to each search result.

The secret to the success of such an extraordinary solution is Perl. The developers say that they are extremely pleased with their choice, there are modules for every taste in the CPAN library, and more than 200 modules are installed on each machine. The servers are CentOS and since they are all the same, you can use the same distribution.

Let's see what the Blekko infrastructure is about, which Richard Skrent, CEO and CTO Greg Lindahl, told in detail about .

The Blekko data center has about 800 servers, each with 64 GB of RAM and eight SATA disks per terabyte. RAID backup system is not used at all, because RAID controllers greatly reduce performance (from 800 MB / s for eight drives to 300-350 MB / s).

To avoid data loss, developers use a completely decentralized architecture and a number of unusual tricks.

')

First, they developed “search modules” that simultaneously combine the functions of crawling, analysis, and search results. Due to this, their decentralization is preserved in their cluster of 800 servers. All servers are equal among themselves, there are no dedicated specialized clusters, for example, for crawling.

Servers in a decentralized network exchange data, so that at each moment in time a copy of information blocks is contained on three machines. As soon as the disk or server fails, the other servers immediately notice this and begin the process of "curing", that is, additional replication of data from the lost system. This approach, according to Skrenta, is more efficient than RAID.

If the disk fails, the engineer goes to the data center and changes it. With the number of disks about 6400 administrators on duty, probably, do not have much sleep.

Servers index 200 million web pages per day, and in total there are already 3 billion documents in the index. The update frequency ranges from a few minutes for the main pages of popular news sites to 14 days. This parameter is clearly demonstrated in the search results: the slash tag / date shows which pages were indexed last and how many seconds ago.

You can refresh the page and watch the crawler. It is seen that the addition of new content in the issue occurs at intervals of a few seconds. Even google Caffeine does not provide such speed.

From a technical point of view, they managed to make such an implementation of MapReduce, which works in small iterations and provides an instant display of each iteration. This can be seen if refreshing SEO-page , which is attached to each search result.

The secret to the success of such an extraordinary solution is Perl. The developers say that they are extremely pleased with their choice, there are modules for every taste in the CPAN library, and more than 200 modules are installed on each machine. The servers are CentOS and since they are all the same, you can use the same distribution.

Source: https://habr.com/ru/post/109949/

All Articles