Amazon's Free Cloud + Ruby on Rails: First Impressions

Since November 2010, Amazon has offered a minimal cloud hosting package for free (for one year, and only for new users). I had long wanted to get a feel for what it is, and the freebie was the last straw. I had never dealt with an entire dedicated server before. I moved to Linux only a few months ago, because of my interest in Ruby on Rails, so I am a textbook example of a curious newbie. I hope this article becomes an ideal entry point into the cloud for newbies like me.

Registering with Amazon Web Services is a completely trivial process. If you have a card that can pay online, you just fill out a form. If not, google something like "Visa Virtuon, e-card, internet card, banks, %your country%", read through the results, and go to the bank. The branch closest to my house was Pravex Bank. The terms there are quite acceptable, although there is no Internet banking. Card service costs $3 per year, and the minimum balance is $5. The card was activated three days later. After an unsuccessful attempt to link it to my account, I had to call the bank's hotline to allow authorization without CVV2. Five minutes later, I launched my first server.

My first impression of AWS: there is too much of everything, and it takes forever to figure out! Dozens of FAQs, manuals, tabs, and new, incomprehensible terms. What helped me most was not jumping between links but skimming this manual. It runs to 269 pages, but much of it can be skipped on a first pass, so don't be intimidated.

The most useful metaphor for understanding what AWS is and how its parts fit together is the data center. Think of the account control panel not as a shared-hosting admin panel or the shell of a dedicated server, but as software that automates an ordinary, non-cloud data center. Instead of calling the admin to reboot a server, install three more identical ones, or upgrade the hardware, you press buttons, and everything happens automatically in a few minutes rather than hours or days.


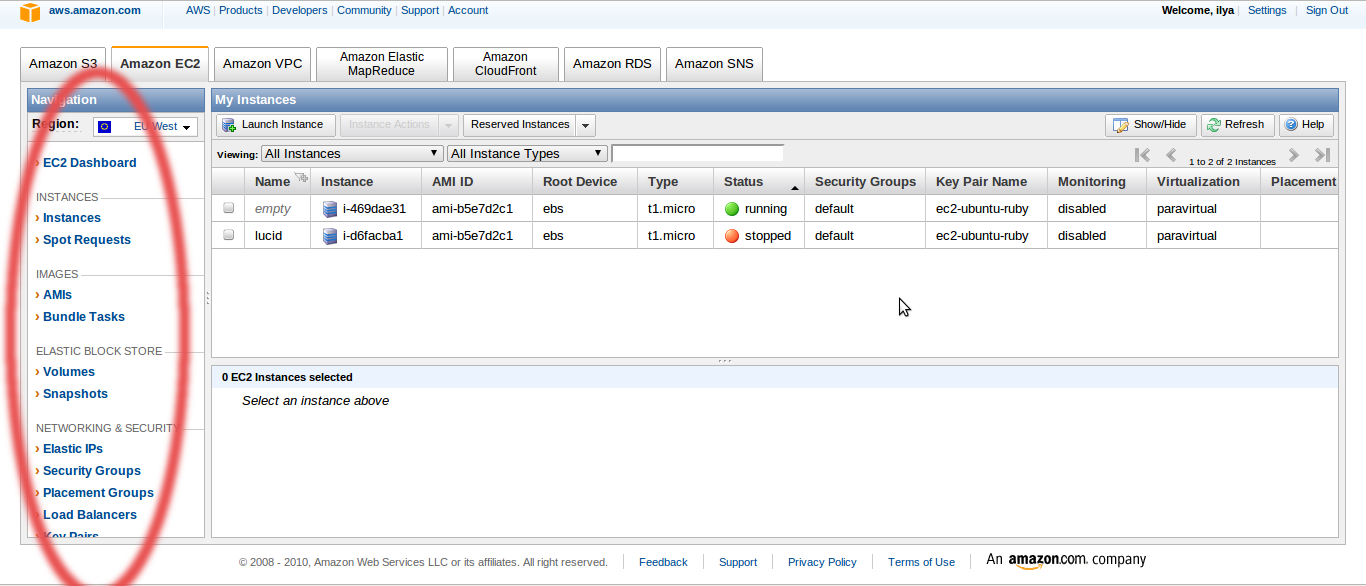

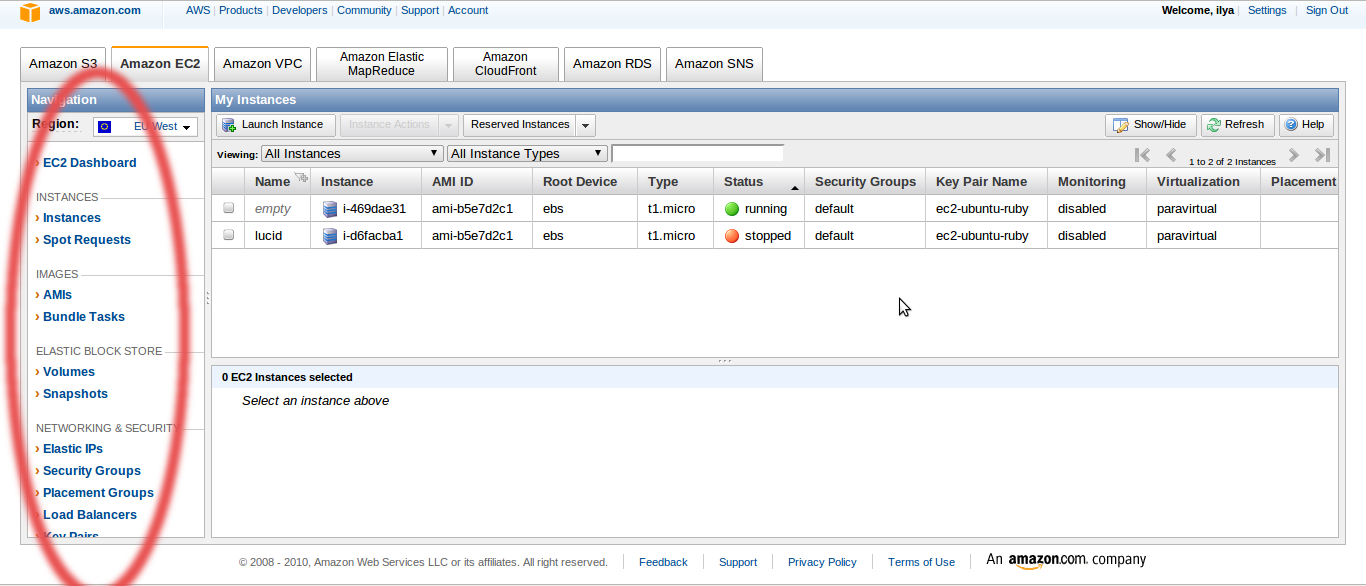

Instances are your server rack. AMIs are ready-made server images that can be deployed in your data center. Volumes are hard drives that can be plugged into any of the servers. Snapshots are backup copies of those drives. Security Groups are firewalls, each of which can shield one or more servers.
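To connect the data-center metaphor to the command line, here is a minimal sketch that maps each concept to the ec2-api-tools command that lists it. The concept names (rack, images, disks, backups, firewalls) and the `run`/`describe` helpers are hypothetical. With `DRY_RUN=1`, the script only prints each command, so nothing is sent to AWS.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Map each piece of the "data center" to the ec2-api-tools command that lists it.
describe() {
  case "$1" in
    rack)      run ec2-describe-instances ;;          # Instances: servers in the rack
    images)    run ec2-describe-images -o self ;;     # AMIs registered by you
    disks)     run ec2-describe-volumes ;;            # EBS volumes: the hard drives
    backups)   run ec2-describe-snapshots -o self ;;  # snapshots of your volumes
    firewalls) run ec2-describe-group ;;              # Security Groups
    *)         echo "unknown concept: $1" >&2; return 1 ;;
  esac
}
```

For example, `DRY_RUN=1 describe disks` prints `ec2-describe-volumes`. Drop `DRY_RUN` to actually query your account.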

How does it all work? The central object of all this cloud abundance is the Amazon Machine Image, or AMI. AMIs come in two types: S3-backed and EBS-backed. An EBS-backed AMI is an image of a virtual machine with a virtual hard drive, the EBS volume. An S3-backed AMI is a machine without a drive. It is a heavy legacy of the days when Amazon had no EBS yet and all files had to be stored on S3. An S3-backed server cannot be shut down (stop) in the evening and turned back on (start) the next morning. It can only be destroyed (terminate), and a new one created (launch) if needed. All data not saved to S3 is lost. I didn't dig into it deeply, because an EBS-backed AMI is much easier to work with. From the user's point of view, it is no different from a real machine standing somewhere in the next room.
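The stop-versus-terminate distinction boils down to a small decision. An EBS-backed instance (root device type `ebs` in ec2-describe-images output) can be stopped and started again. An `instance-store` one can only be terminated. A minimal sketch follows; the function names and the i-12345678 style IDs are hypothetical placeholders, and `DRY_RUN=1` prints commands instead of executing them.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# $1 = root device type ("ebs" or "instance-store"), $2 = instance ID
evening_shutdown() {
  case "$1" in
    ebs)
      run ec2-stop-instances "$2" ;;   # the EBS disk survives the night
    instance-store)
      echo "$2 cannot be stopped, only terminated (data not on S3 is lost)" >&2
      return 1 ;;
    *)
      echo "unknown root device type: $1" >&2
      return 2 ;;
  esac
}

# Only makes sense for a stopped EBS-backed instance.
morning_startup() {
  run ec2-start-instances "$1"
}
```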

Amazon hosts hundreds of server images to suit every taste, with virtually any server operating system and plenty of pre-installed software combinations. I chose the official image of bare Ubuntu Server 10.04. Technically, these AMIs are Xen paravirtual machines. In principle, you can build such an image from scratch. For me, though, the server is only a Ruby on Rails testbed, so I didn't waste my time, and everything works fine as it is. Before you start, install the ec2-api-tools package on your local machine: `sudo apt-get install ec2-api-tools`.

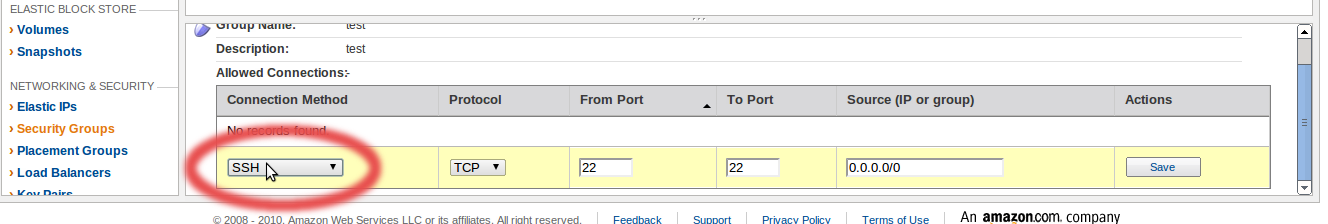

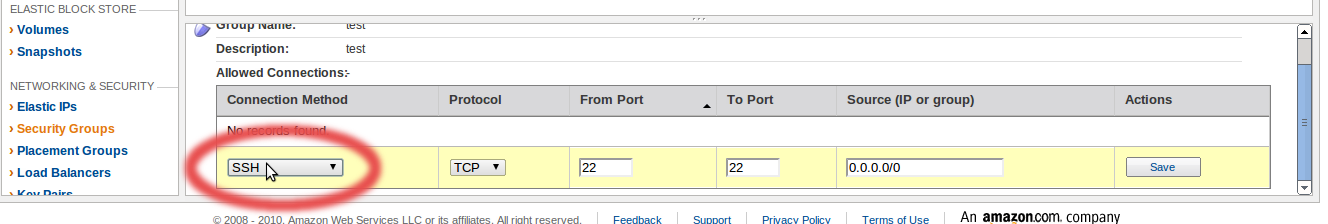

The server is managed over SSH as the user ubuntu. The external IP address and domain name are shown in the console panel and in the output of the ec2-describe-instances command. For newbies, the easiest way to get keys is to generate them in the AWS Management Console and save them on the local machine. Command-line Jedi may prefer to generate the keys locally and upload them to Amazon with the usual incantation. By default, the firewall (Security Group) blocks everything, so before trying to connect you need to open port 22. If you have a static IP, you can enter it in the Source (IP or group) field for extra security. To stop the machine when leaving the SSH session, just run `sudo poweroff`, and it goes into the stopped state. If you want it to keep running, type `exit`.
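The console steps above have command-line equivalents in ec2-api-tools. This is a minimal sketch with placeholder values: a security group named default, a key file mykey.pem saved from the console, and 203.0.113.5 standing in for your static IP. The helper names are hypothetical, and `DRY_RUN=1` prints the commands.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Open SSH (tcp/22) in a security group. $1 = group, $2 = optional static IP.
# Without $2 the port is open to the whole Internet.
open_ssh() {
  if [ -n "${2:-}" ]; then
    run ec2-authorize "$1" -P tcp -p 22 -s "$2/32"
  else
    run ec2-authorize "$1" -P tcp -p 22 -s 0.0.0.0/0
  fi
}

# Log in as the ubuntu user. $1 = private key file, $2 = public DNS name
# from ec2-describe-instances. ssh refuses keys readable by others, hence chmod 600.
login() {
  run chmod 600 "$1"
  run ssh -i "$1" "ubuntu@$2"
}
```

Restricting the source to a single address (`/32`) is the command-line counterpart of filling in the Source field in the console.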

The first pitfall to watch out for right away: the free package includes a 10 GB EBS volume, while standard Amazon AMIs come with a 15 GB disk by default. Meanwhile, the bare system takes up less than 1 GB. The Internet is full of articles on how to enlarge an EBS volume, but there isn't a single sensible one on how to shrink it. The usual advice is to rsync the whole disk onto a new, smaller volume, but after that my system wouldn't boot. I found the solution somewhere in the comments of a blog. And this is where it cost me money! It was the first time in my life I had used dd. Of course, I forgot to specify the block size (the default is only 512 bytes). I did have the sense to realize that copying the system files plus 9.3 GB of zeros would take a very long time, so I shrank the file system to 1 GB. But I didn't think to tell dd about it. dd doesn't care about file systems, so it stopped only when it reached the end of the 10 GB partition. Ten gigabytes in 512-byte chunks is no joke, and the free monthly I/O allowance for EBS volumes is only 1,000,000 requests. On top of that, in my hurry to launch the second server, I forgot to change the standard AMI's default instance type (m1.small; see below for what that means) to t1.micro, and that isn't free either. Total: 22 cents for the dd run and 10 cents per hour of m1.small time.
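The dd mistake is easy to quantify. This minimal sketch of the arithmetic assumes, as the story suggests, that each dd block becomes roughly one EBS I/O request (EBS's actual accounting may differ). Copying the whole 10 GB volume (treated here as 10 GiB) in the default 512-byte blocks takes over twenty million requests, against the 1,000,000 free ones. Copying only the shrunken 1 GiB file system in 1 MiB blocks takes about a thousand. The `requests` helper and the device names in the comments are hypothetical.

```shell
# Number of dd blocks (roughly, I/O requests) needed to copy $1 bytes
# in $2-byte blocks, rounded up.
requests() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

MiB=$((1024 * 1024))
GiB=$((1024 * MiB))

requests $((10 * GiB)) 512   # whole volume, default block size: 20971520
requests "$GiB" "$MiB"       # only the 1 GiB file system, 1 MiB blocks: 1024

# On the server, the bounded copy would look something like this
# (/dev/sdf = old volume, /dev/sdg = new volume; both names are placeholders):
#   sudo dd if=/dev/sdf of=/dev/sdg bs=1M count=1024
# after which the file system can be grown to fill the new volume:
#   sudo resize2fs /dev/sdg
```

The `count` argument is what tells dd to stop once the shrunken file system has been copied, instead of running to the end of the device.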

After these manipulations, I saved my own AMI with a 10 GB disk (one click in the instance's context menu). Creating an AMI automatically takes a snapshot of the system. In fact, an AMI is just a snapshot registered as a virtual machine, with an identifier of the form ami-xxxxxxxx. EBS volumes are billed for their full size regardless of how much data they actually hold. Snapshots, by contrast, are stored compressed and taken incrementally, so they take up very little space on S3. You can launch several instances from one AMI, and each gets its own disk. So to keep application data such as a MySQL database in one place, you would need a separate server right away. That costs money, though, so for now you can put MySQL on the same machine as the web server. If you do get a dedicated database server, you don't even have to bother with setup: Amazon has a ready-made one. Another important point: when a machine is created, an EBS volume is created with it. When the machine is destroyed (terminate, not stop!), the volume is deleted by default. That's logical: if the server is thrown out of the rack, its disk goes too. After all, every EBS volume costs money whether the machine is running or not. But if you wish, you can take the disk out of the server and keep it.
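Both ideas from this paragraph can be done from ec2-api-tools: saving your own AMI, and keeping data on a disk that outlives the server. This is a minimal sketch with placeholder IDs and the us-east-1a zone. It relies on the fact that a volume created on its own and attached afterwards is, by default, not deleted when the instance is terminated, unlike the root volume created at launch. The helper names are hypothetical, and `DRY_RUN=1` prints the commands.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Save a running EBS-backed instance as your own AMI (this also takes a snapshot).
# $1 = instance ID, $2 = image name
save_image() {
  run ec2-create-image "$1" -n "$2"
}

# Create a separate data disk (e.g. for MySQL) in the instance's availability zone.
# $1 = size in GiB, $2 = zone; ec2-create-volume prints the new vol-... ID.
new_data_volume() {
  run ec2-create-volume -s "$1" -z "$2"
}

# Plug the disk into the server as /dev/sdf. $1 = volume ID, $2 = instance ID
attach_data_volume() {
  run ec2-attach-volume "$1" -i "$2" -d /dev/sdf
}

# Back up the data disk. $1 = volume ID
backup_data_volume() {
  run ec2-create-snapshot "$1"
}
```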



Now a bit more about what m1.small and t1.micro are. Here is a list of all available configurations. t1.micro has one tiiiiny nuance. Unlike all other types, a micro instance's CPU resources are variable. When left alone, it gets twice as much CPU as m1.small, but under load it quickly tires and drops through the floor. So you shouldn't overuse cron, convert video, or host projects with peak loads above a few dozen requests per minute on it: it will choke. In practice it runs briskly for the first few seconds, which is more than enough to process any request to the site, or even several at once. Compiling the Ruby interpreter, however, took a very, very long time. Small packages like Midnight Commander or small gems install at lightning speed, and the web server runs three times faster than on my ThinkPad X100e. In my opinion, that's quite a reasonable compromise. People have run benchmarks and report that, although micro instances slow down, per dollar they are about one and a half times better value than small instances.
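The default type for a standard AMI is m1.small, which caused the accidental charge in the dd story above. It is therefore safer to always pass the instance type explicitly. This is a minimal sketch with placeholder AMI, key and group names. The `INSTANCE_TYPE` override and the `launch` helper are hypothetical.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Launch an instance, defaulting to t1.micro rather than m1.small.
# $1 = AMI ID, $2 = key pair name, $3 = security group
launch() {
  run ec2-run-instances "$1" -t "${INSTANCE_TYPE:-t1.micro}" -k "$2" -g "$3"
}
```

For a CPU-heavy one-off such as compiling Ruby, `INSTANCE_TYPE=m1.small launch ...` would deliberately pick the steadier (and paid) type for that run only.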

Having dealt with the server hardware and the system, let's move on to Ruby on Rails. RVM and I never really got along. At first I couldn't install it, then some gems misbehaved, so on my local machine I installed Ruby 1.9.2-p0 from source. I did the same here. Along the way, I finally got around to collecting everything needed to install Rails 3 + Ruby 1.9.2 + MySQL on Ubuntu 10.04:

```shell
# Build dependencies, MySQL server and client libraries
sudo apt-get install libxml2 libxml2-dev libxslt1-dev gcc g++ build-essential libssl-dev libreadline5-dev zlib1g-dev linux-headers-generic libsqlite3-dev mysql-server libmysqlclient-dev libmysql-ruby

# Download and build Ruby 1.9.2-p0 from source into /usr/local/ruby
wget ftp://ftp.ruby-lang.org/pub/ruby/1.9/ruby-1.9.2-p0.tar.gz
tar -xvzf ruby-1.9.2-p0.tar.gz
cd ruby-1.9.2-p0/
./configure --prefix=/usr/local/ruby
make && sudo make install

# Put Ruby on the PATH (add this line to ~/.bashrc as well)
export PATH=$PATH:/usr/local/ruby/bin
sudo ln -s /usr/local/ruby/bin/ruby /usr/local/bin/ruby
sudo ln -s /usr/local/ruby/bin/gem /usr/bin/gem

# Skip ri/rdoc documentation when installing gems
echo "gem: --no-ri --no-rdoc" > $HOME/.gemrc

# Rails 3 and its dependencies, plus the SQLite and MySQL drivers
sudo gem install tzinfo builder memcache-client rack rack-test erubis mail text-format bundler thor i18n sqlite3-ruby mysql2 rack-mount rails

# Check the installed versions
ruby -v
rails -v
```

Since I would have a real production server, I assumed I'd have to install Apache, Nginx or Lighttpd, followed by a Mongrel cluster, as the clever Rails books say. Not WEBrick, after all, on a genuine production server! I braced myself to suffer. But Phusion Passenger, as it turned out, is not just a passenger riding on Apache and friends: in standalone mode it bundles nginx. In fact, `sudo gem install passenger` is all you need to know. It finds the application by itself and runs it without any fiddling with configs. The user manual is touchingly concise. DTSTTCPW (Do The Simplest Thing That Could Possibly Work), indeed!
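With Passenger installed, serving the app in standalone mode is a single command run from the application directory. This minimal sketch assumes a hypothetical app path and port 3000, which would also have to be opened in the Security Group, like port 22. The `serve`/`unserve` helpers are hypothetical; `passenger start` and `passenger stop` with `-p`, `-e` and `-d` are Passenger Standalone options.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Start Passenger Standalone (with its bundled nginx) as a background daemon.
# $1 = Rails application directory, $2 = port.
# The subshell keeps the caller's working directory unchanged.
serve() {
  ( cd "$1" && run passenger start -e production -p "$2" -d )
}

# Stop the daemon started on port $2 in application directory $1.
unserve() {
  ( cd "$1" && run passenger stop -p "$2" )
}
```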

What's the result? I'm now gradually moving from clumsy clicking around Amazon's web interface to ec2-api-tools. There's basically nothing complicated here: ec2-describe-instances, ec2-run-instances, ec2-start-instances, ec2-stop-instances, ec2-terminate-instances, ec2-describe-volumes, ec2-create-volume, ec2-delete-volume, and so on. The instructions describe it all sensibly. Amazon's price calculator shows that the free package would cost about 20 dollars a month if it were paid. You get your own dedicated server, plus the ability to experiment with a data center of two dozen servers, firewalls, load balancers and other goodies for a pittance. You can launch any operating system available as a public AMI in a couple of minutes and try it out live. You can spin up a cluster with dozens of cores and hundreds of gigabytes of memory in just a few minutes for only a few dollars per hour. In short, I'm satisfied!
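Moving from the web interface to ec2-api-tools mostly means chaining these commands in scripts. One example is this minimal sketch, which stops every instance in the account at the end of the day. It assumes that, as in the tools' usual tab-separated output, each instance line starts with the INSTANCE keyword followed by the instance ID. The helper names are hypothetical.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Read ec2-describe-instances output on stdin and print one instance ID per line.
instance_ids() {
  awk '$1 == "INSTANCE" { print $2 }'
}

# Stop every listed instance (only EBS-backed ones can actually be stopped).
stop_all() {
  ids=$(ec2-describe-instances | instance_ids)
  [ -n "$ids" ] || return 0   # nothing to do
  # $ids is left unquoted on purpose so each ID becomes a separate argument.
  run ec2-stop-instances $ids
}
```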

upd: When I created an AMI from a snapshot, I ran into this glitch: the instance seemed to start, but I couldn't connect to it. At the end of the system log there were several lines like this:

```
modprobe: FATAL: Could not load /lib/modules/2.6.16-xenU/modules.dep: No such file
```

It turns out that when registering an AMI, you must specify the kernel explicitly, something like this:

```shell
ec2-register -n image_name -d image_description --root-device-name /dev/sda1 -b /dev/sda1=snap-XXXXXXXX::false --kernel aki-XXXXXXXX
```

How do you know which kernel image you need? Start the original, known-good public instance you built your server from, and look up the kernel ID in the output of the ec2-describe-instances command.
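Looking up the kernel can also be scripted. This minimal sketch assumes the aki-... ID appears in the ec2-describe-instances output of the known-good instance as an 8-hex-digit identifier, as IDs of that era did. `kernel_of` is a hypothetical helper. ec2-get-console-output is the command-line way to read the same system log where the modprobe error showed up.

```shell
# Print the first kernel ID (aki-xxxxxxxx) found in ec2-describe-instances output on stdin.
kernel_of() {
  grep -o 'aki-[0-9a-f]\{8\}' | head -n 1
}

# Usage with placeholder IDs:
#   KERNEL=$(ec2-describe-instances i-XXXXXXXX | kernel_of)
#   ec2-register -n image_name -d image_description --root-device-name /dev/sda1 \
#       -b /dev/sda1=snap-XXXXXXXX::false --kernel "$KERNEL"
#   ec2-get-console-output i-YYYYYYYY | grep modprobe   # check the new instance's log
```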

Source: https://habr.com/ru/post/109045/
