Auto-increment primary keys (surrogate keys) = evil?

In this article, I will give a look (negative for the most part) by Josh Burkus, CEO of PostgreSQL Experts Inc. to use surrogate keys for database tables, the very INT NOT NULL AUTO_INCREMENT PRIMARY KEY to which we are used. In fact, it will be a free, heavily abridged translation of his article on ittoolbox .

The article will be followed by analysis of my own mistakes on this topic, made in one old project. I was young and stupid, but that does not excuse me.

Honestly, after reading this article and not noticing who the author is, I thought that he was still exaggerating and, in general, I would somehow figure out without him where and what keys I should use. Then I thought a little more and climbed over the dump of the structure of the base of my old project. It was interesting.

')

If you are an experienced DBA, you probably should pass by so you don’t get upset.

But first things first. First VERY abbreviated translation:

“Surrogate numeric keys fell into the SQL89 standard for storing with old applications that required line numbers. Subsequently, in a conversation with Joe Selco , Codd said he regretted that he had allowed it.

Inexperienced developers, not realizing that using surrogate keys is a pragmatic compromise with performance considerations, use them everywhere. Even the authors of books on databases advise you to create them in all tables in any case.

In the theory of relational databases there is no concept of primary keys. All database keys have the same significance. The concept of a primary key is based on the idea that one and only one key determines the order of tuples on a disk, and the relational theory tells us that this is what we should ignore in the logical model of our data. So, primary keys in general are a violation of the relational theory.

I'm not saying that surrogate keys cannot be used at all, I am talking about the fact that they cannot be abused.

What reasons might encourage us to use surrogate keys?

Compromise with multicolumn keys . Usually, pretty convincing. The syntax of SQL queries using multi-column keys and the connection mechanism currently leaves much to be desired, as well as the performance of queries of this kind. As soon as these problems are resolved, this reason will disappear.

The data does not have a real key . Very bad reason. Its appearance illustrates how bad the design of the database as a whole is, and the fact that the developer does not really understand the data with which he works.

External requirements . Usually convincing. As a rule, development environments and database tools support only surrogate keys. And if you think that this tool is indispensable in the problem that you decide, well ...

Data consistency . Usually convincing. But only if you really scrupulously follow the plan and your entire design is carefully planned.

Following the SQL standard and good design principles . Very bad reason. It is completely based on ignorance. Usually, it is followed because somewhere you heard someone read on a blog someone who studies at UNIVERSITY that using surrogate keys is a standard in the industry. Keep in mind that neither modern SQL standards, nor the relational theory itself even contains references to surrogate keys.

The possibility of easy change . It is not clear. Indeed, some DBMS do not know how to perform ON UPDATE CASCADE or do it too inefficiently (by the way, think of it as the reason for changing the DBMS). And in this case, this reason may be weighty. However, sometimes developers say that the keys [primary] for recording should not change and should remain the same throughout the entire life cycle of a recording. Keep in mind that this statement is not worth a jigger and, of course, is completely absent in relational theory.

Performance . Usually a bad reason. Yes, indeed, there may be situations in which the use of natural keys greatly slows down the operation of the system compared to surrogate ones. But in 80% of cases the real tests are not supported by real tests, and such an assertion remains unfounded. Pre-optimization is the root of many ills in database design.

For mega-volume databases, the resulting table size can also be important. But for this the base should be very large.

The performance of joins or sorting also matters on a large amount of data, depending on the type of primary key and the number of its components. However, my experience shows that when they call this reason, real calculations or performance measurements very rarely stand behind it. For example, www.bricolage.cc has been using 14-byte numeric primary keys for its tables for many years. However, in this case, after the appearance of a user with a three million record in history, when the question arose of changing primary keys for the sake of performance, this problem was solved by rewriting queries. An approximately 10-fold increase in productivity was achieved.

Please note that problems are caused not by the use of surrogate keys, but by their abuse. ”

The end of my VERY REDUCED translation. The original is here (Primary Keyvil is called): it.toolbox.com/home/search.aspx?r=%22Primary+kevill%22&community=1&contentType=5

If I miss something important in translation, please tell me about it. I will add.

Yet the article seemed to me a bit dramatic problem. It seems to me that surrogate keys are chosen more and more often precisely because of the fact that to avoid performance problems later and lately everyone is so used to them that they are implanted at the level of the DBMS itself. For example, InnoDB, if you do not create a primary key, simply create it yourself. By the way, in the case of InnoDB, the choice of a primary key has serious consequences from the point of view of performance, since clustering is performed on it (accordingly, choosing a natural key can both improve and worsen the situation).

Despite the fact that the article sounds as though the surrogate keys are the essence of evil, the author stresses several times that the problem is not their use, but their abuse.

This article opened my eyes in the sense that I had always found it natural not to look for specific candidates for primary keys, but simply to create an INT NOT NULL AUTO_INCREMENT PRIMARY KEY field and sit still. Of course, I knew that you could choose any unique key as the primary key, but I never focused on it. I never really thought about what really makes a given database row unique and why it matters. As it turned out, nothing.

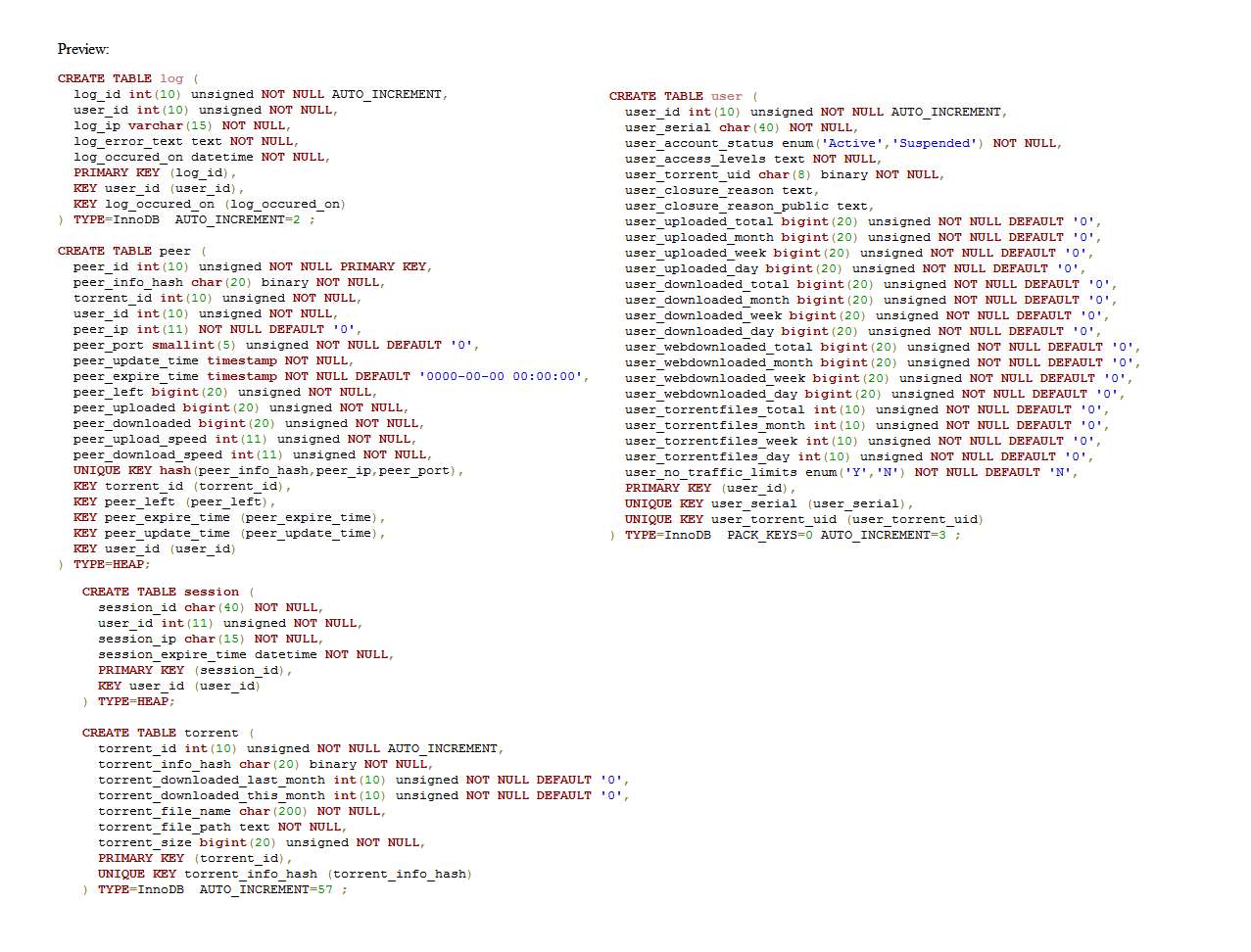

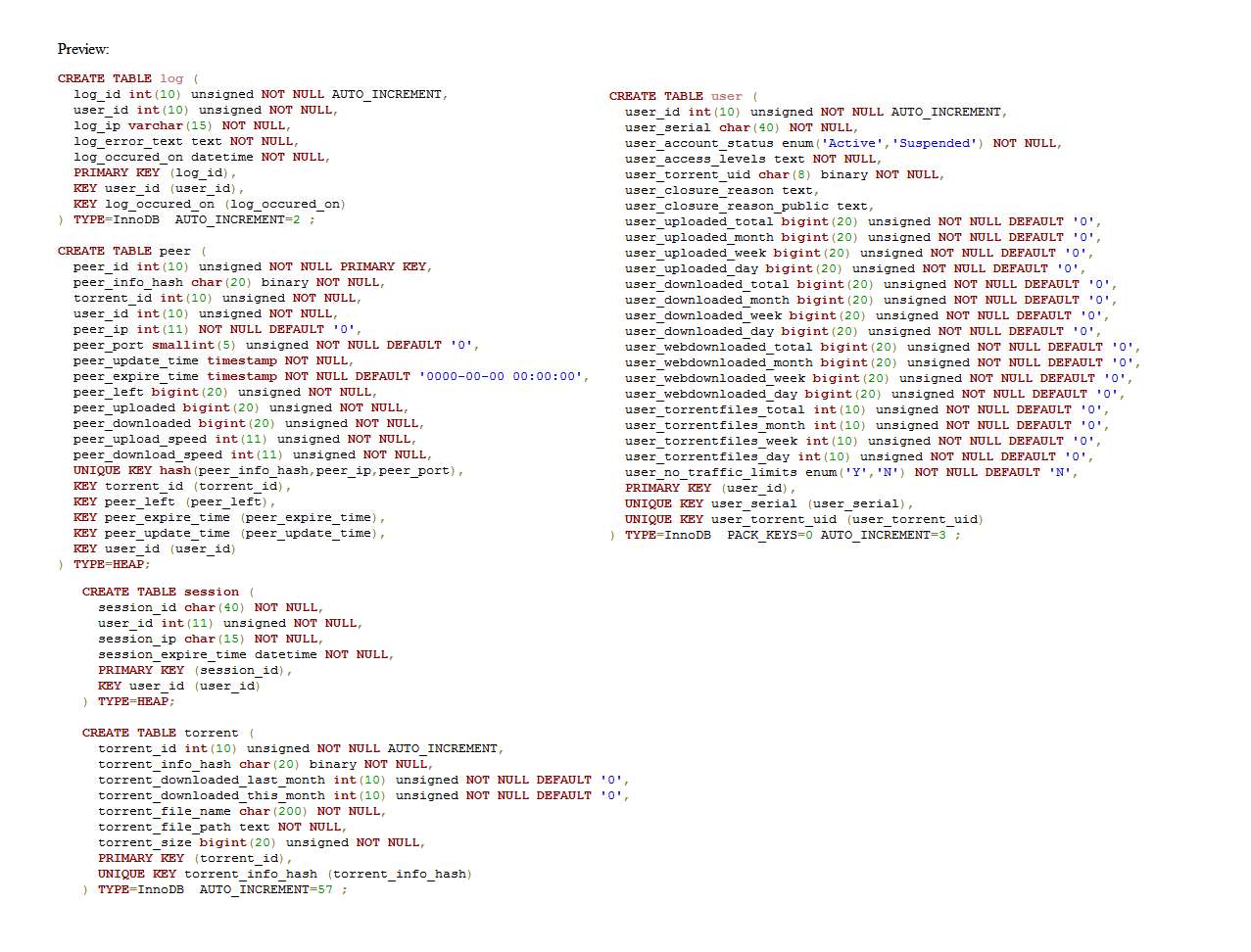

As an example, I want to give you my little old project. There are only a few tables. At first I wanted to choose something bigger, but I think that this is unnecessary. Only in vain will take your time. Let everyone open their own old project and look at it from the point of view of the position described. I actually added one mistake there now for the sake of justice. I would have done it anyway. I was saved only by chance.

The project is some kind of closed torrent tracker. I ask you not to pay attention now to problems with normalization and all the others. If I wrote it now, maybe something I would have done differently. Let's focus on surrogate keys.

pastebin.com/LstH8Xfx

The first table I would like to talk about is the log table. Generally, it was this case that dumbfounded me a little bit, because I suddenly saw an error. Very small, not worth much attention, but, nevertheless, this is a mistake that I have not noticed for many, many years. Did not notice at all. Take a break from the text now and return to the structure of this table. See you I did not see.

This table contains simple information. IP, user ID, the date of the event and its text. Yes, of course, the text could be replaced with code and a lot more could be done, but this is not about that now. After reading the article, I looked at this table and thought that, so I created a surrogate key. But what is the real data key? What makes a particular table row unique?

The answer is very simple. The combination of user ID and the time the event occurred. And here I suddenly saw the situation from the other side. In almost all my old projects, the DATETIME field is used to store time in logs. Just because it is convenient. Yes, I knew that it was stored up to a second and it suited me completely. Now, when I began to look for natural keys, it suddenly occurred to me what consequences this would have. The torrent tracker in question is heavily loaded and a lot can happen within a second. In fact, if there are several events with the same time in the log with this damn surrogate key and they happened one after another very quickly, I can tell which of them happened first, and which last one only guided by the surrogate key autoincrement. The date information field itself, which was created precisely to report such things, will not help me in any way. And I cannot exactly figure out the interval between events at all.

In general, this, of course, does not matter. The probability that I will need to figure out the interval between two events, which in any case is less than a second, is very small. But I always consider all my projects, both old and new, as educational. The project could be a little different, and it could become important.

I want to say that the consideration of the problem from the point of view of the search for a natural key is a somewhat different look. Try to look at the design of your project in this way and see what is revealed.

It seems that my explanation turned out to be muddled. I hope, nevertheless, I managed to convey to you my thought.

Now the table is peer. She already has a unique key that simply asks for the primary role. Many hundreds of inserts / deletes per second are made to the peer table and it’s just expensive to keep an extra index in the form of a primary key. So I eliminated it.

Session table For some reason, I did not rely on PHP sessions completely, but partially implemented my own. The random key is used as the primary key of this table. Not only is it just silly to use 40-character random sequences, so it’s not really necessary here at all. What acts as a natural key for entries in this table? In this project, the user was not allowed to be logged in from several computers at the same time. Um user_id? Everything else in relation to this value is secondary. I will not analyze now what follows from this simple statement. A lot of things up to the removal of the session table and implementation of another mechanism. There are many options here.

We turn to the table torrent.

A small digression for you to imagine the subject of discussion. The torrent tracker, which I developed, was at the same time the first file for the files that were distributed. The torrent table kept information about the files that were stored. These files were in the server file system, the corresponding .torrent files were created for them according to the scheme one file = one torrent, which were downloaded by users. Each torrent has a so-called info_hash, which uniquely identifies it .

This field in the peer table is called peer_info_hash. And in the torrent table this field is torrent_info_hash. torrent_id is superfluous there. Totally. Please note that the peer torrent_id table is also there. It is not clear why.

Well, the user table. It would seem that here I just could not make mistakes. Was wrong.

In the authorization system, torrent trackers use the GET parameter with a unique value for the user. In the table, this value is user_torrent_uid. Here ask me, who prevented to use this value as a natural key in one way or another? Yes, it can change. In a very rare case. So what? If 8 bytes is too long, you could take the usual random INT and convert it to text, like smart people do on Flickr. It was possible ... Yes, a lot of things could be.

Like this. Everything is obvious, isn't it? :)

The article will be followed by analysis of my own mistakes on this topic, made in one old project. I was young and stupid, but that does not excuse me.

Honestly, after reading this article and not noticing who the author is, I thought that he was still exaggerating and, in general, I would somehow figure out without him where and what keys I should use. Then I thought a little more and climbed over the dump of the structure of the base of my old project. It was interesting.

')

If you are an experienced DBA, you probably should pass by so you don’t get upset.

But first things first. First VERY abbreviated translation:

“Surrogate numeric keys fell into the SQL89 standard for storing with old applications that required line numbers. Subsequently, in a conversation with Joe Selco , Codd said he regretted that he had allowed it.

Inexperienced developers, not realizing that using surrogate keys is a pragmatic compromise with performance considerations, use them everywhere. Even the authors of books on databases advise you to create them in all tables in any case.

In the theory of relational databases there is no concept of primary keys. All database keys have the same significance. The concept of a primary key is based on the idea that one and only one key determines the order of tuples on a disk, and the relational theory tells us that this is what we should ignore in the logical model of our data. So, primary keys in general are a violation of the relational theory.

I'm not saying that surrogate keys cannot be used at all, I am talking about the fact that they cannot be abused.

What reasons might encourage us to use surrogate keys?

Compromise with multicolumn keys . Usually, pretty convincing. The syntax of SQL queries using multi-column keys and the connection mechanism currently leaves much to be desired, as well as the performance of queries of this kind. As soon as these problems are resolved, this reason will disappear.

The data does not have a real key . Very bad reason. Its appearance illustrates how bad the design of the database as a whole is, and the fact that the developer does not really understand the data with which he works.

External requirements . Usually convincing. As a rule, development environments and database tools support only surrogate keys. And if you think that this tool is indispensable in the problem that you decide, well ...

Data consistency . Usually convincing. But only if you really scrupulously follow the plan and your entire design is carefully planned.

Following the SQL standard and good design principles . Very bad reason. It is completely based on ignorance. Usually, it is followed because somewhere you heard someone read on a blog someone who studies at UNIVERSITY that using surrogate keys is a standard in the industry. Keep in mind that neither modern SQL standards, nor the relational theory itself even contains references to surrogate keys.

The possibility of easy change . It is not clear. Indeed, some DBMS do not know how to perform ON UPDATE CASCADE or do it too inefficiently (by the way, think of it as the reason for changing the DBMS). And in this case, this reason may be weighty. However, sometimes developers say that the keys [primary] for recording should not change and should remain the same throughout the entire life cycle of a recording. Keep in mind that this statement is not worth a jigger and, of course, is completely absent in relational theory.

Performance . Usually a bad reason. Yes, indeed, there may be situations in which the use of natural keys greatly slows down the operation of the system compared to surrogate ones. But in 80% of cases the real tests are not supported by real tests, and such an assertion remains unfounded. Pre-optimization is the root of many ills in database design.

For mega-volume databases, the resulting table size can also be important. But for this the base should be very large.

The performance of joins or sorting also matters on a large amount of data, depending on the type of primary key and the number of its components. However, my experience shows that when they call this reason, real calculations or performance measurements very rarely stand behind it. For example, www.bricolage.cc has been using 14-byte numeric primary keys for its tables for many years. However, in this case, after the appearance of a user with a three million record in history, when the question arose of changing primary keys for the sake of performance, this problem was solved by rewriting queries. An approximately 10-fold increase in productivity was achieved.

Please note that problems are caused not by the use of surrogate keys, but by their abuse. ”

The end of my VERY REDUCED translation. The original is here (Primary Keyvil is called): it.toolbox.com/home/search.aspx?r=%22Primary+kevill%22&community=1&contentType=5

If I miss something important in translation, please tell me about it. I will add.

Now a little about what I myself think.

Yet the article seemed to me a bit dramatic problem. It seems to me that surrogate keys are chosen more and more often precisely because of the fact that to avoid performance problems later and lately everyone is so used to them that they are implanted at the level of the DBMS itself. For example, InnoDB, if you do not create a primary key, simply create it yourself. By the way, in the case of InnoDB, the choice of a primary key has serious consequences from the point of view of performance, since clustering is performed on it (accordingly, choosing a natural key can both improve and worsen the situation).

Despite the fact that the article sounds as though the surrogate keys are the essence of evil, the author stresses several times that the problem is not their use, but their abuse.

This article opened my eyes in the sense that I had always found it natural not to look for specific candidates for primary keys, but simply to create an INT NOT NULL AUTO_INCREMENT PRIMARY KEY field and sit still. Of course, I knew that you could choose any unique key as the primary key, but I never focused on it. I never really thought about what really makes a given database row unique and why it matters. As it turned out, nothing.

As an example, I want to give you my little old project. There are only a few tables. At first I wanted to choose something bigger, but I think that this is unnecessary. Only in vain will take your time. Let everyone open their own old project and look at it from the point of view of the position described. I actually added one mistake there now for the sake of justice. I would have done it anyway. I was saved only by chance.

The project is some kind of closed torrent tracker. I ask you not to pay attention now to problems with normalization and all the others. If I wrote it now, maybe something I would have done differently. Let's focus on surrogate keys.

Database structure

pastebin.com/LstH8Xfx

The first table I would like to talk about is the log table. Generally, it was this case that dumbfounded me a little bit, because I suddenly saw an error. Very small, not worth much attention, but, nevertheless, this is a mistake that I have not noticed for many, many years. Did not notice at all. Take a break from the text now and return to the structure of this table. See you I did not see.

This table contains simple information. IP, user ID, the date of the event and its text. Yes, of course, the text could be replaced with code and a lot more could be done, but this is not about that now. After reading the article, I looked at this table and thought that, so I created a surrogate key. But what is the real data key? What makes a particular table row unique?

The answer is very simple. The combination of user ID and the time the event occurred. And here I suddenly saw the situation from the other side. In almost all my old projects, the DATETIME field is used to store time in logs. Just because it is convenient. Yes, I knew that it was stored up to a second and it suited me completely. Now, when I began to look for natural keys, it suddenly occurred to me what consequences this would have. The torrent tracker in question is heavily loaded and a lot can happen within a second. In fact, if there are several events with the same time in the log with this damn surrogate key and they happened one after another very quickly, I can tell which of them happened first, and which last one only guided by the surrogate key autoincrement. The date information field itself, which was created precisely to report such things, will not help me in any way. And I cannot exactly figure out the interval between events at all.

In general, this, of course, does not matter. The probability that I will need to figure out the interval between two events, which in any case is less than a second, is very small. But I always consider all my projects, both old and new, as educational. The project could be a little different, and it could become important.

I want to say that the consideration of the problem from the point of view of the search for a natural key is a somewhat different look. Try to look at the design of your project in this way and see what is revealed.

It seems that my explanation turned out to be muddled. I hope, nevertheless, I managed to convey to you my thought.

Now the table is peer. She already has a unique key that simply asks for the primary role. Many hundreds of inserts / deletes per second are made to the peer table and it’s just expensive to keep an extra index in the form of a primary key. So I eliminated it.

Session table For some reason, I did not rely on PHP sessions completely, but partially implemented my own. The random key is used as the primary key of this table. Not only is it just silly to use 40-character random sequences, so it’s not really necessary here at all. What acts as a natural key for entries in this table? In this project, the user was not allowed to be logged in from several computers at the same time. Um user_id? Everything else in relation to this value is secondary. I will not analyze now what follows from this simple statement. A lot of things up to the removal of the session table and implementation of another mechanism. There are many options here.

We turn to the table torrent.

A small digression for you to imagine the subject of discussion. The torrent tracker, which I developed, was at the same time the first file for the files that were distributed. The torrent table kept information about the files that were stored. These files were in the server file system, the corresponding .torrent files were created for them according to the scheme one file = one torrent, which were downloaded by users. Each torrent has a so-called info_hash, which uniquely identifies it .

This field in the peer table is called peer_info_hash. And in the torrent table this field is torrent_info_hash. torrent_id is superfluous there. Totally. Please note that the peer torrent_id table is also there. It is not clear why.

Well, the user table. It would seem that here I just could not make mistakes. Was wrong.

In the authorization system, torrent trackers use the GET parameter with a unique value for the user. In the table, this value is user_torrent_uid. Here ask me, who prevented to use this value as a natural key in one way or another? Yes, it can change. In a very rare case. So what? If 8 bytes is too long, you could take the usual random INT and convert it to text, like smart people do on Flickr. It was possible ... Yes, a lot of things could be.

Like this. Everything is obvious, isn't it? :)

Source: https://habr.com/ru/post/107834/

All Articles