Here be dragons: Memory management in Windows as it is [2/3]

It turns out that long opuses have to be split up. And here I thought multi-part posts were published purely to farm rating :-)

I will pick up right where the previous part broke off; writing a separate introduction for each episode is beyond my powers.

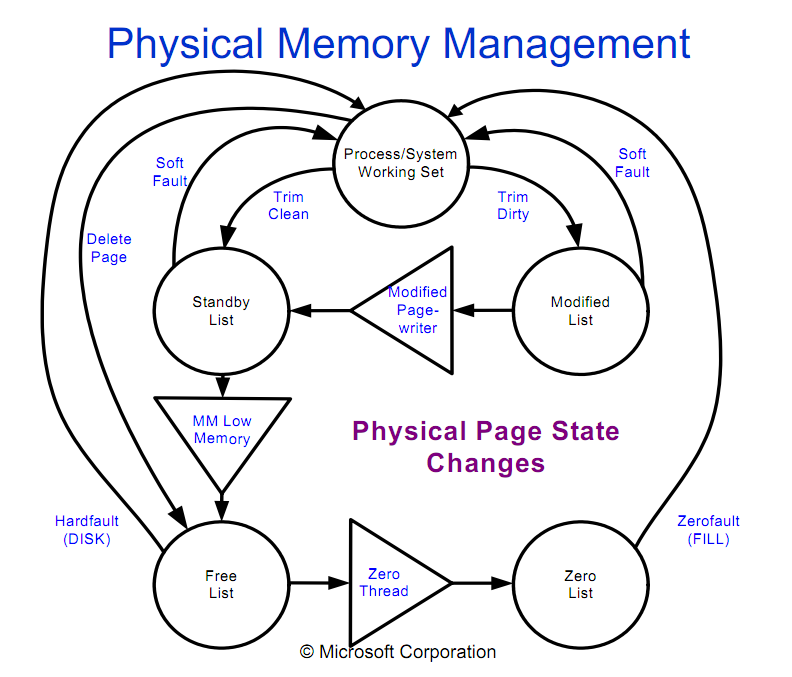

A little about how pages get from working sets onto these lists.

They get there in different ways. One has already been covered: someone explicitly calls EmptyWorkingSet for the process. That happens rarely. Usually it happens through so-called trimming.

When the system approaches one of its configured free-memory thresholds, it starts freeing memory. First, it finds the processes that exceed their working set limit by the largest margin. For those processes it runs page aging to determine which pages are used least. The oldest pages are then trimmed to the standby or modified list.
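The trimming steps above can be sketched as a toy model. This is only an illustration under stated assumptions, not how the Windows memory manager is implemented: the `Page` and `Process` classes, the `accessed` flag and the age counter are hypothetical names, and the model assumes the usual rule that dirty pages go to the modified list while clean ones go to standby.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    number: int
    age: int = 0             # aging passes since the page was last seen accessed
    accessed: bool = False   # stand-in for the hardware "accessed" bit
    dirty: bool = False      # dirty pages must be written out before reuse


@dataclass
class Process:
    name: str
    limit: int                                   # working set limit, in pages
    working_set: list = field(default_factory=list)


def age_pages(proc):
    """One aging pass: accessed pages become young again, the rest get older."""
    for page in proc.working_set:
        page.age = 0 if page.accessed else page.age + 1
        page.accessed = False


def trim(processes, pages_needed, standby, modified):
    """Trim the oldest pages, starting with the process furthest over its limit."""
    by_excess = sorted(processes,
                       key=lambda p: len(p.working_set) - p.limit,
                       reverse=True)
    for proc in by_excess:
        excess = len(proc.working_set) - proc.limit
        if pages_needed <= 0 or excess <= 0:
            break
        age_pages(proc)
        proc.working_set.sort(key=lambda pg: pg.age)   # oldest pages end up last
        for _ in range(min(excess, pages_needed)):
            page = proc.working_set.pop()
            (modified if page.dirty else standby).append(page)
            pages_needed -= 1
    return pages_needed   # how many pages could not be freed


# Hypothetical scenario: "hog" is 3 pages over its limit, "modest" is under it.
hog = Process("hog", limit=2, working_set=[Page(n) for n in range(5)])
hog.working_set[0].accessed = True    # recently used, should survive
hog.working_set[4].dirty = True       # will land on the modified list
modest = Process("modest", limit=4, working_set=[Page(n) for n in range(100, 103)])

standby, modified = [], []
left = trim([modest, hog], 2, standby, modified)
```

In this scenario only `hog` loses pages: the recently accessed page stays in its working set, and `modest` is not touched because it is within its limit.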

From the same lecture:

The diagram also shows a Delete page transition, which happens when the application simply frees allocated memory. Nothing more can be done with the contents of those pages: by all the rules they are no longer valid, and even if new memory is later allocated at the same address, it must be fresh memory.

Pagefile

Finally, I have reached everyone's favorite: the page file. Dragons roam here in herds. Well, let's try to drive them out.

Myth: To improve performance, you need to reduce the number of accesses to the page file.

In fact: To improve performance, you need to reduce the number of accesses to the DISK. The page file is a file almost like any other.

Myth: Windows uses the page file even when there is still plenty of free memory.

In fact: Pages can reach the page file only from the modified list, and they reach the modified list when rarely used pages are trimmed from overgrown applications. After a page has been written out, it stays on the standby list, so if it is needed again it does not have to be reread from disk. Memory is never taken from the standby list while free or zeroed pages remain (that is, cached data is never thrown away while there are still pages with no data at all). The standby list has 8 priority levels, which to some extent can be managed both by the application itself and by Superfetch, which adjusts page priorities dynamically based on an analysis of actual file and page usage. When there is no other choice, Windows first throws out the cached data with the lowest priority.
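The order in which memory is handed out can be illustrated with a small model. It is a sketch of the rule described above, not of the real data structures: the `PhysicalMemory` class and its methods are hypothetical, free and zeroed pages are merged into one pool, and page names are just strings.

```python
from collections import deque


class PhysicalMemory:
    """Toy model: a free pool plus a standby list split into 8 priority levels."""

    def __init__(self, free_pages):
        self.free = deque(range(free_pages))          # pages holding no data
        self.standby = [deque() for _ in range(8)]    # index = priority 0..7

    def cache(self, page, priority):
        """Put a clean page with useful data on the standby list."""
        self.standby[priority].append(page)

    def allocate(self):
        """Return (page, source); cached data is given up only as a last resort."""
        if self.free:
            return self.free.popleft(), "free"
        for priority, pages in enumerate(self.standby):   # lowest priority first
            if pages:
                return pages.popleft(), f"standby[{priority}]"
        raise MemoryError("no free or standby pages left")


# Hypothetical scenario: one free page and two cached pages.
mem = PhysicalMemory(free_pages=1)
mem.cache("iso-chunk", priority=2)
mem.cache("powershell.exe", priority=5)
results = [mem.allocate() for _ in range(3)]
```

The three allocations come first from the free pool, then from standby level 2, and only then from level 5, so the higher-priority cached data survives longest.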

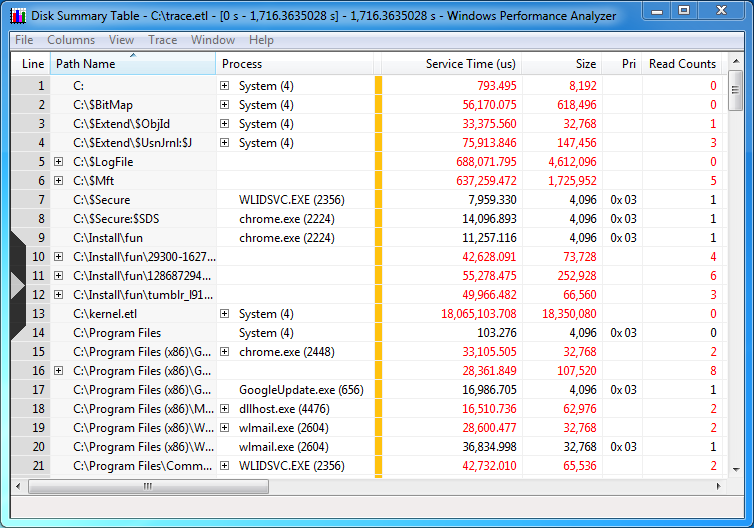

Here is an example from half an hour of ordinary work:

Note that the files are sorted by name, so pagefile.sys would have appeared between kernel.etl and “Program Files” if it had been accessed at all.

Myth: But Windows itself reports that it is using the page file.

In fact: See above. Most often this refers to Commit Charge, which can be called “usage”, but not at all in the sense in which it is commonly understood. In most cases, when there is no need to free memory, private pages (the ones that would be written to the page file) sit on the modified list (if they get there at all) almost indefinitely.

Myth: Disabling the page file improves system performance.

In fact: In rare cases, an application that misuses memory and does not lower the page priority of its threads can reduce responsiveness. In most cases, though, performance only improves when unused “junk” is moved out to the closet and the freed space is used for more relevant data (thereby reducing the total number of disk accesses).

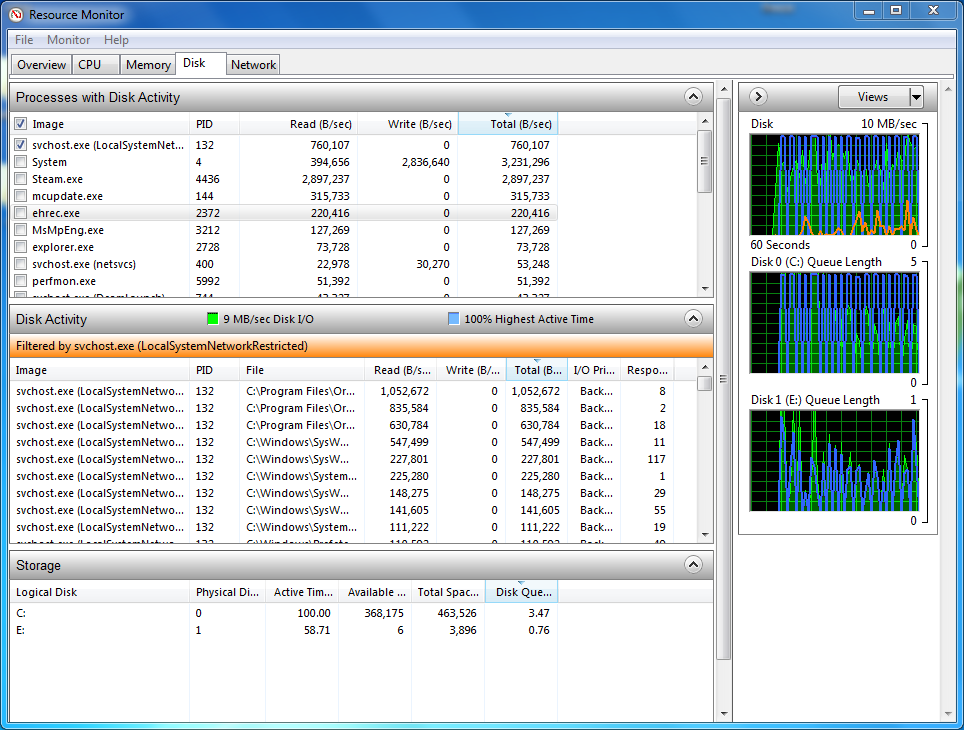

Memory and I/O Priorities

The lower the I/O priority of the thread that does the reading or writing, the less it interferes with normal work. In general, as with thread priorities, round robin is used among the pending operations with the highest priority, while a small share of the bandwidth is given to low-priority operations to prevent starvation (a term that has no good Russian translation). Run the built-in defragmenter: Resource Monitor will show 100% disk load, yet if the system loses any responsiveness, I cannot notice it by eye.

I have already mentioned memory priorities. Independently of the I/O priority, there is a page priority. Each physical page in the system (or, more precisely, the data it holds at a given moment) has a priority. When a page is moved to the standby list, it lands on one of eight separate lists according to its priority. Higher-priority data cannot be displaced by lower-priority data.
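The anti-starvation idea can be shown with a deliberately simplified dispatcher. Everything here is an assumption made for illustration: the `dispatch` function, the two-queue layout and the "every Nth slot" rule are hypothetical and only mimic the behavior described above, where low-priority I/O gets a small guaranteed share instead of waiting forever.

```python
from collections import deque


def dispatch(normal, low, low_share=10):
    """Yield requests in service order.

    Normal-priority requests go first, but every `low_share`-th slot is
    reserved for a low-priority request so that it cannot starve.
    """
    normal, low = deque(normal), deque(low)
    slot = 0
    while normal or low:
        slot += 1
        reserved = slot % low_share == 0
        if low and (reserved or not normal):
            yield low.popleft()
        else:
            yield normal.popleft()


# Hypothetical workload: six foreground reads and two defragmenter requests.
foreground = [f"n{i}" for i in range(6)]
background = ["defrag0", "defrag1"]
order = list(dispatch(foreground, background, low_share=4))
```

With `low_share=4` the background requests land in slots 4 and 8, so the foreground work is barely delayed while the defragmenter still makes progress.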

Prefetch and Superfetch

Prefetch appeared in XP and is a way to speed up application launch. Here is a utility that lets you view the contents of .pf files. Essentially, they contain the names and offsets of the files that the application accesses during the first few (10, it seems) seconds after launch. Since a launch is often accompanied by frantic reading from disk, ordering these reads reduces the number of head seeks and thereby speeds up the launch. It runs as part of the SysMain service. Besides Prefetch and Superfetch, this service is also responsible for ReadyBoot (which helped speed up booting in one of the previous posts), ReadyBoost (a pretty useless thing on systems with plenty of RAM, but very useful on low-end systems) and ReadyDrive (ReadyBoost with a guarantee that the “flash drive” will not be removed between reboots; it greatly speeds up basic operations without fully replacing the HDD with an SSD, by using so-called hybrid drives, or H-HDDs: SSD speed at an HDD price).

With Superfetch, things are a bit more complicated. Few people understand its purpose, but everyone tries to blame this service for using memory while idle and for reading “something” from the disk. Let's start with the reading:

It is performed at background priority and is almost imperceptible in use. If the blinking LED is annoying, it can be covered with tape. More importantly, this reading fills the cache with useful data (you can watch standby grow after boot or wake-up even though the user performs no active disk operations). Because this data is loaded now, at low priority and in a well-ordered way, it will later save a few seconds and a lot of frantic head movement when an application starts. This mostly concerns loading data at priority levels 6-7 (yes, low-priority I/O is used to load pages into the high-priority standby levels), from which it can be pushed out only in the most extreme case, when memory cannot be found anywhere else.
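Why ordering the reads helps can be seen from simple arithmetic on head movement. The sketch below is a simplification: it treats the disk as a single line of offsets, the `total_seek_distance` helper and the sample offsets are hypothetical, and the real prefetcher works with files and pages rather than raw positions.

```python
def total_seek_distance(offsets, start=0):
    """Sum of head movements needed to visit the offsets in the given order."""
    distance, position = 0, start
    for offset in offsets:
        distance += abs(offset - position)
        position = offset
    return distance


# Hypothetical offsets touched during an application launch, in request order.
launch_reads = [900, 100, 700, 200, 800, 300]

unordered = total_seek_distance(launch_reads)          # 3900
ordered = total_seek_distance(sorted(launch_reads))    # 900
```

The same six reads cost more than four times less head travel once they are issued in offset order, which is the effect a recorded launch trace makes possible.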

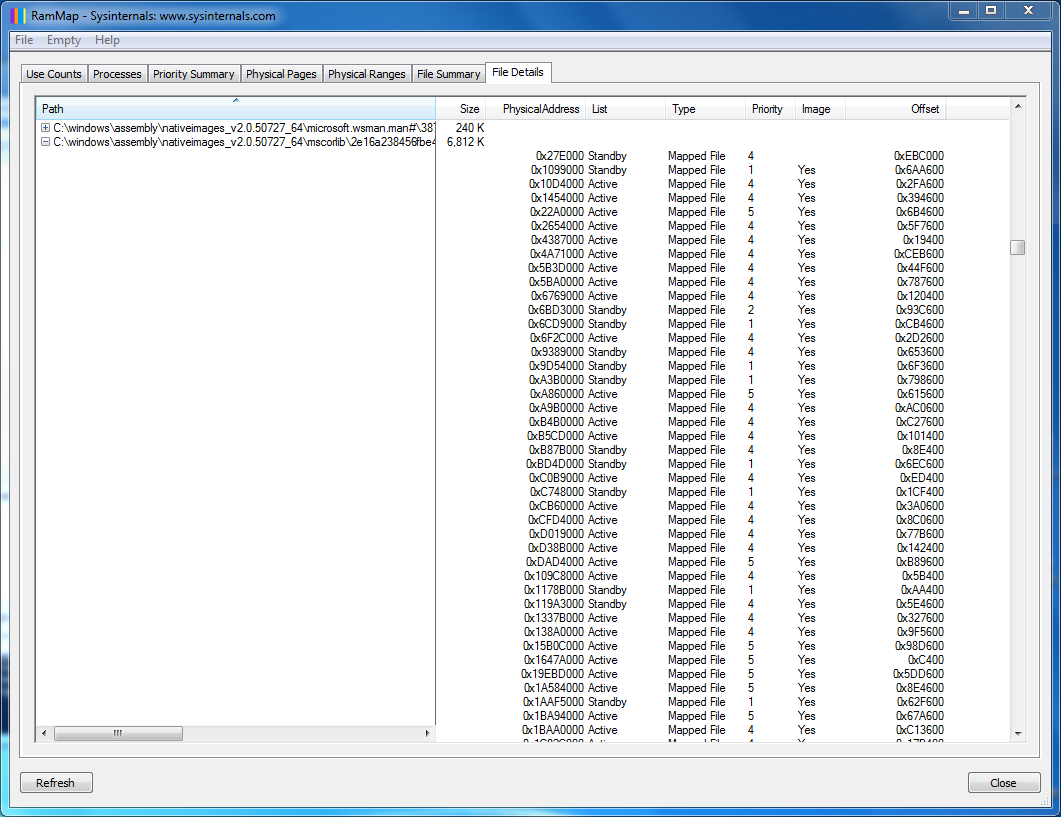

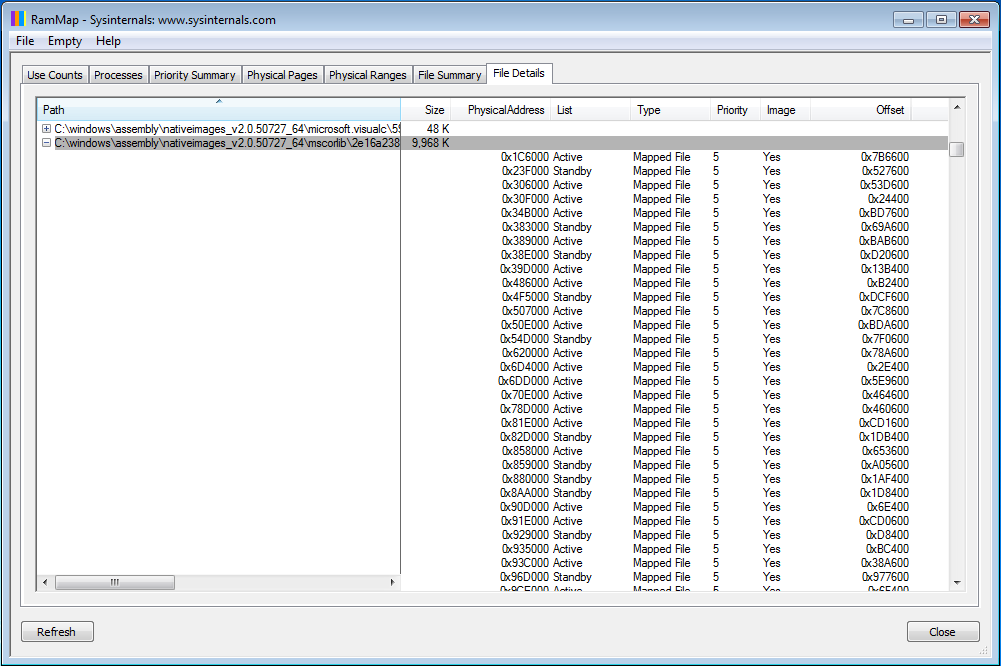

This service uses memory to store the history of disk and memory-page accesses. Periodically (when idle) it allocates more in order to analyze this data and build a long-term plan of action (as well as a boot plan for the next boot). In addition, it dynamically redistributes page priorities. For example, here is what happens on my home machine, where I often use .NET (in the form of PowerShell):

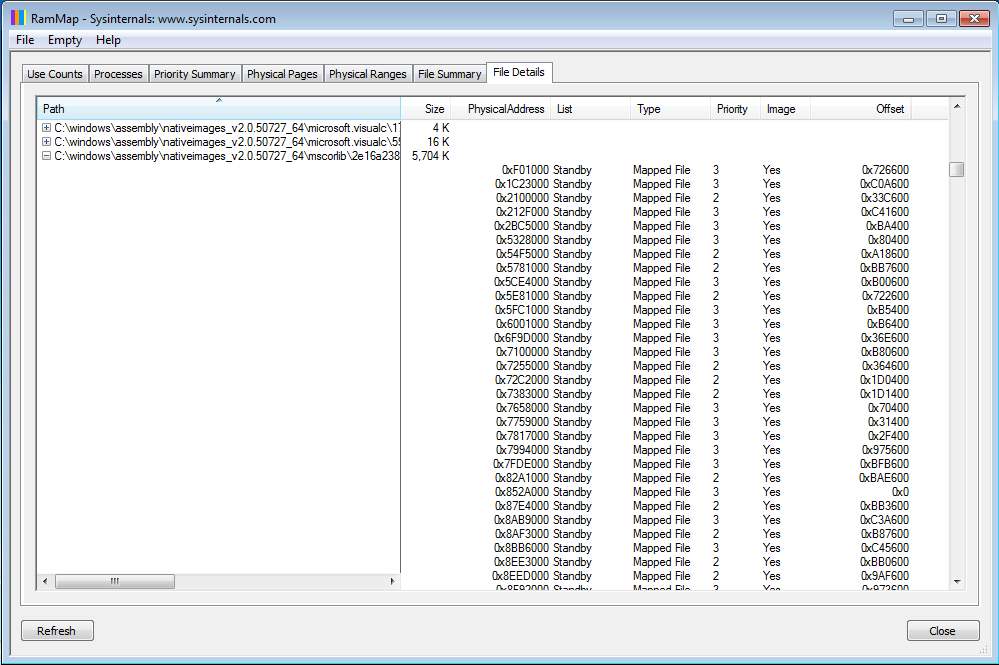

The list there contains pages with priorities from 1 to 6 (7 is a special priority that never changes). And here is a freshly installed virtual machine, where PowerShell was run for the first time in its life a few seconds earlier:

Superfetch does not yet know what to do with this file, so all pages landed at the level corresponding to the priority of the thread that loaded the file. And this is how the cache of the same file looks on the same virtual machine after several hours of inactivity (with the window simply minimized to the taskbar):

As you can see, all pages have dropped in priority.

Copying large files

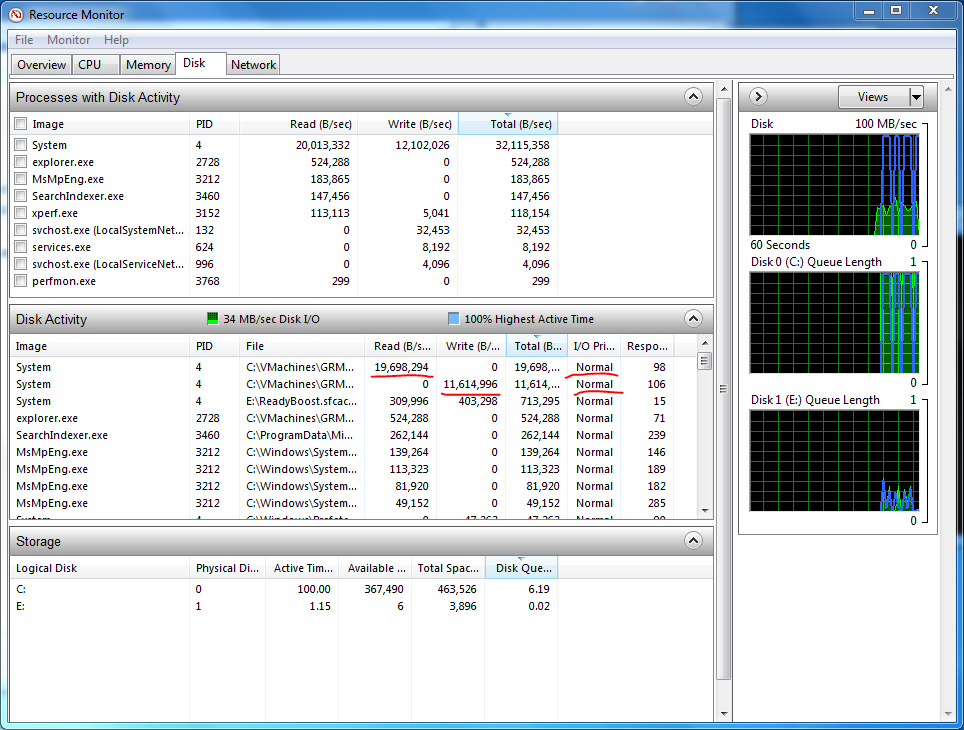

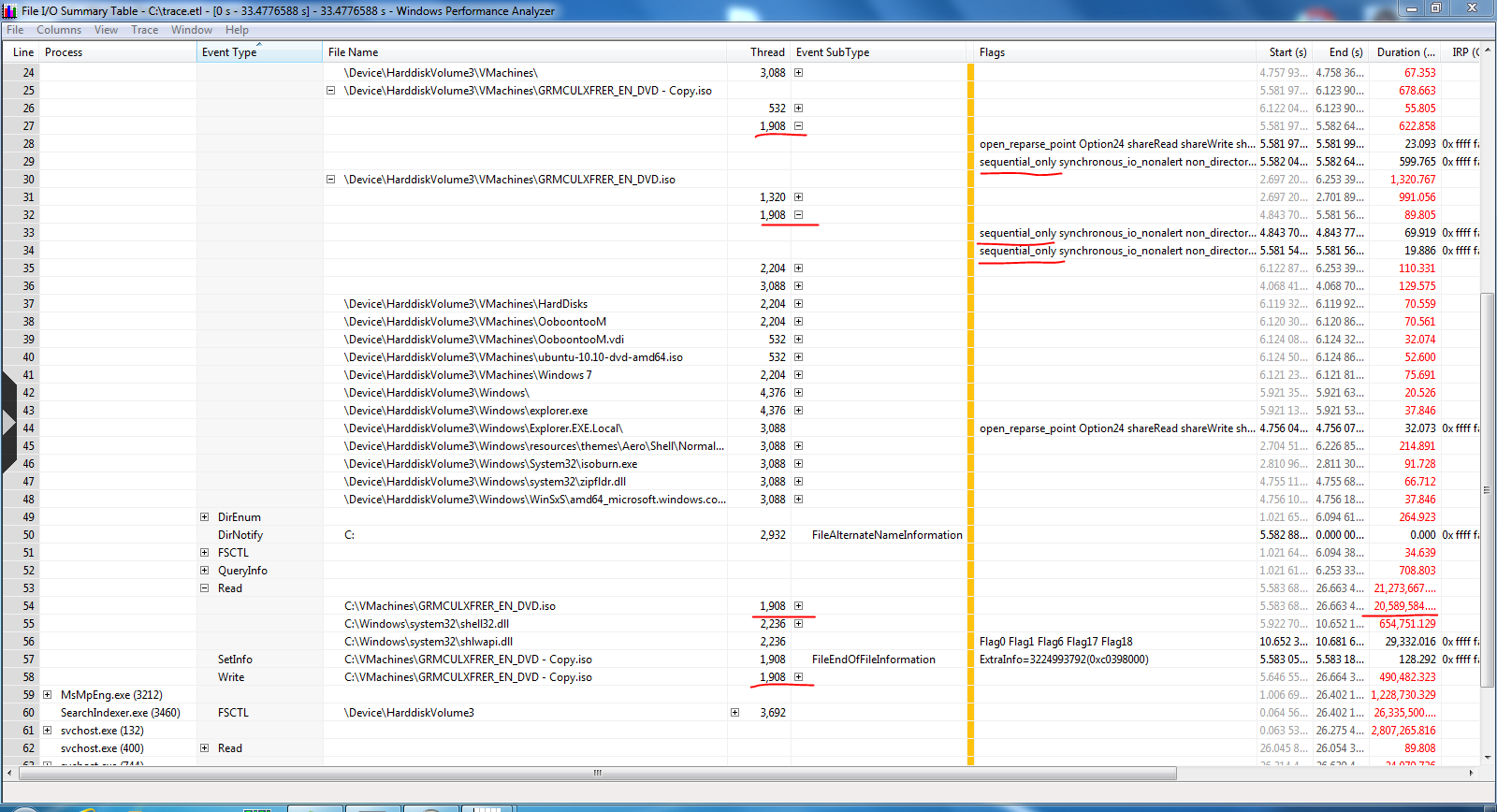

Everything is simple here. We start copying an ISO image and see that the copy runs at normal I/O priority:

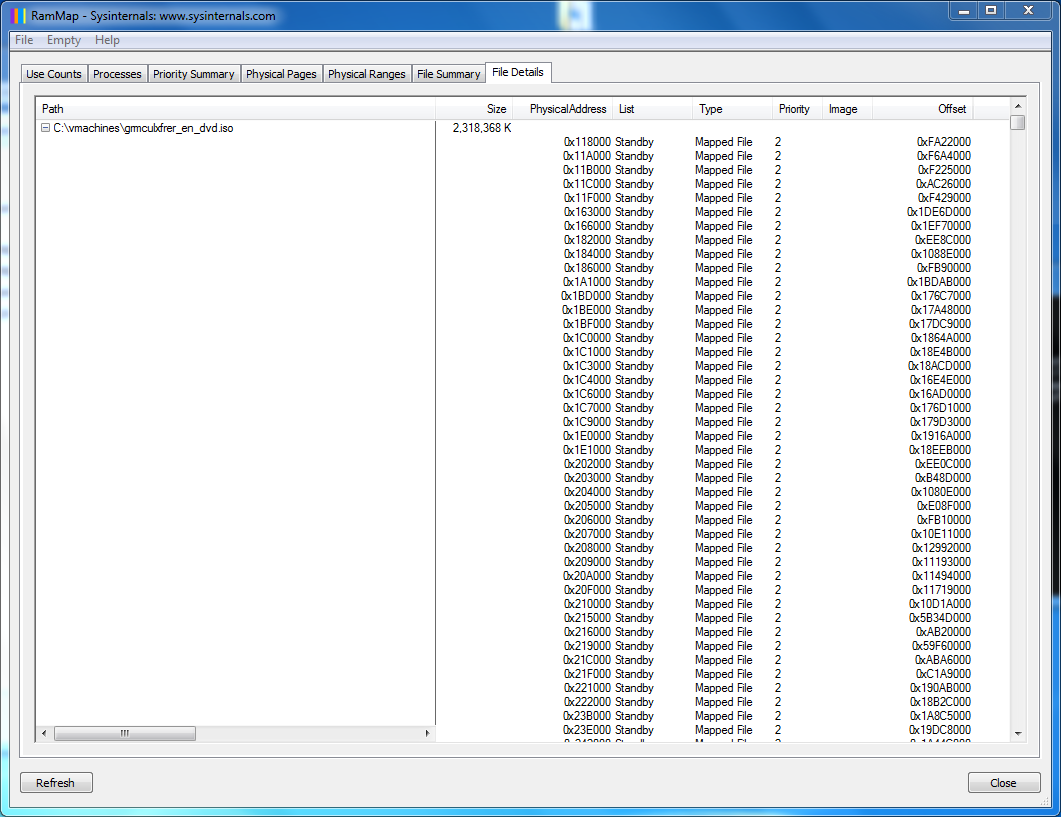

I did not save the thread's page priority, but I can say with a high degree of confidence that it is also “normal”, that is, 5. Does this mean that copying files sooner or later evicts everything else from the cache? Let's check:

Everything was cached at priority 2 even though the reading was done at priority 5. What's going on? Here's what:

Copy operations use the FILE_FLAG_SEQUENTIAL_SCAN flag, which lowers the cache priority relative to the base one, makes caching use only a single VACB, and enables more aggressive read-ahead.
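An application that streams a large file itself can give the same hint. The sketch below uses Python's `os.O_SEQUENTIAL`, which exists only on Windows; the assumption here, worth verifying against the C runtime documentation, is that the runtime passes it on to CreateFile as FILE_FLAG_SEQUENTIAL_SCAN. The `copy_sequential` helper is a hypothetical name, and on other platforms the flag is simply skipped.

```python
import os


def copy_sequential(src, dst, chunk_size=1024 * 1024):
    """Copy src to dst, hinting on Windows that src is read front to back."""
    # os.O_SEQUENTIAL and os.O_BINARY exist only on Windows; use 0 elsewhere.
    flags = (os.O_RDONLY
             | getattr(os, "O_BINARY", 0)
             | getattr(os, "O_SEQUENTIAL", 0))
    fd = os.open(src, flags)
    with os.fdopen(fd, "rb") as source, open(dst, "wb") as target:
        while chunk := source.read(chunk_size):
            target.write(chunk)
```

Calling `copy_sequential("image.iso", "backup.iso")` (both paths hypothetical) copies the file in 1 MiB chunks; whether the cached pages actually end up at a lower priority is something to check on a real system rather than take from this sketch.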

Source: https://habr.com/ru/post/107607/
