Here be dragons: Memory management in Windows as it is [1/3]

Contents:

One

Two

Three

The memory manager (along with the related cache manager, I/O manager and so on) is one of those things that, like medicine and politics, everyone "understands". But even people who have “thoroughly studied Windows” slip up every now and then and start writing nonsense like this (to say nothing of the other nonsense in the same post):

“Competent memory handling!!! In all the time I have been using it, my swap file has not grown by a single kilobyte. That is why Firefox with 10-20 windows minimizes to the tray and restores from it like a bullet. On Windows I could only get this effect with swap disabled and the tmp files moved to a RAM disk.”

Or take μTorrent, for example: I have no reason to doubt the competence of its authors, but they evidently know little about how memory works in Windows. Let's not forget the folks who produce performance-monitoring software without having a clue about memory management in Windows (and who threw a tantrum about it across half the Internet; there was even a debriefing on Ars). But the most amazing thing I have seen in connection with memory management is the advice to move the pagefile to a RAM disk:

“Of my three gigabytes, I set aside one for a RAM disk (back when XP was still installed on the laptop) and created a 768 MB swap file on it ...”

The purpose of this article is not to give a complete description of how the memory manager works (there is neither enough space nor enough experience for that), but to try to shed a little light on the dark realm of myths and superstitions surrounding memory management in Windows.

Disclaimer

I do not claim to know everything or to never make mistakes, so I will gladly accept any reports of inaccuracies and errors.


Introduction

I don't know where to start, so I'll start with definitions.

Commit Size is the amount of memory that the application has requested for its own needs.

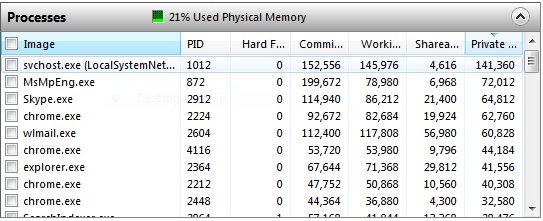

Working Set (labeled as such in the picture above) is the set of physical memory pages that are currently mapped into the address space of the process. The working set of the System process is usually treated separately as the “System working set”, although the mechanisms for working with it are almost the same as those for the working sets of other processes.

And this is where the misunderstandings often begin. If you look closely, you can see that for many processes Commit is smaller than the Working Set. Taken literally, that would mean the process “requested” less memory than it actually uses. So let me clarify: Commit is virtual memory “backed” only by physical memory or the pagefile, while the Working Set also contains pages from memory-mapped files. Why is it done this way? When NtAllocateVirtualMemory is called (or any of the wrappers above it, such as the heap manager behind malloc or new), memory is reserved (to add to the confusion, this has nothing to do with MEM_RESERVE, which reserves address space; here it is a reservation of physical pages that the system can actually provide), but physical pages are mapped in only when the allocated virtual address is actually accessed. If applications were allowed to allocate more memory than the system can really provide, sooner or later they might all ask for a real page at once, and the system would have nowhere to get one from (or rather, nowhere to save the data). This does not apply to memory-mapped files, because the system can re-read the needed page from disk, or write it back to disk, at any time.

In short, the total Commit Charge must never exceed the system Commit Limit (roughly, the sum of physical memory and all pagefiles), and this is one of the most misunderstood numbers in Task Manager up to and including Vista.

The Commit Limit is not constant: it can grow as the pagefiles grow. Generally speaking, the pagefile can be thought of as a very special memory-mapped file: a physical page in virtual memory is bound to a specific location in the pagefile only at the very last moment before it is written out; otherwise the memory mapping and swapping mechanisms are very similar.
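The commit rule described above can be sketched as a toy accounting model. This is only an illustration, not how the kernel is implemented: the class `CommitAccountant`, its methods and all sizes are hypothetical names and numbers chosen for the example, and the model ignores details such as copy-on-write mappings.

```python
class CommitAccountant:
    """Toy model of system-wide commit accounting (hypothetical, not a Windows API)."""

    def __init__(self, physical_mb, pagefile_mb):
        self.physical_mb = physical_mb
        self.pagefile_mb = pagefile_mb
        self.charge_mb = 0  # current system Commit Charge

    @property
    def limit_mb(self):
        # Commit Limit is roughly physical memory plus all pagefiles.
        return self.physical_mb + self.pagefile_mb

    def commit(self, size_mb):
        """Charge commit for memory backed by RAM or the pagefile; refuse past the limit."""
        if self.charge_mb + size_mb > self.limit_mb:
            return False
        self.charge_mb += size_mb
        return True

    def map_file(self, size_mb):
        """Mapping an ordinary file charges nothing in this model: the file is the backing store."""
        return True

    def grow_pagefile(self, extra_mb):
        # A growing pagefile raises the Commit Limit.
        self.pagefile_mb += extra_mb


system = CommitAccountant(physical_mb=4096, pagefile_mb=2048)
print(system.limit_mb)          # 6144
print(system.commit(5000))      # True
print(system.commit(2000))      # False: 7000 would exceed 6144
print(system.map_file(100000))  # True: no commit is charged
system.grow_pagefile(1024)
print(system.commit(2000))      # True: 7000 fits under the new limit of 7168
```

The point of the sketch is that the check happens at allocation time, long before any physical page is touched, which is why the charge can be far larger than the memory actually in use.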

A process's Working Set is divided into Shareable and Private. Shareable covers memory-mapped files (including pagefile-backed ones), or rather those parts of them that are actually present in the address space of the process as physical pages (this is the Working Set, after all), while Private covers the heap, stacks, internal data structures such as the PEB / TEB, and so on (again, just in case: only the part of the heap and other structures that is physically present in the address space of the process). This is the minimum information with which you can already do something. For the strong of spirit there is Process Explorer, which shows even more detail (in particular, how much of the Shareable part is actually Shared).

And, most importantly, none of these parameters taken on its own allows you to draw more or less complete conclusions about what is happening in a program or in the system.

Task Manager

The “Memory” column in the process list and almost the entire “Performance” tab are misunderstood so often that I wish Task Manager were removed from the system altogether: those who need it can use Process Explorer or at least Resource Monitor, and for everyone else Task Manager only does harm. To begin with, what is this actually about?

I'll start with what I already mentioned: Page File usage. XP shows the current pagefile usage and its history (the funny thing is that the same numbers are named correctly in the status bar), Vista shows Page File (as a Current / Limit fraction), and only Win7 calls it what it really is: Commit Charge / Commit Limit.

Experiment. Open Task Manager on the tab with “pagefile usage”, open PowerShell and paste the following into it (on systems where the Commit Charge is within 3 GB of the Commit Limit, you can reduce the 3Gb in the last line or, better, enlarge the pagefile):

    add-type -Namespace Win32 -Name Mapping -MemberDefinition @"
    [DllImport("kernel32.dll", SetLastError = true)]
    public static extern IntPtr CreateFileMapping(
        IntPtr hFile,
        IntPtr lpFileMappingAttributes,
        uint flProtect,
        uint dwMaximumSizeHigh,
        uint dwMaximumSizeLow,
        [MarshalAs(UnmanagedType.LPTStr)] string lpName);

    [DllImport("kernel32.dll", SetLastError = true)]
    public static extern IntPtr MapViewOfFile(
        IntPtr hFileMappingObject,
        uint dwDesiredAccess,
        uint dwFileOffsetHigh,
        uint dwFileOffsetLow,
        uint dwNumberOfBytesToMap);
    "@

    # hFile = -1 (INVALID_HANDLE_VALUE) requests a pagefile-backed section; flProtect 2 = PAGE_READONLY
    $mapping = [Win32.Mapping]::CreateFileMapping(-1, 0, 2, 1, 0, $null)
    # dwDesiredAccess 4 = FILE_MAP_READ; the view is mapped but never touched
    [Win32.Mapping]::MapViewOfFile($mapping, 4, 0, 0, 3Gb)

This leads to an instant increase in “pagefile usage” by 3 gigabytes. Pasting it again “uses” another 3 GB. Closing the process instantly releases all of the “occupied pagefile”.

The most interesting part is that, as I already said, memory-mapped files (including pagefile-backed ones) are shareable and do not belong to any particular process, so they are not counted in any process's Commit Size. On the other hand, pagefile-backed sections are charged against commit, because it is physical memory or the pagefile, and not some other file, that will hold the data the application wants to put into the section. On the third hand, after mapping the section into its address space the process never touches it, so no physical pages are mapped at those addresses and the Working Set of the process does not change.

Strictly speaking, the pagefile really is “used”: space is reserved in it (not a specific position, just space, as an amount), but the actual page for which that space was reserved may be in physical memory, on disk, or in both places at once. So there is a number for you. Admit it honestly: how many times, looking at “Page File usage” in Task Manager, did you really understand what it means?
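To keep the three counters from the experiment apart, here is a minimal sketch that tracks them separately. Everything in it (`ToySystem`, `ToyProcess`, the method names, the sizes in MB) is hypothetical and only mirrors the behaviour described in the text; it is not a model of the real kernel structures.

```python
from dataclasses import dataclass


@dataclass
class ToyProcess:
    commit_mb: int = 0        # per-process Commit Size (private memory only)
    working_set_mb: int = 0   # pages actually mapped into the address space


@dataclass
class ToySystem:
    commit_charge_mb: int = 0  # system-wide Commit Charge

    def alloc_private(self, proc, size_mb):
        # Private memory is charged to the system and counted for the process.
        self.commit_charge_mb += size_mb
        proc.commit_mb += size_mb

    def map_pagefile_section(self, proc, size_mb):
        # A pagefile-backed section is charged system-wide only:
        # it is shareable and is not attributed to the mapping process.
        self.commit_charge_mb += size_mb

    def touch(self, proc, size_mb):
        # Only touching the memory brings pages into the working set.
        proc.working_set_mb += size_mb


system, shell = ToySystem(), ToyProcess()

system.map_pagefile_section(shell, 3072)
print(system.commit_charge_mb, shell.commit_mb, shell.working_set_mb)  # 3072 0 0

system.alloc_private(shell, 100)
print(system.commit_charge_mb, shell.commit_mb, shell.working_set_mb)  # 3172 100 0

system.touch(shell, 16)
print(system.commit_charge_mb, shell.commit_mb, shell.working_set_mb)  # 3172 100 16
```

The first line of output corresponds to the experiment: the system-wide number jumps, while both per-process numbers stay where they were.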

As for the Processes tab: it still shows Memory (Private Working Set) by default, and although that is named quite correctly and should not confuse knowledgeable people, the problem is that the vast majority of people who look at these numbers have no idea what they mean. A simple experiment: run the RamMap utility (I advise downloading the entire bundle) and open Task Manager on the list of processes. In RamMap, choose Empty -> Empty Working Sets and watch what happens to the memory of the processes.

If anyone is still bothered by the numbers in Task Manager, you can put the following code in your PowerShell profile:

    add-type -Namespace Win32 -Name Psapi -MemberDefinition @"
    [DllImport("psapi", SetLastError=true)]
    public static extern bool EmptyWorkingSet(IntPtr hProcess);
    "@

    # Trim the working set of every process object coming down the pipeline
    filter Reset-WorkingSet { [Win32.Psapi]::EmptyWorkingSet($_.Handle) }
    sal trim Reset-WorkingSet

After that, you can “optimize” memory usage with a single command, for example the memory occupied by chrome:

    ps chrome | trim

Or here is the “optimization” of the memory of all chrome processes using more than 100 MB of physical memory:

    ps chrome | ? { $_.WS -gt 100Mb } | trim

If at least half of those who have read this far recognize the very idea of such “optimization” as the obvious absurdity it is, then I have not labored in vain.

Cache

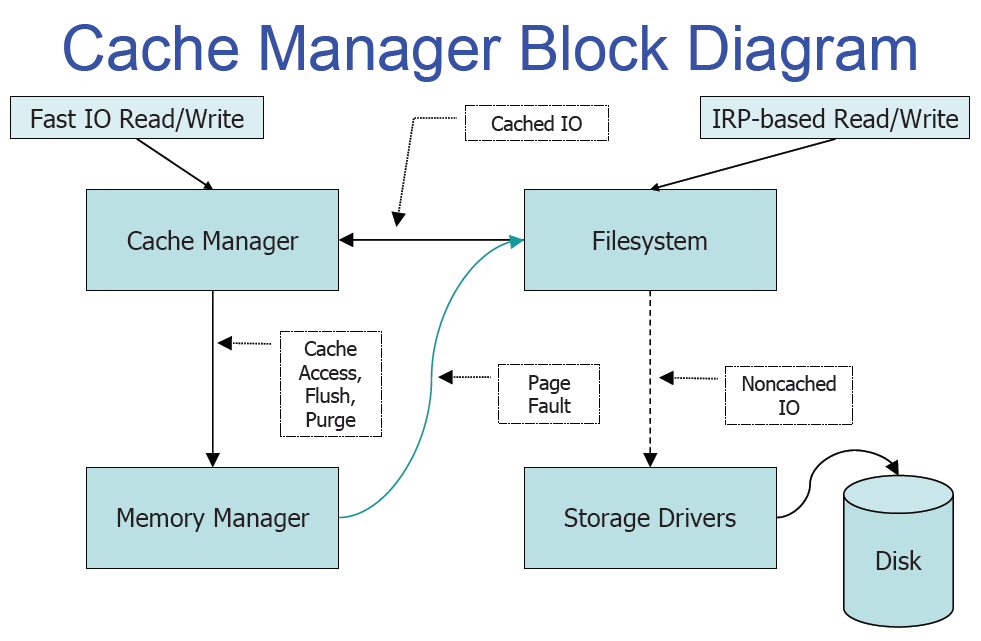

First of all, note that the cache in Windows is not a block cache but a file cache. This brings quite a few advantages: simpler maintenance of cache coherence (for example, during online defragmentation), a simple mechanism for purging the cache when a file is deleted, a more consistent implementation (the cache manager is built on top of the memory mapping mechanism), and the ability to make caching decisions based on higher-level information about the data being read (for example, intelligent read-ahead for files opened for sequential access, or the ability to assign priorities to individual file handles).

In principle, the only drawback I can name is the much more complicated life of file system developers: have you heard that writing drivers is for psychos? Well, writing file system drivers is for those whom even the psychos consider psychos.

If we try to describe how the cache works, however, everything is extremely simple: when the file system asks the cache manager for some part of a file, the latter simply maps that part of the file into a special “slot” described by a VACB structure (you can view all mapped files with the kernel debugger extension !filecache) and then simply performs a memory copy (RtlCopyMemory). A page fault occurs, because immediately after the file is mapped all of its pages are invalid, and the system can then either find the needed page in one of the “free” lists or perform a read operation.

To understand recursion, you must first understand recursion. How, then, is the file read performed that is needed to complete the read of that very file? Here again everything is quite simple: an I/O request packet (IRP) is created with the IRP_PAGING_IO flag, and on receiving such a packet the file system no longer goes to the cache but goes straight to the underlying disk device for the data. All these mapped slots belong to the System Working Set and make up PART of the cache.

A slide from a lecture at some university in Tokyo (oh, I wish I had lectures like that):

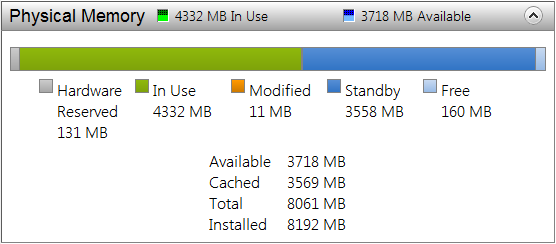

With this, the work of the cache manager itself ends and the work of the memory manager begins. When we called EmptyWorkingSet above, it caused no disk activity, and yet the physical memory used by the process shrank (and all the physical pages really did leave the address space of the process, leaving it almost entirely invalid). So where does memory go after it is taken away from a process? Depending on whether its contents match what was read from disk, it goes to one of two lists: Standby (starting with Vista this is not one list but 8, more on that later) or Modified.

The standby list is thus free memory that contains some data from disk (possibly including the pagefile).

If a page fault occurs at an address mapped to a part of a file that is still on one of these lists, the page simply returns to the working set of the process and is mapped at the required address (this is called a soft fault). If not, then, as with the cache manager slots, a PAGING_IO request is issued (this is called a hard fault).

The modified list may hold “dirty” pages for quite a long time, but when the list grows too large, or when the system detects a shortage of free memory, or on a timer, the modified page writer thread wakes up and starts writing the list out to disk in portions, moving the pages from the modified list to standby (after all, these pages once again hold an unmodified copy of the data on disk).
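The page movements described above can be played through in a toy model. It is a deliberately simplified sketch: `ToyMemoryManager` and its methods are hypothetical names, there is a single standby list instead of eight, and pages are just string labels.

```python
class ToyMemoryManager:
    def __init__(self):
        self.working_set = {}  # page -> True if the page is dirty
        self.standby = set()   # clean pages that left a working set
        self.modified = set()  # dirty pages waiting to be written out
        self.soft_faults = 0
        self.hard_faults = 0

    def access(self, page, write=False):
        if page not in self.working_set:
            if page in self.standby:
                # Soft fault: the page is still in memory, just put it back.
                self.standby.discard(page)
                self.soft_faults += 1
                dirty = False
            elif page in self.modified:
                # Also a soft fault, but the page is still dirty.
                self.modified.discard(page)
                self.soft_faults += 1
                dirty = True
            else:
                # Hard fault: the page has to be read from disk.
                self.hard_faults += 1
                dirty = False
            self.working_set[page] = dirty
        if write:
            self.working_set[page] = True

    def empty_working_set(self):
        # Trimming moves pages to the lists; nothing is written to disk here.
        for page, dirty in self.working_set.items():
            (self.modified if dirty else self.standby).add(page)
        self.working_set.clear()

    def run_modified_page_writer(self):
        # After being written out, dirty pages become clean standby pages.
        written = len(self.modified)
        self.standby |= self.modified
        self.modified.clear()
        return written


mm = ToyMemoryManager()
mm.access("a")
mm.access("b", write=True)
mm.empty_working_set()
print(sorted(mm.standby), sorted(mm.modified))  # ['a'] ['b']
print(mm.run_modified_page_writer())            # 1
mm.access("a")
mm.access("c")
print(mm.soft_faults, mm.hard_faults)           # 1 3
```

In this model trimming a working set costs nothing by itself; the price is paid only later, and only as a hard fault if the page has meanwhile left the lists.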

Upd:

User m17 posted links to Russinovich's talks at the latest PDC on the same topic (hmm, I honestly had not watched them before, although this post has something in common with them). If your listening comprehension of English allows, you can watch the presentations instead of reading this post:

Mysteries of Windows Memory Management Revealed, Part 1 of 2

Mysteries of Windows Memory Management Revealed, Part 2 of 2

User DmitryKoterov points out that moving the pagefile to a RAM disk can sometimes actually make sense (I would never have guessed had I not written this post), namely when the RAM disk uses physical memory that is inaccessible to the rest of the system (PAE + x86 + 4+ GB of RAM).

User Vir2o, in turn, points out that although under some conditions, at the cost of system stability, it is possible to write a RAM disk that uses physical memory invisible to the rest of the system, this is very unlikely.

Source: https://habr.com/ru/post/107605/
