How I saved the site apache2dev.ru

I decided to visit a site dedicated to the internals of apache2, which I had not visited for several years. All I saw was a message from the hosting provider saying that the site was temporarily blocked. I searched Yandex: there was nothing in the search engine cache, so I assume the site had been blocked for a long time. I extended the hosting for one day via SMS and saw that there had been no updates since December 2007. I tried to contact the author at the e-mail address listed in the domain contacts, but received no answer...

I should note that I was lucky with the site I was saving: it is simple and logical. Copying articles and pictures by hand is a long and boring process, so I decided to copy everything at once. What we have:


- The source site is completely static.

- mod_rewrite routes every request to a single PHP script.

- The script looks for the file in the cache; if it is not cached, it requests it from the source site.

- I decided to store everything in sqlite.

For data storage, I chose between three options:

Keep files as is: if the source address of the page is /topic/123.html, create a topic directory and place the file in it. This is what wget does, for example. I did not like this approach.

Take an md5 hash of the URI and save many files named data/<hash>.db. Finding anything in that folder afterwards is impossible. I did not like it.

Take an md5 hash and store everything in an sqlite database. At its core, this is the same as the previous option, but with a single file. I also considered storing the data in mysql, but that is cumbersome and not portable. With sqlite, you copy a few files to a new location and the site is deployed and ready to go.
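To illustrate the hashing idea, here is a minimal sketch in Python (the author's script is PHP, and its source is not reproduced in this article). The `SOURCE_SITE` address and the `cache_key` name are hypothetical and exist only for this example.

```python
import hashlib

# Hypothetical address of the site being mirrored (an assumption for this sketch).
SOURCE_SITE = "http://source.example"


def cache_key(request_uri: str) -> str:
    """Return the md5 hex digest that identifies a page in the cache."""
    full_url = SOURCE_SITE + request_uri
    return hashlib.md5(full_url.encode("utf-8")).hexdigest()


# Every URI maps to a fixed-length key, whatever its directory depth.
key = cache_key("/topic/123.html")
```

The key is always 32 hexadecimal characters, so it works equally well as a file name or as a primary key in a database table.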

ModRewrite - I didn’t invent anything new:

RewriteCond %{REQUEST_FILENAME} !-f

RewriteCond %{REQUEST_FILENAME} !-d

RewriteRule . /index.php

# Redirect requests for any other host name to the canonical domain:

RewriteCond %{HTTP_HOST} !^apache2dev\.ru$

RewriteRule ^ http://apache2dev.ru%{REQUEST_URI} [R=301,L]

How it all works - index.php

The source code can be found here: apache2dev.ru/index.phps

1. Take $_SERVER['REQUEST_URI'] and, just in case, prepend the address of the source site.

2. Compute the md5 hash.

3. Check whether the requested page is in the local cache.

4. If not, request it from the source site and parse the response headers. Only two are of interest: 'Content-Type' and 'Last-Modified'.

5. Save the headers and the response in the cache. Return the result to the client, lightly post-processing HTML files first. The criterion is Content-Type = text/html.

6. Add an Expires header one day ahead.
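The six steps above can be sketched roughly as follows. This is a Python illustration under stated assumptions, not the author's index.php: the cache is a plain dictionary, `fetch` is an injected callable that returns raw header lines and a body, and `SOURCE_SITE`, `parse_headers` and `serve` are names invented for the example.

```python
import hashlib
import time
from email.utils import formatdate

SOURCE_SITE = "http://source.example"  # hypothetical source site address
KEPT_HEADERS = ("content-type", "last-modified")


def parse_headers(raw_lines):
    """Keep only the two headers of interest, keyed in lower case."""
    kept = {}
    for line in raw_lines:
        name, sep, value = line.partition(":")
        # The status line ("HTTP/1.1 200 OK") has no colon and is skipped.
        if sep and name.strip().lower() in KEPT_HEADERS:
            kept[name.strip().lower()] = value.strip()
    return kept


def serve(request_uri, cache, fetch, postprocess=lambda body: body):
    """Return (headers, body), filling the cache on a miss."""
    url = SOURCE_SITE + request_uri                      # step 1
    key = hashlib.md5(url.encode("utf-8")).hexdigest()   # step 2
    if key not in cache:                                 # step 3
        raw_lines, body = fetch(url)                     # step 4
        cache[key] = (parse_headers(raw_lines), body)    # step 5: stored unmodified
    headers, body = cache[key]
    headers = dict(headers)  # copy, so the cached entry is not mutated
    if headers.get("content-type", "").startswith("text/html"):
        body = postprocess(body)                         # only HTML is touched
    headers["expires"] = formatdate(time.time() + 86400, usegmt=True)  # step 6
    return headers, body


def fake_fetch(url):
    """Stand-in for a real HTTP request, so the sketch runs offline."""
    lines = ["HTTP/1.1 200 OK", "Content-Type: text/html", "Server: demo"]
    return lines, b"<html>hi</html>"


cache = {}
headers, body = serve("/index.html", cache, fake_fetch)
```

Because post-processing happens on the way out, the cache keeps the original bytes, which matches the decision to store data in its original form.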

I decided for myself that I will keep the data in the cache in its original form.

Create a database:

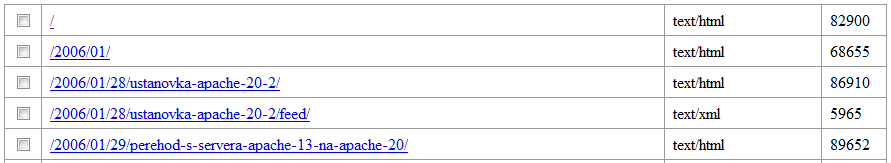

CREATE TABLE storage (loc TEXT PRIMARY KEY, heads TEXT, fdata TEXT, location TEXT);

Now, when I opened my website and saw a page with pictures on it, the first portion of data was saved to the local cache.
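For reference, the storage table defined above could be used roughly like this. The sketch is in Python with an in-memory database; what the author actually puts in each column is not stated, so storing the md5 key in `loc`, header lines in `heads`, the raw body in `fdata` and the original URL in `location` is an assumption, as are the helper names.

```python
import hashlib
import sqlite3


def open_storage(path=":memory:"):
    """Open the database and create the table from the article if needed."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS storage "
        "(loc TEXT PRIMARY KEY, heads TEXT, fdata TEXT, location TEXT)"
    )
    return db


def put(db, url, heads, body):
    """Store one response under the md5 hash of its URL."""
    key = hashlib.md5(url.encode("utf-8")).hexdigest()
    db.execute(
        "INSERT OR REPLACE INTO storage (loc, heads, fdata, location) "
        "VALUES (?, ?, ?, ?)",
        (key, heads, body, url),
    )
    db.commit()


def get(db, url):
    """Return (heads, fdata) for a URL, or None when it is not cached."""
    key = hashlib.md5(url.encode("utf-8")).hexdigest()
    return db.execute(
        "SELECT heads, fdata FROM storage WHERE loc = ?", (key,)
    ).fetchone()


db = open_storage()
put(db, "http://source.example/a.gif", "Content-Type: image/gif", b"GIF89a")
row = get(db, "http://source.example/a.gif")
```

sqlite keeps Python `bytes` as a BLOB even in a column declared TEXT, so binary files such as images survive the round trip unchanged.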

I run

'wget -r .ru'

and let it work for a while. wget with the -r option tries to download the entire site recursively. It does not do this perfectly: for example, it does not understand JavaScript. So I open the site and click through the pages to pick up what wget missed.

In conclusion, I knocked together one more quick script that shows what is now in the local database. Skimming the list, I deleted a couple of rows.

Source: apache2dev.ru/list.phps

I then put the site into operation mode. The logic is now this: if the data is not in the local cache, nothing is requested from the source site and a 404 error is returned.
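A minimal sketch of this operation mode, in Python and with a dictionary standing in for the sqlite cache. The function name `serve_frozen` and the shape of the return value are assumptions made for the example.

```python
import hashlib


def serve_frozen(request_uri, cache):
    """Answer from the cache only; the source site is never contacted."""
    key = hashlib.md5(request_uri.encode("utf-8")).hexdigest()
    entry = cache.get(key)
    if entry is None:
        # Cache miss: report 404 instead of fetching.
        return 404, {"content-type": "text/plain"}, b"Not Found"
    headers, body = entry
    return 200, headers, body


# One cached page; everything else is reported as missing.
frozen_cache = {
    hashlib.md5(b"/index.html").hexdigest(): ({"content-type": "text/html"}, b"<html></html>")
}
hit = serve_frozen("/index.html", frozen_cache)
miss = serve_frozen("/unknown.html", frozen_cache)
```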

Measuring speed

I measured with ab: a 70 KB picture and an arbitrary HTML page. The only difference between the two is the additional preg_replace for HTML (I allowed myself to cut some advertising, replace absolute links with relative ones, and insert a warning that the page is a copy).

# ab -c 10 -n 1000 apache2dev.ru/images/ff_adds/validator.gif

Requests per second: 417.32 [#/sec] (mean)

# ab -c 10 -n 1000 apache2dev.ru/2006/01/28/ustanovka-apache-20-2

Requests per second: 29.66 [#/sec] (mean)

The result suits me perfectly.
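The HTML post-processing mentioned in the measurement (cutting advertising, making links relative, inserting a notice) might look something like this. It is a Python sketch using `re.sub` in place of PHP's preg_replace; the actual patterns in index.php are not shown in the article, so the ad marker comments, the notice text and `SOURCE_SITE` are all hypothetical.

```python
import re

SOURCE_SITE = "http://source.example"  # hypothetical source site address
NOTICE = '<p class="copy-notice">This page is an archived copy.</p>'


def postprocess(html: str) -> str:
    """Apply the three rewrites to one HTML page."""
    # 1. Absolute links to the source site become relative ones.
    html = re.sub(
        r'(href|src)=(["\'])' + re.escape(SOURCE_SITE) + r"/",
        r"\1=\2/",
        html,
    )
    # 2. Drop advertising blocks (assumed to sit between marker comments).
    html = re.sub(r"<!-- ad -->.*?<!-- /ad -->", "", html, flags=re.DOTALL)
    # 3. Insert the notice right after the opening <body> tag.
    html = re.sub(
        r"(<body[^>]*>)",
        lambda m: m.group(1) + NOTICE,
        html,
        count=1,
        flags=re.IGNORECASE,
    )
    return html


page = (
    '<body><a href="http://source.example/a.html">a</a>'
    "<!-- ad -->buy<!-- /ad --></body>"
)
result = postprocess(page)
```

Running several regular expressions over every HTML response is extra work on each request, which is consistent with the HTML page being served more slowly than the image.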

Pros and cons of this approach:

- The site needs to be "saved" only once, and then you can forget about it. WordPress scares me somewhat: you have to look after it, update it, and so on.

- The site fits in 7 files and is convenient to copy; the sqlite database is 20 MB.

- Minimal system requirements.

- The most important disadvantage (in my opinion) is that not every site can be saved this way. There are sites where you can follow links forever and never reach the end.

Possible improvements:

- The data could be stored in the database already processed (preg_replace, etc.).

- The data could also be stored compressed. Check Accept-Encoding: if gzip is listed, output as is; otherwise decompress.

- If high performance is needed, nginx can be asked to cache the output of the PHP script.

- Normalize the page URI beforehand. For example, strip every ?from=top10 and ?from=ap2.2; currently the same pages (under different addresses) are saved several times.

- Pass max_redirects = 0 to file_get_contents (through the stream context), handle the error, and save the Location header. Currently, a user requesting /get.php?=/download/123.pdf immediately receives the data, as if the PDF file were located at that address: file_get_contents (in our index.php), on seeing the redirect, silently follows it. As intended, the user should instead receive an HTTP/1.1 302 Found response from the server and make another request directly for the file itself.
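Two of these improvements, URI normalization and compressed storage, can be sketched as follows. This is a Python illustration of the idea only; the function names are invented, treating `from` as the only parameter to strip is an assumption based on the examples above, and the Accept-Encoding check ignores quality values such as `gzip;q=0`.

```python
import gzip
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters that do not change the page content (assumed list).
IGNORED_PARAMS = {"from"}


def normalize_uri(uri: str) -> str:
    """Strip ignored query parameters so duplicate pages share one key."""
    parts = urlsplit(uri)
    query = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), ""))


def pack(body: bytes) -> bytes:
    """Compress a response body before storing it."""
    return gzip.compress(body)


def respond(stored: bytes, accept_encoding: str):
    """Return (extra_headers, body) for a client's Accept-Encoding value."""
    offered = [token.split(";")[0].strip().lower() for token in accept_encoding.split(",")]
    if "gzip" in offered:
        # Client understands gzip: send the stored bytes untouched.
        return {"content-encoding": "gzip"}, stored
    # Otherwise unpack before sending.
    return {}, gzip.decompress(stored)


stored = pack(b"<html>page</html>")
plain_headers, plain_body = respond(stored, "identity")
gzip_headers, gzip_body = respond(stored, "gzip, deflate")
```

With normalization applied before hashing, /page?from=top10 and /page?from=ap2.2 would both be stored once under the key for /page.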

By the way, the site about apache discussed here is: apache2dev.ru

Complete set: apache2dev.ru/catcher.tgz

Source: https://habr.com/ru/post/105826/
