Getting crafty with Squid on a corporate network

Recently I came across a rather entertaining article (http://habrahabr.ru/blogs/sysadm/28063/) describing how to build a cluster of proxy servers to increase total bandwidth. At first this curious solution seemed to belong in a museum of obsolete technology, but after thinking it over I came to more interesting conclusions.

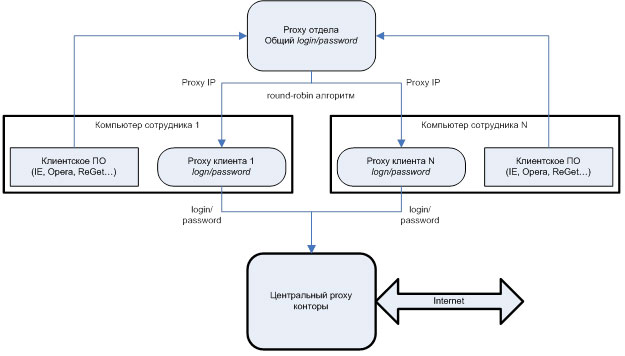

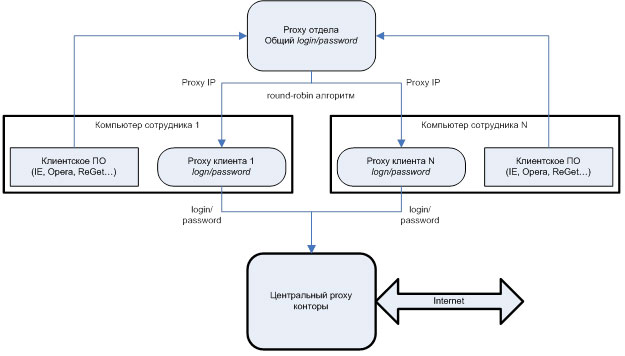

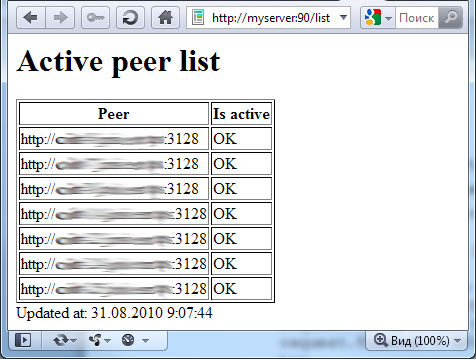

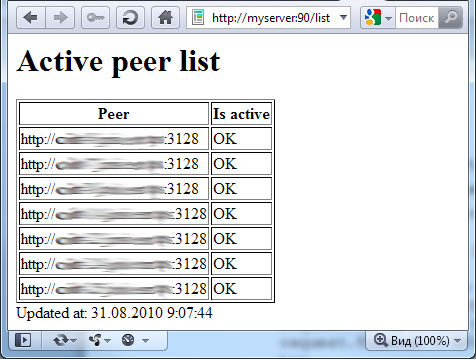

The thing is, our office, and I along with it, is located in a region where Internet access is rather expensive, and general management is not particularly savvy about IT. As a result, 500 people share a channel of two megabits at most, so it is a happy moment when an individual's speed rises above 128 kbps. And that is more than sad.

Fortunately, I work in a sensible department that is open to new ideas and, importantly, just as unhappy with the available speeds. Each employee has a computer and an Active Directory account, and the central proxy uses those credentials to hand out that employee's own slice of the meager bandwidth. As you can see, this is a fairly favorable environment for cascading proxy servers. I will not describe the steps for configuring the Squid instances, since the article mentioned above covers that well. I will only show a picture illustrating the general idea and comment on it briefly:

Squid is installed on the computers of the participating department employees and configured to obtain Internet access from the office's central proxy server using the employee's own credentials. This is done with the following Squid configuration line:

    cache_peer proxy_address parent port 0 no-query default login=user:password

Because the password is stored in the configuration file, it is strongly recommended to restrict access to that file.

Next, a department proxy server is brought up, which distributes incoming requests among the employees' proxies using the reasonably fair round-robin algorithm (round-robin balances load in a distributed system by cycling through its elements in order). So that the server knows which proxies are available to it, the following lines are added to its configuration, one per employee proxy:

    cache_peer employee_proxy parent port 0 no-query round-robin
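
To make the round-robin idea concrete, here is a minimal sketch in C#. It only illustrates cycling through a list of peers in order. It is not how Squid implements the option internally, and the `RoundRobinSelector` class is a hypothetical name introduced for this example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: hands out peers in a fixed circular order.
public sealed class RoundRobinSelector
{
    private readonly IList<string> peers;
    private int next; // index of the peer to return on the next call

    public RoundRobinSelector(IList<string> peers)
    {
        if (peers == null || peers.Count == 0)
            throw new ArgumentException("At least one peer is required.", "peers");
        this.peers = peers;
    }

    // Returns the next peer and advances the position, wrapping at the end.
    public string Next()
    {
        string peer = peers[next];
        next = (next + 1) % peers.Count;
        return peer;
    }
}
```

With three peers `a`, `b` and `c`, four successive calls to `Next()` return `a`, `b`, `c` and then `a` again, so each employee proxy receives roughly the same share of requests.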

Finally, client software such as browsers and download managers is configured to use the freshly installed department proxy server.

If you want to harden the resulting setup, there are various ways to do so; I used the simplest one. The employees' proxies are configured so that only the employee and the department proxy can access them:

    acl localhost src 127.0.0.1/32
    acl proxynet src department_proxy
    http_access allow proxynet
    http_access allow localhost
    http_access deny all

To keep outsiders out of the resulting cluster, the following settings are added to the department proxy's configuration (the paths are correct if Squid for Windows is installed in the default folder):

    auth_param basic program c:/squid/libexec/ncsa_auth.exe c:/squid/etc/passwd
    auth_param basic children 5
    auth_param basic casesensitive off
    acl Authenticated proxy_auth REQUIRED
    http_access deny !Authenticated

The file c:/squid/etc/passwd contains the login/hash pairs used for authentication.

That could have been the end of this article, were it not for one important issue that pushed me to write it. Under the domain policies we have to change our passwords periodically, and of course some colleagues occasionally forget to update their proxy configuration file to match. This shows up as a rather unpleasant symptom: every user of the cluster is periodically prompted for credentials when opening pages. This is very annoying, so I decided to write a diagnostic utility that runs on the department proxy machine and, on request, checks that all the proxy servers under its care are available and working correctly.

The algorithm is rather trivial, but I will give it here anyway. The GetPeers method takes the name of the department proxy's configuration file and returns a list of all the proxy servers:

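    // Requires: using System; using System.Collections.Generic; using System.IO; using System.Text;
    private static IEnumerable<String> GetPeers(string configFileName)
    {
        char[] separators = new char[] { ' ', '\t' };
        List<String> strings = new List<String>();
        // Dispose the reader even if parsing throws.
        using (StreamReader reader = new StreamReader(configFileName, Encoding.Default))
        {
            while (!reader.EndOfStream)
            {
                string st = reader.ReadLine().Trim();
                // Skip empty fields so repeated spaces or tabs do not shift the indexes.
                string[] substrings = st.Split(separators, StringSplitOptions.RemoveEmptyEntries);
                // Match the directive exactly, so cache_peer_access and similar lines are ignored.
                if (substrings.Length > 3 && substrings[0].ToLower() == "cache_peer")
                {
                    // Layout: cache_peer <host> <type> <http port> <icp port> [options]
                    strings.Add("http://" + substrings[1] + ":" + substrings[3]);
                }
            }
        }
        return strings;
    }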
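
To try the parsing without a real squid.conf, the same extraction can be applied to lines held in memory. The following is a minimal sketch under that assumption. `PeerParser` and `ParsePeers` are hypothetical names, and the address used in the note after the code is made up. The sketch matches the directive name exactly and ignores repeated whitespace, so lines such as `cache_peer_access` are not mistaken for peers.

```csharp
using System;
using System.Collections.Generic;

public static class PeerParser
{
    // Same extraction as GetPeers, but over lines already held in memory.
    public static List<string> ParsePeers(IEnumerable<string> configLines)
    {
        char[] separators = { ' ', '\t' };
        List<string> peers = new List<string>();
        foreach (string line in configLines)
        {
            string[] fields = line.Trim().Split(separators, StringSplitOptions.RemoveEmptyEntries);
            // Expected layout: cache_peer <host> <type> <http port> <icp port> [options]
            if (fields.Length > 3 &&
                string.Equals(fields[0], "cache_peer", StringComparison.OrdinalIgnoreCase))
            {
                peers.Add("http://" + fields[1] + ":" + fields[3]);
            }
        }
        return peers;
    }
}
```

For the line `cache_peer 192.168.0.11 parent 3128 0 no-query round-robin` the method yields `http://192.168.0.11:3128`, while comment lines and other directives are skipped.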

The IsPeerAvaliable method checks the state of the proxy server at the given URL. The PeerStatus enumeration is certainly not academically complete, and neither is the way the status is determined, but it is quite sufficient “for all practical purposes”:

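    // Requires: using System; using System.Net;
    private static PeerStatus IsPeerAvaliable(string peer)
    {
        // Request a well-known page through the peer being checked.
        WebRequest request = WebRequest.Create("http://ya.ru");
        request.Method = "GET";
        request.Proxy = new WebProxy(peer);
        request.Timeout = 5000; // milliseconds
        try
        {
            // Dispose the response so the connection is released.
            using (WebResponse response = request.GetResponse())
            {
                return PeerStatus.OK;
            }
        }
        catch (WebException ex)
        {
            // The peer could not be reached at all.
            if (ex.Status == WebExceptionStatus.ConnectFailure)
                return PeerStatus.Offline;
            // An HTTP error status came back; here it is treated as an authentication failure.
            if (ex.Status == WebExceptionStatus.ProtocolError)
                return PeerStatus.AuthError;
            return PeerStatus.Error;
        }
        catch (Exception)
        {
            return PeerStatus.Error;
        }
    }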
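
The article does not show the PeerStatus enumeration itself, so the sketch below assumes a shape with only the four values used above. It also shows one possible refinement, which is not the author's method: reporting an authentication error only when the error response carries HTTP status 407 (Proxy Authentication Required), instead of for every protocol error. `PeerDiagnostics` and `Classify` are hypothetical names.

```csharp
using System;
using System.Net;

// Assumed shape of the enumeration; only these four values appear in the article's code.
public enum PeerStatus { OK, Offline, AuthError, Error }

public static class PeerDiagnostics
{
    // Maps the outcome of a failed request to a PeerStatus.
    // httpStatus is the HTTP status code of the error response, or null if there was none.
    public static PeerStatus Classify(WebExceptionStatus status, HttpStatusCode? httpStatus)
    {
        if (status == WebExceptionStatus.ConnectFailure)
            return PeerStatus.Offline;
        // 407 Proxy Authentication Required: a proxy asked for credentials.
        if (status == WebExceptionStatus.ProtocolError &&
            httpStatus == HttpStatusCode.ProxyAuthenticationRequired)
            return PeerStatus.AuthError;
        return PeerStatus.Error;
    }

    // Convenience overload that pulls the HTTP status out of a WebException.
    public static PeerStatus Classify(WebException ex)
    {
        HttpWebResponse response = ex.Response as HttpWebResponse;
        HttpStatusCode? httpStatus =
            response != null ? response.StatusCode : (HttpStatusCode?)null;
        return Classify(ex.Status, httpStatus);
    }
}
```

Inside the `catch (WebException ex)` branch this could replace the chain of `if` statements with `return PeerDiagnostics.Classify(ex);`. The trade-off is that other HTTP errors, such as a 404 from the test page, would then be reported as a generic error instead of an authentication problem.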

This utility can be implemented as a console or GUI application, or as a service with a web interface. I chose the last option as the simplest to operate; the results look like this:

What conclusions can be drawn from all this?

- Internet speed and responsiveness really did improve, especially for multi-threaded downloads and for opening heavy pages with lots of images. For example, download speed rose from 10-12 Kb/s to 40-50 Kb/s.

- The optimal number of participants in the proxy cluster should probably be determined empirically: at some point adding an Nth participant will most likely stop bringing any benefit (and if the whole office joins the cluster, its effectiveness is obviously zero).

- Finally, I would like to express special respect to general management, whose wise decisions force employees to spend working time on problems that are, by and large, unrelated to their jobs.

Source: https://habr.com/ru/post/103098/
