Until the thunder strikes...

Some of you may already have been in this unpleasant situation: for some reason a RAID controller fails, or the array simply “crumbles”. This happens especially often with cheap controllers built into the motherboard. I'll tell a short but instructive story that happened to me at the dawn of my sysadmin career.

So, one day everything that could only appear in a nightmare actually happened.

One fine morning, our tester comes up to me and asks: “What happened to TFS?” TFS is Team Foundation Server, a kind of web application tied to MS Visual Studio that programmers use to track bugs, and maybe for something else; to me, at least, TFS was a “black box”.

The server this application ran on was an ordinary desktop system unit with an add-in Adaptec 2410SA SATA RAID controller. Three 149 GB SATA disks were combined into a RAID5 array, and everything was installed on it. The controller, of course, had no battery. Yes, tomatoes will now fly at me, but alas: the server was set up before my time, there was (apparently) no money for brand-name hardware, and back then I didn't give it much thought. And that was a mistake.

So, suspecting nothing, I ping the server TFS was installed on. No reply. Swearing under my breath, I go over to the server to check the cables: everything seems to be in order, the server is on, and the “Link” LED on the network card is lit. I switch to the server on the KVM, and there it is: “Non-system disk or disk error”. With the help of the Holy Trinity (Ctrl-Alt-Del), I restart the server. POST passes, the RAID controller initializes, and suddenly... “Array #0 has missing required members. Found 0 arrays.” Now I start swearing out loud. I press Ctrl-A and enter the controller BIOS.

I look, and the array status is FAILED. Two of the three disks are shown in gray.

“So that's what you look like, arctic fox!” I thought. I start working out what actually happened. The controller seems to work. The disks seem to be visible too. I check both “problem” disks with the utility in the controller BIOS. Verification succeeds: “0 errors found”. I reboot, and again the array is not found.

Having written a letter of resignation in advance, I unscrew the server case, put the server next to my computer, connect the hard drives (fortunately they were SATA, so I plugged them straight into the motherboard), and boot.

The drives seem to be detected. Now I need to somehow pull the data off them, namely the MS SQL Server 2005 database that held the TFS data. The biggest problem was that I had to recreate the structure of the RAID array somehow without losing data.

Wandering around the Internet, I came across a program called Runtime RAID Reconstructor (www.runtime.org).

The description said that it can determine the structure of the array automatically. I download it and run it.

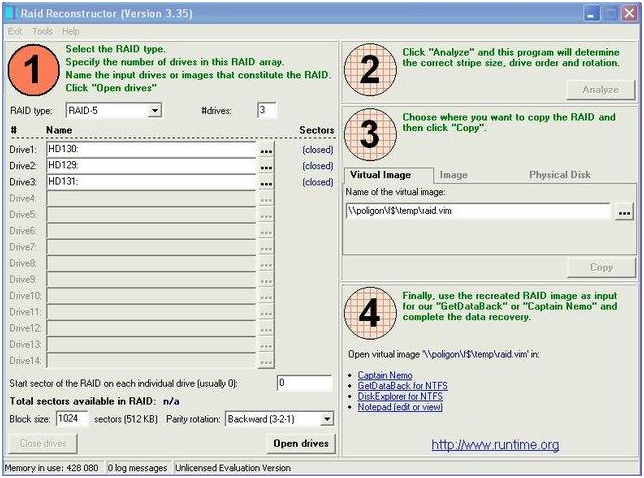

The program window looks like this:

In the left part of the window, I selected my disks. By the way, the program can also create full disk images and then use them in place of the "live" disks, which is useful when a disk is starting to "crumble" and may fail at any minute.
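To make the idea of imaging first and recovering later more concrete, here is a minimal Python sketch of copying a disk to an image file chunk by chunk. It is only an illustration of the principle, not how RAID Reconstructor does it: the function name `image_disk` and its parameters are made up for this example, and it assumes the source can be opened as a plain file (for example a block device on Linux, opened with sufficient privileges).

```python
import os


def image_disk(src_path, dst_path, chunk_size=1024 * 1024):
    """Copy src_path to dst_path chunk by chunk.

    Chunks that cannot be read in full are padded with zeros so that
    all later data stays at its original offset in the image.
    Returns the list of byte offsets where a read failed or came up short.
    """
    bad_offsets = []
    with open(src_path, "rb", buffering=0) as src, open(dst_path, "wb") as dst:
        total = src.seek(0, os.SEEK_END)  # size of the file or block device
        offset = 0
        while offset < total:
            want = min(chunk_size, total - offset)
            try:
                src.seek(offset)
                data = src.read(want) or b""
            except OSError:
                data = b""  # treat an I/O error as an unreadable chunk
            if len(data) != want:
                # Unreadable or short chunk: record it and pad with zeros
                bad_offsets.append(offset)
                data = data.ljust(want, b"\x00")
            dst.write(data)
            offset += want
    return bad_offsets
```

The point of the zero padding is that a failing disk still produces an image of the right size, so whatever works with the image later sees every surviving block at the offset it expects.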

Then I clicked “Open Drives” and then “Analyze” to analyze the structure of the array.

The analysis wizard, by the way, does not offer a 512 KB block size (which seems to be what the array used), but you can add a Custom Size, which is what I did. I left the number of sectors to analyze at the default (10,000).

The analysis succeeded, and the structure of the array was determined.
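What "the structure of the array" means can be shown with a small Python sketch. It is a toy model under loud assumptions: the parity rotation used here (often called left-asymmetric) is just one common RAID5 layout and not necessarily what the Adaptec controller used, all function names are invented for the example, and everything is kept in memory as byte strings. It says nothing about how RAID Reconstructor works internally.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized byte strings."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity_disk_for(stripe, n_disks):
    """Assumed rotation: parity starts on the last disk and moves left."""
    return (n_disks - 1) - (stripe % n_disks)


def split_into_members(data, n_disks, block_size):
    """Build toy RAID5 member images from data (demo helper only).

    len(data) must be a multiple of (n_disks - 1) * block_size.
    """
    members = [bytearray() for _ in range(n_disks)]
    stripe_bytes = (n_disks - 1) * block_size
    for stripe, start in enumerate(range(0, len(data), stripe_bytes)):
        chunk = data[start:start + stripe_bytes]
        blocks = [chunk[i:i + block_size] for i in range(0, stripe_bytes, block_size)]
        data_blocks = iter(blocks)
        for disk in range(n_disks):
            if disk == parity_disk_for(stripe, n_disks):
                members[disk] += xor_blocks(blocks)  # parity block
            else:
                members[disk] += next(data_blocks)   # next data block
    return [bytes(m) for m in members]


def reassemble(members, block_size):
    """Rebuild the logical volume from member images in the given disk order."""
    n_disks = len(members)
    out = bytearray()
    for stripe in range(len(members[0]) // block_size):
        start = stripe * block_size
        for disk in range(n_disks):
            if disk != parity_disk_for(stripe, n_disks):
                out += members[disk][start:start + block_size]
    return bytes(out)


def parity_ok(members):
    """True if the XOR of all members is zero at every byte offset."""
    return not any(xor_blocks(members))
```

Disk order, block size and parity rotation are exactly the unknowns that have to be right: in this toy model, passing the members to `reassemble` in the wrong order or with the wrong block size produces a scrambled volume, even though the parity check still passes.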

After that, the program created a Virtual Image File, a small (under 1 KB) file with the *.vim extension that describes the structure of the array.

The same program then offers to open the file with various Runtime utilities: Captain Nemo, GetDataBack, Disk Explorer. What I needed, apparently, was Captain Nemo, since the task was to pull the data off the disks. I download it. I install it.

I open my .vim file in it and, oh, a miracle, I see the full folder tree! I found the database files I needed, saved them through Captain Nemo to my own drive, attached them to a freshly installed TFS, and it all worked! You could say I dodged a bullet. The resignation letter went into the shredder.

Now, let's summarize.

The server was an ordinary desktop system unit.

The system and the database lived on the same 3-disk RAID5 array, on the same partition.

The array was built on 3 SATA drives using an Adaptec 2410SA controller.

The cause of the failure could not be established. My suspicion is a power failure. The RAID controller had no BBU (battery backup unit), and apparently power was lost during a write to disk, which corrupted part of the information about the array structure, so the controller started treating 2 of the hard drives as failed.

The moral of this story: you can't completely trust RAID, especially one built on cheap hardware. RAID will never replace backups to separate media.
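As a rough illustration of "backup to separate media", here is a minimal Python sketch that copies a file to another location and verifies the copy by checksum. The names `sha256_of` and `backup_file` are invented for this example, and it assumes the database has already been dumped to an ordinary backup file by the database server's own backup mechanism (live database files are normally locked and should not be copied directly).

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_file(src, backup_dir):
    """Copy src into backup_dir and verify the copy by checksum.

    backup_dir is assumed to live on a different physical medium
    than src (another disk, a network share, and so on).
    """
    src = Path(src)
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    dst = backup_dir / src.name
    shutil.copy2(src, dst)  # copies the data and the file timestamps
    if sha256_of(src) != sha256_of(dst):
        raise IOError(f"backup of {src} does not match the original")
    return dst
```

The checksum comparison is there because a backup that was never verified is only a hope, not a backup.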

I hope this opus helps someone who ends up in this situation. But even more, I hope it helps someone avoid it. As you know, admins are divided into those who do not make backups yet and those who already do. If at least one reader of this article moves into the second group without having to learn it the hard way, I will consider my goal achieved. On this optimistic note, I'll wrap up.

So, one day everything happened that could only appear in a nightmare.

One fine morning, our tester approaches me and asks: “What happened to TFS?” TFS is a Team Foundation Server, a kind of web application tied to MS Visual Studio, used by programmers to track bugs, and maybe for something else, at least for me this TFS was a “black box”.

The server on which this application was launched was a normal system unit, with an external SATA RAID controller Adaptec 2410S. On three SATA-disks with a capacity of 149GB, a RAID5 array was created, on which everything was installed. Batteries from the controller, of course it was not. Yes, now tomatoes will fly into me, but alas - the server was raised before me, they did not give me money for the brand (apparently) money, and even then I didn’t particularly think about it. And in vain.

So, I, not knowing anything bad, ping the server on which TFS was installed. Not pinged. Swearing to myself, I went to the server to check the cables - everything seems to be in order, the server is on, the “Link” diode on the network card is on. I go to the server with KVM'a - and here it is: "Non-system disk or disk error". With the help of the Holy Trinity (Ctrl-Alt-Del), I restart the server. POST passes, the RAID controller is initialized and suddenly ... “Array # 0 has missing required members. Found 0 arrays. "Now I'm starting to swear out loud. I press Ctrl-A, I go into the controller BIOS.

I look - and the status of the array - FAILED. Two of the three discs are shown in gray.

“So what are you, white northern chanterelle!” - I thought. Began to think what actually happened. The controller seems to work. Disks too, as if visible. I start checking both “problematic” disks with the utility in the controller BIOS. Verification succeeds, “0 errors found”. Reboot - again, the array was not found.

Having written a letter of resignation in advance, I unwind the server case, put it next to my computer, connect hard drives (well, they were SATA and I connected them directly to the motherboard) and boot.

The screws seem to be defined. Now we need to somehow pull out the data from them, namely the MS SQL Server 2005 database in which the TFS data was stored. The biggest problem was that it was necessary in some way to recreate the structure of the RAID-array, while not losing data.

Wandering on the Internet, I came across a prog Runtime RAID Reconstructor (www.runtime.org).

In the description it was said that it can automatically determine the structure of the array. I download, run.

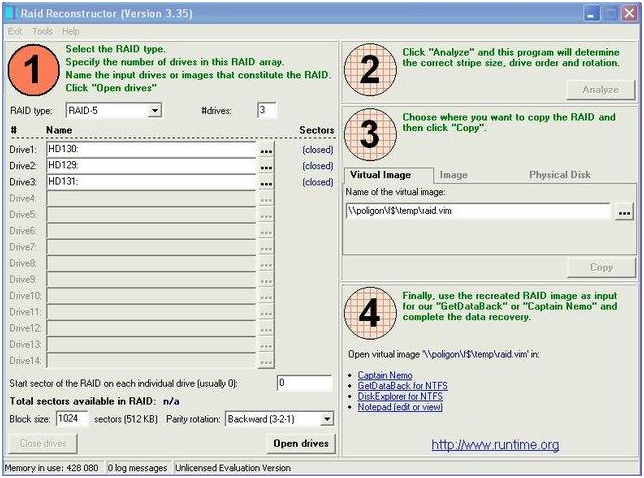

The program window looks like this:

In the left part of the window, I chose my disks. By the way, this program allows you to create complete disk images and then connect them instead of "live" disks - it can be useful when the disk starts to "crumble" and can fail at any minute.

Then I clicked “Open Drives” and “Analyze” to analyze the structure of the array.

In the analysis wizard, by the way, there is no block size of 512Kb (which seems to have been used by me), but you can add a Custom Size, which I actually did. I left the number of sectors for analysis by default (10,000).

The analysis was successful, the structure of the array was determined.

After that, she created the Virtual Image File - a small (less than 1Kb) file with the * .vim extension, which describes the structure of the array.

The same program then offers to open the file with various utilities from Runtime - Captain Nemo, GetDataBack, Disk Explorer. I needed, apparently, Captain Nemo: the task was to remove data from disks. I shake. I bet.

I open my vim to her - and, oh, a miracle! - I saw a full tree of folders! I found the necessary database files, saved them through Captain Nemo to my screw and connected it on a freshly installed TFS, and it all worked! You can say, "carried." The statement of dismissal went to the shredder.

Now - let's summarize.

As a server, the usual systemic was used.

The system together with the database lay on the same 3-disk RAID5 array, on the same partition.

The array was built on 3 SATA screws, using an Adaptec 2410SA controller.

The reasons for the failure could not be established. According to my suspicions, the cause was a power failure. The RAID controller is not equipped with a BBU (backup battery) and, apparently, a power failure occurred during writing to the disk, resulting in some loss of information about the structure of the array, and the controller began to consider 2 hard drives as failed.

The moral of this story is: You can’t completely trust RAID! Especially - made on cheap hardware. RAID will never replace backup to separate media.

I hope this opus will help someone who falls into this situation. But even more, I hope that it will help not to fall into it. As you know, admins are divided into those who do not backup, and those who already do. If at least one of those who have read this article goes into the second, bypassing the first, I will assume that my goal has been fulfilled. On this optimistic note, I will probably finish.

')

Source: https://habr.com/ru/post/100744/
