How to improve data center efficiency?

Anyone who has been reading Habr carefully over the last few months could not help noticing that energy efficiency, resource savings and “alternative energy sources” come up here more and more often (for example, here and here). Yet many readers, for some reason, still believe that “green” IT is just a whim of “spoiled Americans” who fight “global cooling” in winter and “global warming” in summer, and that none of this concerns us; we will be fine without it.

However, apart from “environmental friendliness”, which is undoubtedly important, the main reason for such strong interest in energy-saving technologies is simple, fairly obvious, mercenary and practical. If you think saving electricity in a data center is just “ecologists' nonsense”, then you have never had to deal with really big projects and really big electricity bills, or faced a situation where the data center's power supply limits stop the growth of your IT infrastructure and, as a result, of your company's profits.

For example, at the end of 2008, Facebook was paying about a million dollars a month for the electricity in its data centers alone.

Moving to higher energy-efficiency standards in the data center and saving resources, primarily power and cooling, can bring companies real, and considerable, savings measured in dollars.

NetApp has long and successfully researched efficient IT solutions, and it “started with itself” by applying these solutions when building its own data centers.

That is what today's article is about.

How to improve data center efficiency?

Posted by: Dave Robbins, NetApp


Since 2005, the amount of electricity consumed by IT equipment (servers, storage systems, network infrastructure) for every $1,000 spent on it has increased six- to eightfold on average, dramatically raising the so-called “hidden” costs of data centers and in many cases causing serious problems. As a result, many corporate data centers are forced to look for new ways to become more efficient, both in energy consumption and in the utilization of existing assets such as storage and server resources.

If you install and use the latest IT solutions and technologies without paying attention to how efficiently they are used, you risk spending an unexpectedly large amount of electricity (which can cost your company a tidy sum) just to keep them running. More importantly, if you do not increase the efficiency and utilization of your equipment, the data center where you plan to host it may simply be unable to provide the power and cooling you need. In practice, 42–43% of all data centers in Europe and the United States are already operating at, or approaching, their power supply limits.

In this article, I will describe this problem in more detail, look at the means you have for making your data center more efficient, share some of our own IT practices for increasing efficiency, and describe several implications for storage administrators.

Hidden costs in IT

If you install an inexpensive server in a tier-2 data center, the direct cost of powering and cooling it will be, according to our calculations, about $8,000 per year. The associated data center operating costs are about $1,000–1,300 per year.

Most IT departments do not include these costs in the business case for installing a new server. In most cases, IT organizations do not even see the electricity bills until the data center runs into the limits set by the power company. For this reason, they often simply do not know that such “hidden costs” exist.

Since these costs are “invisible” (most often they are lumped together under “other expenses”), data center management has no way to manage them.
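One way to make these “hidden costs” visible is a rough yearly estimate based on a server's average power draw. The sketch below is only an illustration: it assumes the server runs 24x7 at a constant average draw, approximates cooling and other facility overhead by multiplying IT power by the PUE ratio (total facility power divided by IT power), and uses a hypothetical electricity price. It is not meant to reproduce the figures quoted above, which come from NetApp's own calculations.

```python
HOURS_PER_YEAR = 24 * 365  # assumes continuous, year-round operation


def annual_power_cooling_cost(server_watts: float, pue: float, usd_per_kwh: float) -> float:
    """Estimate one server's yearly electricity bill, including facility overhead.

    The server's own draw is scaled by PUE so that cooling, UPS losses,
    lighting, etc. are charged to it in proportion to its IT load.
    """
    facility_kw = server_watts / 1000.0 * pue
    return facility_kw * HOURS_PER_YEAR * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs: a 400 W server, PUE 2.0, $0.10 per kWh.
    cost = annual_power_cooling_cost(400, 2.0, 0.10)
    print(f"${cost:,.2f} per year")  # prints $700.80 per year
```

Because the cost is linear in every input, doubling the price or the PUE doubles the bill, which is why overhead that never shows up in a server's business case adds up quickly across a whole server farm.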

A survey of many companies in the United States and the European Union showed:

- 47% do not monitor server utilization.

- 55% of data center managers do not keep track of their electricity bills.

- 43% of data centers in the European Union are operating at their power consumption limit.

- 42% of existing data centers in the US will, at the current rate of growth, reach their power supply limit within the next 18–24 months.
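An “18–24 months” horizon like the one above is a compound-growth calculation. The sketch below shows how such an estimate can be made; the current load, the power limit and the monthly growth rate are hypothetical inputs, and real load growth is rarely this smooth.

```python
import math
from typing import Optional


def months_until_power_limit(current_kw: float, limit_kw: float,
                             monthly_growth: float) -> Optional[int]:
    """Whole months until a load growing at a constant monthly rate reaches limit_kw.

    Solves current_kw * (1 + monthly_growth) ** n >= limit_kw for the smallest n.
    Returns 0 if the limit is already reached, or None if the load never grows.
    """
    if current_kw >= limit_kw:
        return 0
    if monthly_growth <= 0:
        return None
    return math.ceil(math.log(limit_kw / current_kw) / math.log(1 + monthly_growth))


# Hypothetical data center: 800 kW today, 1,000 kW utility limit, 1% growth per month.
print(months_until_power_limit(800, 1000, 0.01))  # 23
```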

The key to preparing your data center for the future, and to controlling “hidden costs”, is to increase energy efficiency and make better use of available resources, so that the company can keep growing while spending less on extensive infrastructure expansion.

This can be achieved in several ways:

- Supporting higher “energy density” in the data center

- Modernizing the existing “network core” or building a new, more efficient one

- Virtualizing servers and storage for easier scaling and relocation of applications

Over the past years, we at NetApp have gained extensive experience in all three areas while building our own data centers for internal IT needs.

Data center redesign to achieve greater efficiency and density

To improve efficiency and density in our data centers, we found that we needed to change many of the old, familiar approaches to building them. Here are some of the changes we made to achieve higher efficiency:

- Although data center facilities management and the company's overall IT strategy are typically “separate functions”, we found that coordinating them produces more significant results and a more efficient solution.

- Raised floors in the data center are a relic of the mainframe era. With “cold” and “hot” zones (hot/cold aisles), cooling (as well as power) is supplied from above, which is more energy efficient.

- High-tech problems can sometimes be solved with low-tech solutions. Simple vinyl film curtains act as a physical barrier between the “cold” and “hot” aisles, preventing unwanted mixing of the air streams and saving us more than 1 million kilowatt-hours per year.

- A data center does not have to be very cold. (I already wrote about this on Habr in a separate article. Translator's note.) We gradually raised the supply air temperature to 21 °C, while the air temperature in the “hot aisle” is 35 °C. As a result, we spend less energy on cooling and have extended the period during which we can cool with ordinary outside air to 65% of the year.
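Why a warmer supply temperature extends the outside-air cooling period can be shown with a simple count. The sketch below is a deliberately simplified model, not NetApp's control logic: it assumes outside air is usable whenever it is at least a fixed “approach” margin below the supply-air setpoint, ignores humidity, and runs on a hypothetical handful of hourly readings rather than a real weather file.

```python
from typing import Sequence


def free_cooling_fraction(outdoor_temps_c: Sequence[float],
                          supply_setpoint_c: float,
                          approach_c: float = 2.0) -> float:
    """Share of hours in which outside air alone can meet the supply setpoint.

    An hour counts as usable when the outdoor temperature is at least
    approach_c degrees below the setpoint (humidity is ignored in this sketch).
    """
    if not outdoor_temps_c:
        return 0.0
    usable = sum(1 for t in outdoor_temps_c if t <= supply_setpoint_c - approach_c)
    return usable / len(outdoor_temps_c)


# Hypothetical hourly outdoor readings in deg C.
hours = [10, 15, 17, 19, 20, 25, 30, 12]
print(free_cooling_fraction(hours, 18.0))  # 0.375
print(free_cooling_fraction(hours, 21.0))  # 0.625
```

Raising the setpoint moves the threshold up, so every hour between the old and new thresholds becomes an outside-air hour.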

A British Telecom data center (BT is one of NetApp's customers). The transparent plastic curtains that separate the “cold” and “hot” zones and prevent uncontrolled mixing of air streams at different temperatures are clearly visible.

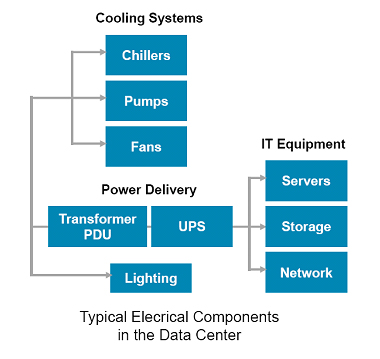

One of the most important metrics of data center efficiency is power usage effectiveness (PUE): the ratio of the total power consumed by the data center to the power consumed by the IT equipment alone. The main consumers of electricity in a data center are shown in Figure 1 below.

Figure 1) Consumers of electricity in the data center.

A typical PUE for a well-designed data center today is about 2.0, meaning that the supporting infrastructure consumes as much electricity as all of the IT equipment combined.
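The PUE definition is a simple ratio, and subtracting 1 from it gives the non-IT overhead drawn per unit of IT load (this is how the PUE of 1.14 mentioned later in the article translates into about 0.14). A minimal sketch; the facility numbers in the example are hypothetical.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_per_it_kw(pue_value: float) -> float:
    """Non-IT power (cooling, UPS losses, lighting, ...) drawn per 1 kW of IT load."""
    return pue_value - 1.0


# Hypothetical facility: 1,000 kW of IT load plus 1,000 kW of supporting infrastructure.
print(pue(2000.0, 1000.0))                 # 2.0
print(round(overhead_per_it_kw(1.14), 2))  # 0.14
```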

Other important performance parameters of a data center include:

- Power supplied per cabinet. The typical value in most data centers today is 3 kW; in other words, the equipment in a cabinet can draw at most 3 kW of electrical power.

- Floor space allocated per cabinet. A typical equipment cabinet takes up 2.6 square meters of floor space.

Using techniques similar to those described above, NetApp has been able to bring PUE in its data centers below the industry-standard 2.0, while supporting higher power density per cabinet and using much less floor space.
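Power per cabinet and floor space per cabinet together determine how much floor area a given IT load needs, which is why higher density saves space. The sketch below assumes, for illustration only, that every cabinet keeps the 2.6 m² footprint quoted above regardless of its power rating and that cabinets are filled to their rated power; the 1,000 kW load is hypothetical.

```python
import math

M2_PER_CABINET = 2.6  # footprint quoted above, assumed constant for all densities


def power_density_kw_per_m2(kw_per_cabinet: float,
                            m2_per_cabinet: float = M2_PER_CABINET) -> float:
    """Floor-level power density implied by the per-cabinet figures."""
    return kw_per_cabinet / m2_per_cabinet


def floor_area_m2(it_load_kw: float, kw_per_cabinet: float,
                  m2_per_cabinet: float = M2_PER_CABINET) -> float:
    """Floor area needed for an IT load when each cabinet is filled to its rating."""
    cabinets = math.ceil(it_load_kw / kw_per_cabinet)
    return cabinets * m2_per_cabinet


for kw in (3.0, 8.0, 12.0):
    print(f"{kw:>4} kW/cabinet: {power_density_kw_per_m2(kw):.2f} kW/m2, "
          f"{floor_area_m2(1000, kw):.1f} m2 for 1,000 kW")
```

Under these assumptions, going from 3 kW to 8 kW per cabinet cuts the floor area for the same load from about 868 m² to 325 m².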

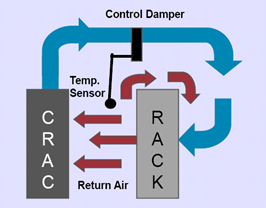

For example, our second-generation data center uses an airflow regulator and a controlled damper in the “cold” aisle that adjusts cooling intensity, preventing oversupply and delivering exactly the cooling capacity the current equipment load requires. This allowed us to achieve a PUE of 1.40 with 801 cabinets at 3.5 kW per cabinet. It saves the company an average of $1.7 million per year compared with the same data center running at the standard PUE of 2.0.

Figure 2) Airflow distribution in NetApp's second-generation data center.
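Savings like these follow from the difference between two PUE values: at the same IT load, the facility draws (baseline PUE - actual PUE) × IT load less power. The sketch below assumes every cabinet draws its full rated power around the clock and uses a hypothetical price of $0.10 per kWh; because the result scales linearly with both assumptions, it is an order-of-magnitude check, not a reproduction of NetApp's published figures.

```python
HOURS_PER_YEAR = 8760


def annual_savings_usd(cabinets: int, kw_per_cabinet: float, pue_actual: float,
                       pue_baseline: float = 2.0, usd_per_kwh: float = 0.10,
                       load_factor: float = 1.0) -> float:
    """Yearly cost avoided by running an IT load at pue_actual instead of pue_baseline.

    load_factor is the average fraction of rated cabinet power actually drawn
    (1.0 means every cabinet runs at its full rating all year).
    """
    it_load_kw = cabinets * kw_per_cabinet * load_factor
    avoided_kw = it_load_kw * (pue_baseline - pue_actual)
    return avoided_kw * HOURS_PER_YEAR * usd_per_kwh


# Second- and third-generation figures from the text, at the hypothetical price above.
print(f"{annual_savings_usd(801, 3.5, 1.40):,.0f}")  # about 1.47 million
print(f"{annual_savings_usd(720, 8.0, 1.30):,.0f}")  # about 3.53 million
```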

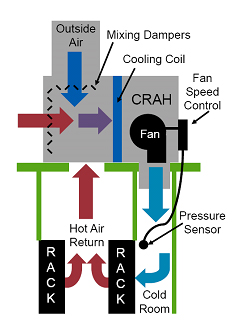

Our third-generation design is implemented in a data center with 720 cabinets at 8 kW per cabinet and a PUE of 1.30, which saves the company $4.3 million per year compared with a “standard data center” at a PUE of 2.0. We are also currently deploying a combined data center (for IT services plus engineering workloads) that will house 1,800 cabinets at 12 kW per cabinet, with an estimated PUE of 1.2. Hot air from this data center will heat the offices in the winter months.

Translator's note: This is a very impressive figure. Earlier, I wrote a post about AISO, a hosting company that runs autonomously on solar panels, with batteries at night. The PUE of the hosting data center they built, achieved under an extremely strict power-saving regime, was 1.14, which can be considered something of a record and close to the best achievable today.

That is, all non-IT systems, including cooling, uninterruptible power supplies, ventilation, lighting and so on, consume only about 0.14 of what the IT equipment itself consumes.

Figure 3) Third-generation design of NetApp data centers.

You can read more about the efficiency strategy we used in our Tech OnTap article and in the published white paper.

NetApp's third-generation data center at Research Triangle Park (RTP). Visible are the plastic curtains separating the “cold” aisles (at the front of the racks) from the “hot” aisles (at the rear) and preventing undesirable mixing, as well as the overhead cable runs.

Increasing efficiency and density in your data center is not enough on its own. You will also need to increase the utilization of the IT assets already deployed there by redesigning the network infrastructure where necessary, consolidating as many resources as possible, and virtualizing both servers and storage. You can read about one approach to building a virtualized data center that combines the Cisco Unified Computing System (UCS) with NetApp unified storage in this article.

Network core redesign

In redesigning the data center network core, we had two goals:

- Standardize on a “unified network fabric”.

- Redesign the network infrastructure and topology, eliminating isolated application islands.

The advent of Converged Network Adapters (CNAs) and the Fibre Channel over Ethernet (FCoE) protocol made it possible to bring all of our existing Fibre Channel devices into a single network fabric. No longer splitting the storage network into several technologically incompatible segments reduced energy consumption in the data center. A single cable can connect to any network (SAN, LAN, or HPC), which helps you deploy and launch new applications and services quickly.

Most current networks are designed to isolate business applications from one another, traditionally for security and performance reasons. Unfortunately, such isolated IT resources limit and reduce equipment utilization. A converged network makes it possible to consolidate storage, increase equipment utilization, and pave the way for full server and storage virtualization.

Virtualization

Redesigning the network core allowed us to get much more benefit from virtualizing our server and storage infrastructure. Existing resources can be fully consolidated, and the common resource pool becomes available to any application that needs it. Because applications can move freely both within a data center and between data centers, service and application downtime is significantly reduced.

NetApp is actively migrating the old servers in its data centers to virtual machines and consolidating storage. For example, an analysis of the engineering labs' server farm showed that about 4,600 x86 servers could be moved to virtual machines. This whole setup cost about $1.4 million a year for power and cooling alone and occupied 190 cabinets in the data center. Virtualizing the physical servers at a 20:1 ratio moved all of them onto 230 virtual machine hosts, which cost us only $70,000 a year in power and cooling and fit into just 10 cabinets. The initial phase of one of these projects, at our engineering lab in Bangalore, India, is described in a recent Tech OnTap article.

Another Tech OnTap article describes NetApp's approach to consolidating storage in one of our corporate data centers. The results were as follows:
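The consolidation figures above are straightforward arithmetic: the host count is the server count divided by the consolidation ratio, rounded up, and the savings are relative reductions. A small sketch that checks the lab numbers and the 23:1 ratio reported for BT further on; the helper names are hypothetical.

```python
import math


def hosts_needed(physical_servers: int, vms_per_host: int) -> int:
    """Virtualization hosts required at a given consolidation ratio (rounded up)."""
    return math.ceil(physical_servers / vms_per_host)


def achieved_ratio(physical_servers: int, hosts: int) -> float:
    """Average number of former physical servers running on each host."""
    return physical_servers / hosts


def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from before to after, in percent."""
    return 100.0 * (before - after) / before


print(hosts_needed(4600, 20))                # 230
print(percent_reduction(1_400_000, 70_000))  # 95.0 (power and cooling cost)
print(round(percent_reduction(190, 10), 1))  # 94.7 (cabinets)
print(round(achieved_ratio(3103, 134), 1))   # 23.2 (BT, reported as 23:1)
```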

- Storage utilization increased by an average of 60%.

- Floor space occupied was reduced from 24.83 cabinets to 5.48.

- 50 storage systems were replaced by 10.

- Power consumption was reduced to 41,184 kWh per month.

- Capacity and performance increased significantly.

The general approach is described in our white paper Reducing power consumption through storage efficiency.

Many NetApp users and customers achieve similar results in their data centers. For example, a large telecom operator (BT, translator's note) consolidated 3,103 physical servers onto 134 virtual machine hosts (23:1) and increased storage utilization from 25% to 70%, saving $2.25 million on power and freeing up 660 cabinets and 8,500 network ports. As a result, the payback period was 8 months, new servers requested by IT were up and running the same day (versus 3–5 days before), and backup time dropped from 96 hours to less than 30 minutes.

British Telecom clears its data centers of 75 tons of equipment that became superfluous after the company's move to a virtual server environment.

Results from a storage administrator's point of view

The transition to shared resources and a virtualized ecosystem has a number of significant consequences for storage administrators. Since server and storage resources are now accessible from anywhere, including from outside the data center, the usual ways of organizing access and work are changing.

Here is what Jessica Yu, of the storage team in NetApp's IT division, says about the benefits, based on her own experience:

Benefits of shared resources include:

- Space savings. Flexible resource allocation, the ability to distribute resources more efficiently across the whole organization, and simpler capacity planning can significantly reduce storage space requirements.

- Ease of administration. Fewer physical servers requiring attention and physical maintenance simplify the overall infrastructure and reduce maintenance costs. A simpler architecture leads to better standardization and makes better, more automated processes possible.

- Fault tolerance. Shared storage systems have redundancy features that let you keep working when hardware components fail.

- Improved storage efficiency. Storage virtualization lets us use thin provisioning for all applications. Standardization also made deduplication more effective: because more data is stored centrally, more of it can be processed by deduplication, which frees up more space.
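Deduplication works because identical blocks only need to be stored once, which is also why pooling more data in one place raises the savings. The sketch below illustrates generic fixed-size block deduplication with SHA-256 fingerprints; it is not NetApp's implementation, the 4 KB block size is an assumption, and production systems typically also verify a fingerprint match byte-for-byte before sharing a block.

```python
import hashlib
from typing import Dict, List, Tuple

BLOCK_SIZE = 4096  # assumed block size for this sketch


def dedup_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> Tuple[Dict[str, bytes], List[str]]:
    """Split data into fixed-size blocks and keep each distinct block once.

    Returns (store, block_map): store maps a SHA-256 fingerprint to block
    contents, and block_map lists the fingerprint of every logical block in order.
    """
    store: Dict[str, bytes] = {}
    block_map: List[str] = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).hexdigest()
        store.setdefault(fingerprint, block)  # later duplicates only add a reference
        block_map.append(fingerprint)
    return store, block_map


def restore(store: Dict[str, bytes], block_map: List[str]) -> bytes:
    """Rebuild the original byte stream from the block map."""
    return b"".join(store[fp] for fp in block_map)


def space_saved(logical_bytes: int, store: Dict[str, bytes]) -> float:
    """Fraction of logical capacity that deduplication avoids storing."""
    physical = sum(len(block) for block in store.values())
    return 1 - physical / logical_bytes if logical_bytes else 0.0


# Two hypothetical VM images that share three of their four blocks.
shared = b"a" * BLOCK_SIZE + b"b" * BLOCK_SIZE + b"c" * BLOCK_SIZE
volume = shared + b"d" * BLOCK_SIZE + shared + b"e" * BLOCK_SIZE
store, block_map = dedup_blocks(volume)
assert restore(store, block_map) == volume
print(len(block_map), len(store), space_saved(len(volume), store))  # 8 5 0.375
```

Storing the second image costs only one new block here; the more similar data lands on the same system, the larger that effect becomes.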

With shared storage, everything is standardized. As a result, planning, purchasing equipment and implementing storage projects have become much easier. Previously, we ran a large “zoo” of different storage systems, and planning and growth were a real nightmare that consumed both data center resources and the admins' time:

- Previously, it took weeks to negotiate and coordinate storage capacity expansion; now we settle all storage allocation planning questions at a monthly meeting.

- Previously, we spent days discussing storage layout with our project team; now gathering requirements and developing a solution template takes a few hours.

- Finding the right amount of space for a task across our various storage systems used to take hours. Now it takes minutes.

- Our virtualized IT environment has made most tasks easier, so work that previously required costly level 3 support is now handled by level 2 staff.

- Implementing SnapManager for Virtual Infrastructure (SMVI) on our VMware system allowed us to delegate some tasks that previously required storage administrators to server administrators, who can now create snapshots of their data or restore data from them on their own.

All this saved us many weeks of person-hours and let us use our staff more effectively.

Conclusions

Data centers today urgently need to use equipment and resources more efficiently in order to keep meeting business requirements while IT budgets stagnate (or even shrink) and the power consumption and heat output of IT equipment keep growing. This article outlined several steps you can take to use your data center's power and cooling resources more efficiently and to increase the utilization of your IT resources.

Dave Robbins

Chief Technology Officer for IT

NetApp

Working in IT since 1979, Dave has seen and taken part in many technological (r)evolutions in the IT world, which have shaped his understanding of data center efficiency and emerging cloud technologies.

At NetApp, he is responsible for identifying and selecting new IT technologies and for developing the roadmap for the company's IT organization.

P.S. While this article was being translated, it was announced that the third-generation data center in Research Triangle Park (RTP) mentioned above, launched in 2009, became the first data center to receive the prestigious EPA Energy Star award for its energy-saving technologies and high energy efficiency, scoring 99 out of 100 points under an EPA-approved evaluation method.

This data center occupies more than 12,000 square meters, contains 2,160 equipment cabinets, is 80% more energy efficient, and cost a third less to build than an “industry average” data center.

Source: https://habr.com/ru/post/100074/
